AI Digest: Transforming Repetitive Scatological Data Into Engaging Audio Content

The Challenge of Scatological Data Analysis

Processing large volumes of scatological data presents unique difficulties. This type of data is often unstructured, inconsistent, and sensitive, requiring careful handling and sophisticated analytical techniques.

Data Cleaning and Preprocessing

The crucial first step is cleaning the raw data to remove noise and inconsistencies. This involves several preprocessing steps:

- Data Normalization and Standardization: Transforming data into a consistent format, ensuring uniformity across different sources.

- Removing Irrelevant Characters: Eliminating extraneous symbols, punctuation, or irrelevant text that can interfere with analysis.

- Handling Missing Values: Addressing gaps in the data through imputation techniques or removal, depending on the context.

- Data Transformation: Converting data into a suitable format for AI algorithms, for example, converting categorical data into numerical representations.
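The preprocessing steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a complete pipeline: the function names are hypothetical, the character filter assumes English-language ASCII text, and median imputation is only one of several reasonable ways to fill gaps.

```python
import re
import unicodedata
from statistics import median
from typing import Optional


def normalize_text(raw: str) -> str:
    """Normalize Unicode, lowercase, drop stray symbols, collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw).lower()
    # Assumption: only ASCII letters, digits, and whitespace carry meaning.
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def impute_median(values: list[Optional[float]]) -> list[float]:
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def one_hot(labels: list[str]) -> list[list[int]]:
    """Encode categorical labels as one-hot vectors, columns in sorted order."""
    categories = sorted(set(labels))
    return [[int(label == c) for c in categories] for label in labels]
```

Whether to impute or drop a missing value depends on the dataset; if most values in a column are missing, removal is usually the safer choice.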

The Limitations of Traditional Methods

Manual analysis of large volumes of scatological data is slow and prone to human error. Traditional statistical methods often struggle with it as well, because the data is unstructured and inconsistent.

- Time-Consuming: Manual review is extremely slow and impractical for large datasets.

- Subjective Interpretation: Human analysts may introduce bias into their interpretations.

- Scalability Issues: Traditional methods don't scale effectively to handle the ever-increasing volume of data.

- Difficulty in Identifying Subtle Patterns: Human analysts may miss complex relationships hidden within the data.

Leveraging AI for Scatological Data Transformation

AI algorithms offer a powerful solution to the challenges posed by scatological data analysis. They can efficiently process large datasets, identify complex patterns, and transform the data into engaging audio content.

Natural Language Processing (NLP) Techniques

If the scatological data includes text, NLP techniques can extract structured information from it.

- Sentiment Analysis: Determining the overall tone and emotion expressed in the text.

- Topic Modeling: Identifying recurring themes and topics within the data.

- Named Entity Recognition (NER): Extracting key entities, such as names, locations, and organizations.

- NLP Tools and Libraries: Popular tools include spaCy, NLTK, and Stanford CoreNLP.
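As a rough illustration of how recurring themes can be surfaced, the sketch below ranks terms by the number of entries they appear in. It is a crude stand-in for topic modeling, not a real topic model, and it uses only the standard library rather than spaCy or NLTK. The stop-word list and the function name are hypothetical.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; a real pipeline would use a fuller one.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "was", "it"}


def recurring_terms(entries: list[str], top_n: int = 3) -> list[tuple[str, int]]:
    """Return the top_n terms ranked by how many entries they appear in."""
    doc_freq = Counter()
    for entry in entries:
        # A set, so each term counts at most once per entry.
        tokens = set(re.findall(r"[a-z']+", entry.lower()))
        doc_freq.update(tokens - STOP_WORDS)
    # Sort by count (descending), then alphabetically so ties are deterministic.
    ranked = sorted(doc_freq.items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:top_n]
```

Counting entries rather than raw occurrences keeps one long, repetitive entry from dominating the ranking.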

Machine Learning for Pattern Recognition

Machine learning algorithms can identify patterns and trends within the data that human analysts could easily miss.

- Supervised Learning: Using labeled data to train models for specific tasks, such as classifying different types of scatological information.

- Unsupervised Learning: Discovering hidden patterns and structures in unlabeled data, for example, clustering similar entries together.

- Specific Models: Clustering algorithms (e.g., k-means, DBSCAN) and classification models (e.g., support vector machines, random forests) are particularly useful.
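To make the clustering idea concrete, here is a small pure-Python sketch of k-means (Lloyd's algorithm). It assumes the entries have already been converted to numeric feature vectors, and it takes the initial centroids from the caller instead of choosing them at random, which keeps the result reproducible. For real workloads a library implementation such as scikit-learn's `KMeans` is the more practical choice.

```python
import math

Point = tuple[float, ...]


def kmeans(points: list[Point], centroids: list[Point], iterations: int = 10):
    """Lloyd's algorithm: alternate assignment and centroid update steps."""
    labels: list[int] = []
    for _ in range(iterations):
        # Assignment step: index of the nearest centroid for every point.
        labels = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        updated = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(old)  # keep an empty cluster's centroid in place
        if updated == centroids:
            break  # converged: no centroid moved
        centroids = updated
    return labels, centroids
```

The function returns one cluster label per input point together with the final centroids.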

AI-Powered Audio Generation

AI can transform the analyzed data into various engaging audio formats.

- Text-to-Speech (TTS): Converting textual summaries or narratives into natural-sounding speech using advanced TTS engines.

- Audio Generation Tools: Platforms such as Amazon Polly, Google Cloud Text-to-Speech, and Microsoft Azure Text-to-Speech offer high-quality TTS capabilities.

- Podcast Creation: AI can assist in creating structured podcasts summarizing key findings or telling compelling stories based on the data.

- Data Reporting: Generating audio reports summarizing key trends and patterns identified within the scatological data.
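Cloud TTS services generally accept SSML (Speech Synthesis Markup Language) as input, so one way to prepare an audio report is to wrap the summarized findings in SSML before sending them to an engine. The sketch below only builds the markup and makes no API calls. The function name and the pause length are assumptions, and each provider has its own requirements for the root element and voice selection, so treat the output as a starting point.

```python
from xml.sax.saxutils import escape


def findings_to_ssml(title: str, findings: list[str], pause_ms: int = 500) -> str:
    """Wrap a title and a list of findings in a minimal SSML document."""
    parts = ["<speak>", f"<p>{escape(title)}</p>"]
    for finding in findings:
        # Short pause before each finding so the items are audibly separated.
        parts.append(f'<break time="{pause_ms}ms"/>')
        # escape() protects &, <, and > so the markup stays well-formed.
        parts.append(f"<p>{escape(finding)}</p>")
    parts.append("</speak>")
    return "".join(parts)
```

Escaping matters here because summary text produced from raw data can easily contain characters that would otherwise break the XML.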

Ethical Considerations and Data Privacy

Working with sensitive scatological data necessitates a strong emphasis on ethical considerations and data privacy.

Anonymization and Data Security

Protecting individual privacy is paramount.

- Data Anonymization Techniques: Removing or altering personally identifiable information before analysis.

- Data Encryption: Protecting data both in transit and at rest using strong encryption algorithms.

- Access Control: Limiting access to the data to authorized personnel only.

- Compliance with Regulations: Adhering to relevant data privacy regulations (e.g., GDPR, HIPAA).
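Two of the simpler anonymization measures can be sketched with the standard library: replacing direct identifiers with a keyed hash, and masking email addresses in free text. The function names, the 16-character truncation, and the email pattern are illustrative assumptions. Note that a keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can link records back together.

```python
import hashlib
import hmac
import re

# Simplified pattern for illustration; it will not match every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash: linkable, but not readable."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated for readability (assumption)


def redact_emails(text: str) -> str:
    """Mask anything that looks like an email address in free text."""
    return EMAIL_RE.sub("[EMAIL]", text)
```

The secret key should be stored separately from the data and covered by the same access controls.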

Responsible AI Development

Responsible AI development is crucial to avoid bias and ensure fairness.

- Bias Mitigation Techniques: Employing techniques to identify and mitigate potential biases in the AI algorithms.

- Transparency and Explainability: Ensuring that the AI models are transparent and their decisions can be explained.

- Continuous Monitoring: Regularly monitoring the AI system for unexpected behavior or bias.

- Ethical Guidelines: Adhering to ethical guidelines for AI development and deployment.
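One simple check that can support continuous monitoring is comparing how often a model produces a positive prediction for each group in the data. The sketch below computes that gap. It assumes binary predictions (0 or 1) and a group label per record, both hypothetical inputs, and it covers only one narrow notion of fairness among many.

```python
from collections import defaultdict


def positive_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions: list[int], groups: list[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups).values()
    return max(rates) - min(rates)
```

A gap that grows over time is a signal to investigate, not proof of bias on its own.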

Conclusion

AI is proving to be a powerful tool for transforming seemingly mundane data, such as repetitive scatological information, into engaging audio content. With the techniques described here, we can analyze this type of data efficiently and surface insights that would be impractical to find through manual review. Prioritize ethical considerations and data privacy throughout the entire process. Start exploring how AI digests can turn your repetitive scatological data into compelling audio content.

Featured Posts

-

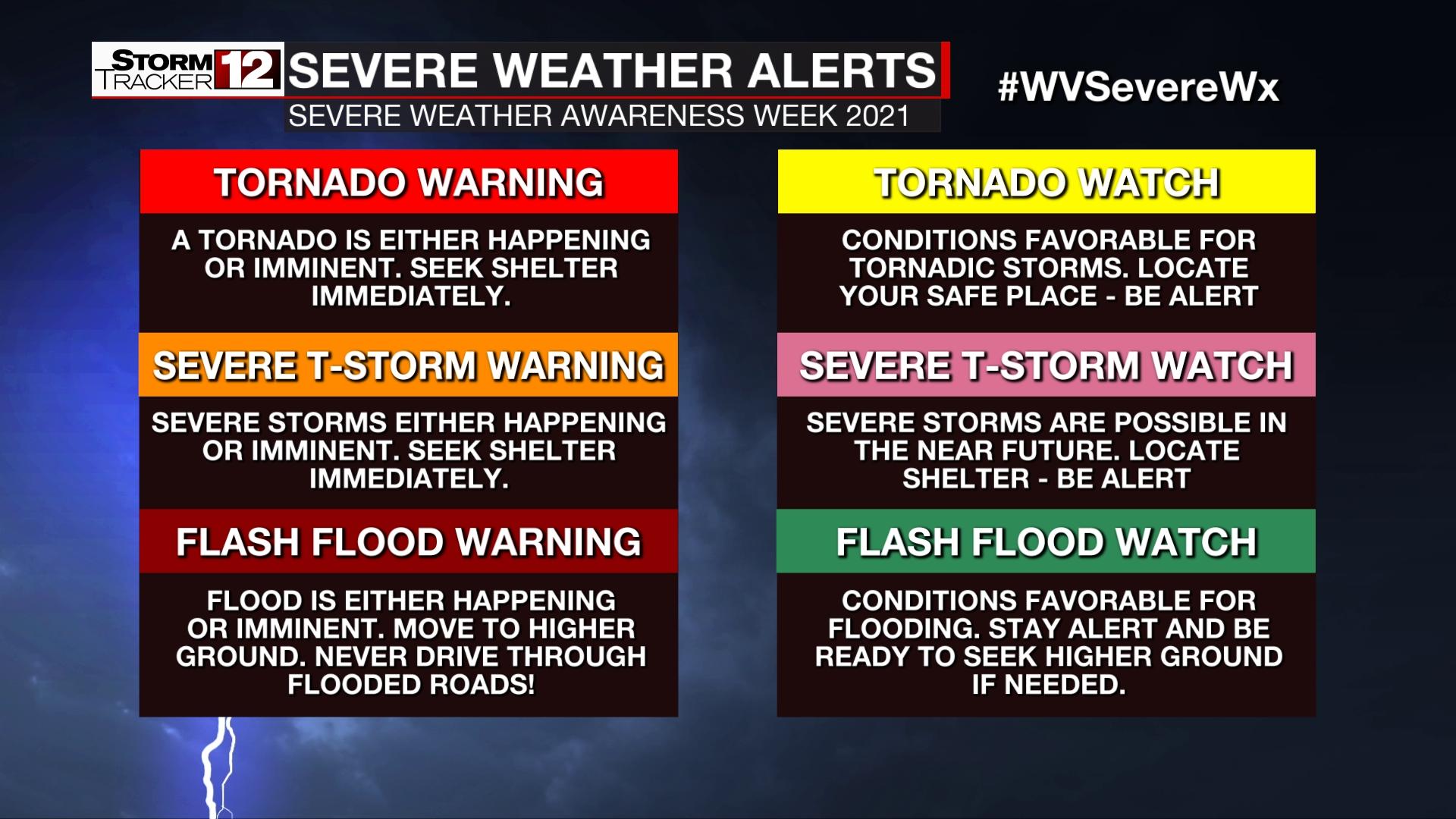

Active Vs Expired Storm Alerts Your Guide To Carolina Severe Weather Safety

May 31, 2025

Active Vs Expired Storm Alerts Your Guide To Carolina Severe Weather Safety

May 31, 2025 -

Kpc News Com Delving Into The Rich History Of Specific Location

May 31, 2025

Kpc News Com Delving Into The Rich History Of Specific Location

May 31, 2025 -

Munguia Denies Doping Allegations Following Adverse Test

May 31, 2025

Munguia Denies Doping Allegations Following Adverse Test

May 31, 2025 -

Ita Airways Official Airline Of The Giro D Italia 2025

May 31, 2025

Ita Airways Official Airline Of The Giro D Italia 2025

May 31, 2025 -

Munguias Doping Denial Examining The Adverse Test Result

May 31, 2025

Munguias Doping Denial Examining The Adverse Test Result

May 31, 2025