AI In Therapy: Privacy Concerns And The Potential For State Surveillance

H2: Data Security and Confidentiality in AI-Powered Therapy Platforms

The sensitive nature of therapeutic data makes AI-powered therapy platforms an attractive target for attackers and raises the stakes of any security breach. The consequences of a breach extend far beyond simple data loss: exposed records can do lasting harm to patients' lives.

H3: Data breaches and their potential consequences:

The healthcare sector has a history of high-profile data breaches, demonstrating the vulnerability of sensitive patient information. A breach of AI-powered therapy data would be particularly damaging due to the intimate and personal nature of the information involved.

- Examples of past large-scale data breaches: The 2015 Anthem breach, which exposed the records of nearly 80 million people held by a US health insurer, and the 2017 Equifax breach, in which a credit-reporting agency lost the personal data of nearly 150 million people, show how large and damaging a single breach can be. A comparable breach of mental health data would expose far more intimate information, with serious consequences for vulnerable individuals.

- The legal and ethical ramifications of such breaches: In the US, HIPAA obliges covered entities such as healthcare providers and health plans, along with their business associates, to protect patient data; in Europe, the GDPR treats health information as a special category of personal data that requires stronger protection. Breaches can result in significant fines, lawsuits, and reputational damage. Ethically, a breach represents a profound betrayal of trust and may discourage people from seeking necessary mental healthcare.

- The potential for identity theft and emotional distress: The exposure of personal information, including diagnoses, treatment plans, and potentially even private communications, can lead to identity theft, financial fraud, and severe emotional distress for individuals already struggling with mental health challenges.

H3: Encryption and data anonymization techniques:

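Encryption and data anonymization are crucial safeguards, but neither is foolproof: keys can be stolen, implementations can be flawed, and anonymized records can sometimes be re-identified by linking them with other datasets. Protecting therapy data therefore requires several layers of defense rather than any single technique.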

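- Different encryption methods and their strengths/weaknesses: Encryption in transit (for example, TLS) protects data moving between a patient's device and the platform's servers, while encryption at rest protects stored records. Strong, correctly implemented encryption is very difficult to break directly; in practice, failures tend to come from stolen keys, misconfiguration, or data that usually has to be decrypted before an AI model can process it. Managing keys securely also adds complexity that can get in the way of legitimate users who need access.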

- The challenges of anonymizing data while preserving its utility for research and development: Anonymization protects patient privacy, but every identifying detail that is removed or coarsened (exact age, location, dates) also reduces the data's value for research and for developing better AI-powered therapeutic tools. Striking the right balance between privacy and utility is a significant challenge.

- The role of regulatory compliance (HIPAA, GDPR, etc.): Strict adherence to data protection regulations such as HIPAA in the US and the GDPR in Europe is essential. These regulations provide a legal framework for data security and patient rights, but enforcing them and adapting them to rapid advances in AI remain ongoing challenges.
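The privacy-utility trade-off described in the list above can be illustrated with a minimal sketch. It assumes a toy dataset of hypothetical records: direct identifiers are replaced with keyed hashes (pseudonymization), the quasi-identifiers age and ZIP code are generalized, and the result is scored by k-anonymity, the size of the smallest group of records that share the same quasi-identifiers. The key, field names, helper functions, and records are all hypothetical, and this is not a complete anonymization scheme.

```python
import hashlib
import hmac
from collections import Counter

# HYPOTHETICAL secret key for illustration only. In a real system it would be
# stored in a key-management service, never alongside the data it protects.
PSEUDONYM_KEY = b"example-secret-key"


def pseudonymize(patient_id: str) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256),
    truncated to 16 hex characters for readability.
    Without the key, tokens cannot be recomputed from guessed IDs."""
    digest = hmac.new(PSEUDONYM_KEY, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def generalize(record: dict, age_band: int, zip_digits: int) -> dict:
    """Coarsen the quasi-identifiers: bucket age into bands and truncate the ZIP code."""
    low = (record["age"] // age_band) * age_band
    zip_code = record["zip"]
    return {
        "pid": pseudonymize(record["patient_id"]),
        "age": f"{low}-{low + age_band - 1}",
        "zip": zip_code[:zip_digits] + "*" * (len(zip_code) - zip_digits),
        "diagnosis": record["diagnosis"],  # sensitive attribute kept for research utility
    }


def k_anonymity(records: list) -> int:
    """Size of the smallest group of records sharing the same (age, zip) values."""
    groups = Counter((r["age"], r["zip"]) for r in records)
    return min(groups.values())


# HYPOTHETICAL sample records, not real patient data.
raw = [
    {"patient_id": "A100", "age": 34, "zip": "02139", "diagnosis": "anxiety"},
    {"patient_id": "A101", "age": 37, "zip": "02138", "diagnosis": "depression"},
    {"patient_id": "A102", "age": 31, "zip": "02141", "diagnosis": "PTSD"},
    {"patient_id": "A103", "age": 45, "zip": "02139", "diagnosis": "anxiety"},
    {"patient_id": "A104", "age": 48, "zip": "02142", "diagnosis": "depression"},
    {"patient_id": "A105", "age": 42, "zip": "02144", "diagnosis": "insomnia"},
]

fine = [generalize(r, age_band=5, zip_digits=4) for r in raw]
coarse = [generalize(r, age_band=10, zip_digits=3) for r in raw]
print("k with 5-year age bands, 4-digit ZIP:", k_anonymity(fine))
print("k with 10-year age bands, 3-digit ZIP:", k_anonymity(coarse))
```

With these records, the finer generalization leaves every patient in a group of one (k = 1), so each person could be singled out by age and ZIP code; the coarser one yields k = 3. That gain in privacy is paid for with less precise age and location data. k-anonymity alone does not prevent inference when everyone in a group shares the same diagnosis, and under the GDPR pseudonymized data is still treated as personal data.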

H2: Algorithmic Bias and Its Impact on Vulnerable Populations

AI algorithms are trained on data, and if that data reflects existing societal biases, the algorithm can reproduce and even amplify them. This is particularly concerning in mental healthcare, where bias can lead to unequal access to care and discriminatory outcomes.

H3: How algorithms can perpetuate existing inequalities:

AI systems, if not carefully designed and monitored, can inadvertently discriminate against certain demographic groups. This can manifest in various ways, from misdiagnosis to unequal access to treatment.

- Examples of bias in AI algorithms from other sectors: Studies have revealed biases in facial recognition technology, loan applications, and even criminal justice risk assessment tools, highlighting the pervasive nature of algorithmic bias. These examples underscore the urgent need for vigilance in the development of AI in healthcare.

- The impact of biased algorithms on access to mental health care for marginalized groups: Algorithmic bias can exacerbate existing health inequalities, potentially denying marginalized groups access to appropriate and effective mental health services. This could disproportionately affect racial minorities, individuals from low socioeconomic backgrounds, and members of the LGBTQ+ community.

- The need for diverse and representative datasets in AI development: To mitigate bias, AI algorithms need to be trained on diverse and representative datasets that accurately reflect the population they serve. This requires conscious effort to address data imbalances and ensure equitable representation in the development process.
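A first check on whether a dataset is representative can be sketched as a comparison between each group's share of the training data and its share of the population a tool is meant to serve. This is a minimal sketch under stated assumptions: the group labels, counts, reference shares, the helper functions, and the 2-percentage-point tolerance are all hypothetical, and choosing the right reference population and group definitions is itself a substantive decision.

```python
def representation_gaps(training_counts: dict, population_share: dict) -> dict:
    """Each group's share of the training data minus its share of the reference
    population. Negative values mean the group is underrepresented."""
    total = sum(training_counts.values())
    return {
        group: training_counts.get(group, 0) / total - share
        for group, share in population_share.items()
    }


def underrepresented(gaps: dict, tolerance: float) -> list:
    """Groups whose share falls short of the reference by more than `tolerance`."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# HYPOTHETICAL counts and reference shares, for illustration only.
training_counts = {"group_a": 700, "group_b": 200, "group_c": 100}
population_share = {"group_a": 0.60, "group_b": 0.25, "group_c": 0.15}

gaps = representation_gaps(training_counts, population_share)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
print("Underrepresented:", underrepresented(gaps, tolerance=0.02))
```

With these numbers the sketch flags group_b and group_c as underrepresented. Balanced counts are necessary but not sufficient: a dataset can match the population overall and still carry biased labels, for example diagnoses recorded differently for different groups.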

H3: Lack of transparency and accountability:

Many AI algorithms operate as "black boxes," making it difficult to understand how they arrive at their conclusions. This lack of transparency makes it challenging to identify and correct biases.

- The challenge of explaining how an AI algorithm arrived at a particular decision: When clinicians and patients cannot see why a system flagged or deprioritized someone, it is hard to contest the decision, assign responsibility, or correct an unfair or discriminatory outcome, and trust in the system suffers.

- The need for greater transparency and accountability in the development and deployment of AI in therapy: Developers should disclose how their systems are built and evaluated, including what data they were trained on and their known limitations, so that independent auditors can verify fairness and accuracy.

- The role of regulatory bodies in ensuring fairness and equity: Regulatory bodies have a crucial role to play in ensuring fairness and equity in the development and deployment of AI in therapy. This includes establishing clear guidelines, promoting transparency, and enforcing accountability.
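An independent audit of the kind described above often starts by comparing how a system's decisions are distributed across groups. The sketch below assumes a hypothetical triage model whose decisions have been logged together with each patient's group, and computes per-group referral rates, the gap between the highest and lowest rate, and their ratio (a demographic parity check). The group labels, the log, the helper functions, and the 0.8 review threshold are all assumptions for illustration; the threshold echoes the "four-fifths" heuristic from US employment-selection guidance, not any mental-health standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, flagged) pairs, where `flagged` is True when the
    HYPOTHETICAL triage model referred the patient for follow-up care.
    Returns the share of patients referred in each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in decisions:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def parity_report(rates, threshold=0.8):
    """Compare the lowest and highest group rates.
    `threshold` is an ASSUMED cut-off borrowed from the US "four-fifths" heuristic."""
    highest = max(rates.values())
    lowest = min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "difference": highest - lowest,
        "ratio": ratio,
        "flag_for_review": ratio < threshold,
    }


# HYPOTHETICAL audit log: 10 patients per group.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)
print(rates)
print(parity_report(rates))
```

Equal referral rates are not always the right target: if clinical need genuinely differs between groups, auditors would also compare error rates, such as how often patients who needed care were missed in each group. A gap like the one in this example is a prompt for investigation, not proof of bias.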

H2: The Potential for State Surveillance and Erosion of Patient Rights

The potential for governments to access and misuse data collected through AI-powered therapy platforms raises serious concerns about state surveillance and the erosion of patient rights.

H3: Government access to sensitive patient data:

The sensitive nature of mental health data makes it a potentially valuable target for surveillance. Legal frameworks governing data access vary across jurisdictions, but the potential for misuse exists.

- Legal frameworks governing data access by law enforcement and intelligence agencies: Legal frameworks governing access to patient data by government agencies are complex and vary widely across jurisdictions. There is a need for clear and consistent legislation to protect patient confidentiality while balancing legitimate national security interests.

- The potential for misuse of data for political or social control: The misuse of mental health data for political or social control is a serious concern. Data could be used to identify and target individuals based on their beliefs, affiliations, or mental health status.

- The chilling effect on patients' willingness to seek help: The fear of government surveillance could deter individuals from seeking necessary mental healthcare, exacerbating existing mental health challenges and hindering access to vital services.

H3: Balancing national security with individual liberties:

Balancing national security concerns with individual liberties is a complex ethical and legal challenge. Robust legal protections and public debate are essential to ensure responsible use of AI in therapy.

- Arguments for and against government access to mental health data: Supporters of access point to potential benefits for national security and public safety; opponents stress the risks to individual privacy and freedom of expression, the danger of targeting people because of their mental health status, and the deterrent effect on seeking care. Both sides raise serious points, which is what makes the issue so difficult.

- The importance of robust legal protections and oversight: Robust legal protections and independent oversight mechanisms are critical to safeguarding patient rights and preventing the misuse of sensitive data.

- The need for public debate and engagement: Open and informed public debate is crucial to shape policies that effectively protect patient privacy while allowing for responsible innovation in the field of AI in therapy.

H2: Conclusion

The use of AI in therapy presents significant opportunities to improve mental healthcare access and effectiveness, but it also raises serious concerns about patient privacy, data security, algorithmic bias, and the potential for state surveillance. Addressing these concerns requires a multi-faceted approach, including strong data protection regulations, transparent and accountable AI development practices, and robust mechanisms to prevent government overreach. The potential benefits of AI in therapy should not come at the cost of individual liberties and fundamental human rights.

Realizing the potential of AI in therapy requires a firm commitment to protecting patient privacy and preventing state surveillance. We must demand transparency, accountability, and strong legal safeguards to ensure that AI in therapy serves humanity rather than jeopardizing it. Let's continue the conversation and advocate for responsible innovation in AI in therapy. The future of mental healthcare depends on it.

Featured Posts

-

Paddy Pimblett Questions Poiriers Ufc Retirement Should He Stay Or Go

May 16, 2025

Paddy Pimblett Questions Poiriers Ufc Retirement Should He Stay Or Go

May 16, 2025 -

Greenlands Ice Uncovering The U S Military Bases History

May 16, 2025

Greenlands Ice Uncovering The U S Military Bases History

May 16, 2025 -

Hyeseong Kim James Outman And Matt Sauer Top Dodgers Prospects To Watch

May 16, 2025

Hyeseong Kim James Outman And Matt Sauer Top Dodgers Prospects To Watch

May 16, 2025 -

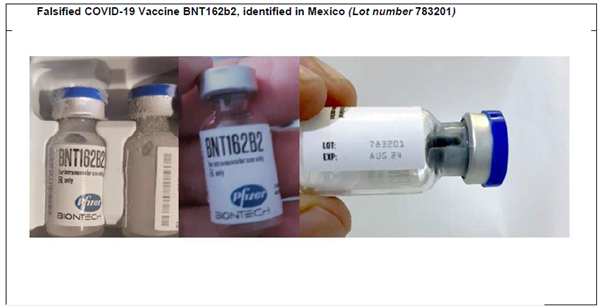

Pandemic Fraud Lab Owner Convicted Of Falsified Covid Test Results

May 16, 2025

Pandemic Fraud Lab Owner Convicted Of Falsified Covid Test Results

May 16, 2025 -

Unexpected Drop In Pbocs Yuan Support

May 16, 2025

Unexpected Drop In Pbocs Yuan Support

May 16, 2025