AI Therapy: A Necessary Evil Or A Tool For State Surveillance?

The Promise of AI Therapy

AI therapy has transformative potential for mental healthcare, particularly in three areas: accessibility and affordability, personalized treatment, and early intervention.

Accessibility and Affordability

AI therapy can significantly expand access to mental healthcare, particularly in underserved areas. Traditional therapy often faces barriers like high costs, geographical limitations, and long wait times. AI-powered solutions offer a compelling alternative.

- Reduced costs: AI-driven platforms can significantly lower the cost of therapy, making it accessible to a wider population. This is particularly crucial for individuals with limited financial resources.

- 24/7 availability: Unlike human therapists, AI-powered systems are available anytime and anywhere, offering immediate support when it is needed. Delivered through teletherapy platforms, which provide mental health services remotely, this support can reach people who cannot easily get to a clinic.

- Cost-effective personalization: AI algorithms can analyze individual needs and preferences to tailor treatment plans, which can make effective treatment cheaper to deliver.

Personalized Treatment

AI algorithms are capable of analyzing vast datasets to identify patterns and trends related to mental health conditions. This data-driven approach allows for personalized treatment plans that adapt to individual needs and responses.

- Data-driven insights: AI can analyze patient data, such as mood tracking, sleep patterns, and therapy session notes, to provide personalized insights and recommendations. The same analysis can support AI-assisted diagnostics that may be faster, and in some cases more accurate, than manual assessment alone.

- Customized interventions: AI-powered systems can offer interventions tailored to specific needs, including cognitive behavioral therapy (CBT) modules, mindfulness exercises, and relaxation techniques. This is a step toward adaptive treatment that evolves with the individual.

- Adaptive learning: AI algorithms can continuously learn and adapt based on patient responses, refining treatment plans for optimal efficacy.

Early Intervention and Prevention

AI's ability to analyze data allows for the early detection of mental health issues before they escalate into more serious conditions. This proactive approach can significantly improve outcomes and reduce the need for intensive interventions later on.

- Early warning sign detection: AI can identify patterns and indicators that suggest potential mental health issues, even before they become clinically apparent. This makes non-invasive mental health screening possible.

- Proactive interventions: Early identification allows for prompt interventions, such as targeted therapeutic exercises or connecting individuals with appropriate support resources.

- Reduced hospitalization rates: By strengthening preventive care, early intervention through AI-powered tools could reduce hospitalizations and improve overall mental health outcomes.

The Dark Side: Concerns about Surveillance and Data Privacy

While AI therapy offers significant potential, there are serious ethical and practical concerns related to data security, algorithmic bias, and the potential for state surveillance.

Data Security and Breaches

Storing sensitive mental health data in AI systems presents substantial risks. Data breaches can have devastating consequences, leading to the exposure of highly personal and confidential information.

- Data encryption: Robust data encryption and security protocols are crucial to protect patient data from unauthorized access.

- Cybersecurity threats: AI systems are vulnerable to cyberattacks, so stringent cybersecurity measures are needed to prevent breaches. Compliance with regulations such as HIPAA (in the US) and the GDPR (in the EU) is essential.

- Patient confidentiality: Maintaining patient confidentiality is paramount, and strong legal and ethical frameworks are needed to ensure the responsible use of data.

Algorithmic Bias and Discrimination

AI algorithms learn from training data. If that data reflects existing societal biases, the resulting models can perpetuate and even amplify those biases, leading to unfair or discriminatory outcomes in therapy.

- Algorithmic fairness: Ensuring algorithmic fairness requires careful consideration of data representation and bias mitigation techniques.

- Representation in datasets: Datasets used to train AI algorithms must be representative of diverse populations to avoid perpetuating existing biases.

- Potential for bias amplification: AI systems can unintentionally amplify existing biases, leading to discriminatory outcomes in access to and the provision of care. Algorithmic accountability is crucial to avoid this.

Potential for State Surveillance and Abuse

The potential for governments or other entities to misuse AI therapy data for surveillance or control is a significant ethical concern.

- Data collection without consent: The collection of mental health data without informed consent is a violation of fundamental privacy rights.

- Profiling and targeting: AI systems could be used to profile individuals and target them based on their mental health status, potentially leading to discrimination or oppression. This raises serious questions about responsible AI development.

- Erosion of privacy rights: The widespread use of AI therapy without appropriate safeguards could lead to a significant erosion of privacy rights.

Conclusion

AI therapy holds immense promise for revolutionizing mental healthcare, offering increased accessibility, personalized treatment, and opportunities for early intervention. However, the potential for data breaches, algorithmic bias, and state surveillance necessitates careful consideration and robust ethical guidelines. The future of AI therapy hinges on our ability to navigate its ethical complexities. Let's ensure that this powerful technology serves humanity, not as a tool for oppression, but as a vital resource for accessible and effective mental healthcare. Join the conversation about responsible AI therapy and help shape its future!

Victoria De Portugal Ante Belgica 0 1 Repaso Al Partido

Victoria De Portugal Ante Belgica 0 1 Repaso Al Partido

Anthony Edwards And The Baby Mama Drama A Twitter Firestorm

Anthony Edwards And The Baby Mama Drama A Twitter Firestorm

First Up Bangladesh Yunus In China Rubios Caribbean Trip And More Top News Today

First Up Bangladesh Yunus In China Rubios Caribbean Trip And More Top News Today

A 48 Year Mystery Star Wars Hints At A Long Teasd Planet

A 48 Year Mystery Star Wars Hints At A Long Teasd Planet

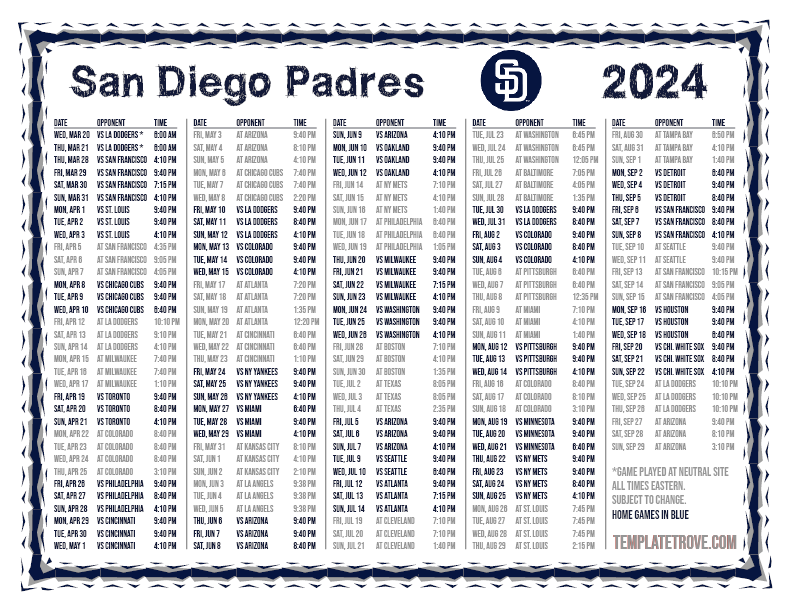

Padres 2025 Season A Path To Wrigley Field

Padres 2025 Season A Path To Wrigley Field