AI's Limitations: Why It Doesn't Learn And How To Use It Responsibly


AI's Lack of True Understanding and Generalization

AI, despite its impressive capabilities, learns in a fundamentally different way from humans. Current systems extract statistical patterns from their training data rather than building a model of how the world works. As a result, they lack the nuanced understanding and common-sense reasoning that humans possess. This limitation stems from two key factors: an inability to truly grasp causal relationships and a limited capacity for generalization.

The Difference Between Correlation and Causation

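AI excels at identifying correlations within data, recognizing patterns and associations between different variables. However, it often struggles to discern the causal relationships behind them. The distinction matters because a pattern learned from correlation alone can break down, or mislead, as soon as the conditions that produced it change.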

- Example: An AI model might find a strong correlation between ice cream sales and crime rates, and might even predict a rise in crime from rising ice cream sales. What it would miss is the confounding factor behind both: they tend to increase in summer, when warmer weather draws more people outdoors. The model has captured a correlation, not a causal link.

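- The limitations of statistical methods often underpin this issue. Statistical analysis is powerful for identifying patterns, but applied to observational data alone it cannot establish causality. That requires controlled experiments or explicit assumptions about how the variables are connected.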

- AI lacks the real-world context and common-sense reasoning necessary to interpret correlations accurately and infer causality.

Limited Generalization Capabilities

AI models are trained on specific datasets. While they can perform exceptionally well on data that resembles their training data, they often struggle to generalize to new, unseen data that differs from it, even when that data is closely related. This mismatch between training and real-world data is often called distribution shift.

- Data diversity is crucial for building AI models capable of generalization. Training on a diverse and representative dataset significantly improves a model's ability to handle various scenarios.

- Examples of AI systems failing to generalize to new scenarios are common. A self-driving car trained only on sunny California roads, for instance, might perform poorly in snowy conditions.

- Robust testing and validation across diverse datasets are essential to mitigate this limitation and ensure the reliable performance of AI systems.

The Data Dependency of AI

AI's capabilities are inextricably linked to the data it's trained on. This dependency creates significant challenges, including bias amplification and the "black box" problem.

Bias in Training Data

If training data reflects existing societal biases, the resulting AI system will likely perpetuate and even amplify those biases. This can lead to unfair or discriminatory outcomes.

- Examples: Facial recognition systems exhibiting bias against people of color, loan application algorithms displaying gender bias, and AI-powered hiring tools discriminating against certain demographic groups.

- Techniques like data augmentation, re-weighting, and adversarial training can help mitigate bias in training data.

- The use of diverse and representative datasets is crucial to minimize bias and ensure fairness in AI systems.
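Re-weighting can be sketched in a few lines. The version below uses one common scheme, often called reweighing. Each training example gets the weight P(group) * P(label) / P(group, label), so that in the weighted data the label no longer depends on group membership. The groups, labels, and counts are hypothetical. A real pipeline would pass these weights to a learner that accepts per-example weights.

```python
from collections import Counter


def reweigh(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    Group/label combinations that are rarer than independence would predict
    (e.g. positive labels in a disadvantaged group) get weights above 1.
    """
    n = len(labels)
    p_group = {g: c / n for g, c in Counter(groups).items()}
    p_label = {y: c / n for y, c in Counter(labels).items()}
    p_joint = {k: c / n for k, c in Counter(zip(groups, labels)).items()}
    return [p_group[g] * p_label[y] / p_joint[(g, y)] for g, y in zip(groups, labels)]


def positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical training set: group "A" has far more positive labels than group "B"
groups = ["A"] * 8 + ["B"] * 8
labels = [1] * 6 + [0] * 2 + [1] * 2 + [0] * 6
unweighted = [1.0] * len(labels)
weights = reweigh(groups, labels)

for g in ("A", "B"):
    before = positive_rate(groups, labels, unweighted, g)
    after = positive_rate(groups, labels, weights, g)
    print(f"group {g}: positive rate {before:.2f} -> {after:.2f}")
```

After weighting, both groups sit at the overall positive rate of 0.5 instead of 0.75 and 0.25. Re-weighting only corrects the imbalance it is told about. It does nothing for bias in the labels themselves or for attributes that were never recorded.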

The "Black Box" Problem

Many sophisticated AI models, particularly deep learning models, function as "black boxes." Their decision-making processes are opaque and difficult to understand, making it challenging to identify and correct errors.

- Interpreting the inner workings of deep learning models is a significant challenge for researchers and developers.

- Explainable AI (XAI) techniques aim to address this issue by making AI models more transparent and interpretable.

- The ethical implications of opaque AI systems are substantial, raising concerns about accountability and trust.
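One family of XAI techniques treats the model strictly as a black box: change an input and watch the output. The sketch below explains a single prediction by resetting each feature to a baseline value (for example, a typical applicant) and recording how much the score moves. This is a simple perturbation-based local explanation. The scoring function, feature names, and baseline are all hypothetical. The explainer only ever calls the model and never inspects its internals.

```python
import math

FEATURES = ("income", "debt", "age")


def black_box(x):
    """Hypothetical opaque scorer; the explainer below only calls it."""
    income, debt, age = x
    z = 0.04 * income - 0.15 * debt + 0.0 * age - 1.0  # this toy model ignores age
    return 1 / (1 + math.exp(-z))


def explain_locally(model, x, baseline):
    """Score drop when each feature is replaced by its baseline value.

    Positive: the actual value pushed the score up relative to the baseline.
    Zero: changing that feature had no effect on this prediction.
    """
    original = model(x)
    attributions = {}
    for i, name in enumerate(FEATURES):
        perturbed = list(x)
        perturbed[i] = baseline[i]
        attributions[name] = original - model(perturbed)
    return original, attributions


applicant = (80, 10, 45)  # hypothetical input to explain
typical = (50, 20, 40)    # hypothetical baseline, e.g. training-set averages
score, attributions = explain_locally(black_box, applicant, typical)
print(f"score: {score:.3f}")
for name, delta in attributions.items():
    print(f"{name:>6}: {delta:+.3f}")
```

Explanations like this are only as good as the chosen baseline. A different reference point can change the attributions, and resetting one feature at a time ignores interactions between features.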

Responsible AI Deployment: Mitigating AI's Limitations

To harness the potential of AI while minimizing its risks, a responsible approach is crucial. This involves acknowledging AI's limitations and implementing strategies to mitigate potential harm.

Human Oversight and Intervention

Human involvement is essential throughout the AI lifecycle, from data collection and model training to deployment and ongoing monitoring.

- Human-in-the-loop systems, where humans are involved in the decision-making process, can help to mitigate the risks of biased or erroneous AI outputs.

- Continuous monitoring and evaluation of AI systems are necessary to identify and address potential problems.

- Clear guidelines, accountability frameworks, and ethical considerations must be integrated into the development and deployment of AI systems.
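A common human-in-the-loop pattern is confidence-based routing. The system acts on its own only when the model is confident and hands every other case to a person. The sketch below is a minimal, hypothetical version; the class, the 0.9 threshold, and the case IDs are illustrative and not taken from any particular framework. It also tracks the deferral rate, one simple signal for continuous monitoring. If the share of low-confidence cases climbs, the incoming data may have drifted away from the training data.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewRouter:
    """Hypothetical human-in-the-loop gate for model predictions."""
    threshold: float = 0.9  # assumed confidence cutoff; set per application and risk
    decisions: dict = field(default_factory=dict)     # case_id -> (label, decided_by)
    review_queue: list = field(default_factory=list)  # cases awaiting a human
    submitted: int = 0
    deferred: int = 0

    def submit(self, case_id, model_label, confidence):
        """Accept confident predictions; send the rest to a human reviewer."""
        self.submitted += 1
        if confidence >= self.threshold:
            self.decisions[case_id] = (model_label, "model")
        else:
            self.deferred += 1
            self.review_queue.append((case_id, model_label, confidence))

    def record_review(self, case_id, human_label):
        """A reviewer's decision is final and removes the case from the queue."""
        self.review_queue = [c for c in self.review_queue if c[0] != case_id]
        self.decisions[case_id] = (human_label, "human")

    def deferral_rate(self):
        """Share of cases sent to humans; a sudden rise is worth investigating."""
        return self.deferred / self.submitted if self.submitted else 0.0


router = ReviewRouter(threshold=0.9)
router.submit("case-1", "approve", 0.97)
router.submit("case-2", "deny", 0.62)      # low confidence: goes to a person
router.submit("case-3", "approve", 0.93)
router.record_review("case-2", "approve")  # reviewer overrides the model
print(router.decisions)
print(f"deferral rate: {router.deferral_rate():.2f}")
```

Routing on confidence assumes the model's scores are reasonably well calibrated. An overconfident model will wave errors through, so the threshold itself needs periodic review.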

Transparency and Explainability

Developing and deploying more transparent and explainable AI models is paramount to building trust and ensuring accountability.

- Techniques like feature importance analysis, rule extraction, and visualization can enhance model interpretability.

- Regulatory frameworks for responsible AI development are emerging, aiming to establish guidelines and standards for ethical AI practices.

- Ethical considerations must be central to AI design and implementation, ensuring fairness, accountability, and transparency.
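Feature importance analysis can be done without opening the model. Permutation importance, one widely used variant, shuffles one feature at a time across a dataset and measures how much accuracy drops. Features the model relies on cause large drops, and ignored features cause none. The sketch below applies it to a hypothetical rule-based "model" and randomly generated data, so the expected outcome is known in advance.

```python
import random

FEATURES = ("income", "debt", "zip_digit")


def model(row):
    """Hypothetical black-box classifier; note that it never uses zip_digit."""
    income, debt, _zip_digit = row
    return 1 if income - 2 * debt > 10 else 0


def accuracy(rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(rows, labels, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = {}
    for j, name in enumerate(FEATURES):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between this feature and the label
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled, labels))
        importances[name] = sum(drops) / n_repeats
    return importances


data_rng = random.Random(42)
rows = [(data_rng.uniform(0, 100), data_rng.uniform(0, 30), data_rng.randint(0, 9))
        for _ in range(500)]
labels = [model(r) for r in rows]  # labels taken from the model itself, for illustration
importances = permutation_importance(rows, labels)
for name, drop in importances.items():
    print(f"{name:>9}: {drop:.3f}")
```

Here the labels come from the model itself, so baseline accuracy is 1.0 by construction. With real held-out labels the procedure is the same. Permutation importance can understate features that are strongly correlated with each other, because the model can lean on the unshuffled partner.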

Conclusion

AI's limitations stem from its reliance on data and algorithms, its lack of true understanding and common sense, and its limited ability to generalize beyond its training data. Responsible AI development means acknowledging these limitations and proactively putting safeguards in place: diverse and representative data, testing beyond the training distribution, human oversight, and explainable models. By understanding where AI falls short and taking part in building it responsibly, we can harness its potential while reducing its risks and working toward a more equitable and beneficial future. Let's continue the conversation about building a future where AI serves humanity responsibly.

Featured Posts

-

Receta De Lasana De Calabacin La Version Facil De Pablo Ojeda En Mas Vale Tarde

May 31, 2025

Receta De Lasana De Calabacin La Version Facil De Pablo Ojeda En Mas Vale Tarde

May 31, 2025 -

Neuer Anstieg Entwicklung Des Bodensee Wasserstands Im Detail

May 31, 2025

Neuer Anstieg Entwicklung Des Bodensee Wasserstands Im Detail

May 31, 2025 -

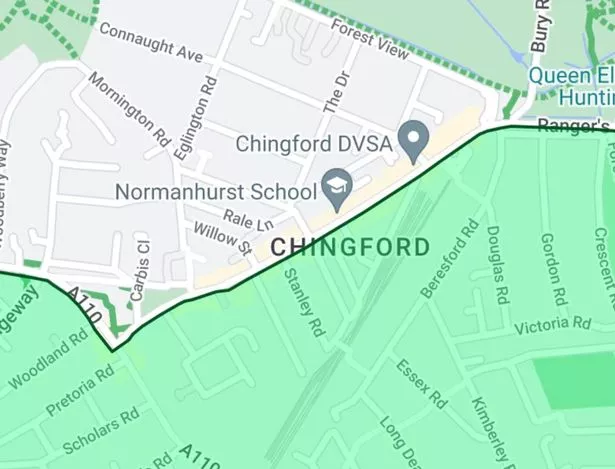

Large Scale Fire On East London High Street Requires 100 Firefighters

May 31, 2025

Large Scale Fire On East London High Street Requires 100 Firefighters

May 31, 2025 -

Parker Meadows Return Tigers Notebook Update

May 31, 2025

Parker Meadows Return Tigers Notebook Update

May 31, 2025 -

Unraveling The Mystery Donald Trump And His Overweight Friend

May 31, 2025

Unraveling The Mystery Donald Trump And His Overweight Friend

May 31, 2025