ChatGPT And OpenAI Face FTC Investigation: Privacy And AI Concerns

The FTC Investigation: What We Know So Far

The FTC's investigation into OpenAI and ChatGPT is a significant development in the ongoing debate surrounding AI ethics and data privacy. While the specifics are still unfolding, the investigation's scope is broad, focusing on potential violations of various consumer protection laws. The FTC is reportedly scrutinizing OpenAI's practices related to data security, algorithmic bias, and the potential harms caused by the AI model.

The alleged issues under scrutiny include:

- Data security breaches and vulnerabilities in ChatGPT: Reports suggest potential vulnerabilities in ChatGPT's security infrastructure, raising concerns about unauthorized access to user data. This includes the potential leakage of sensitive personal information.

- Insufficient safeguards to protect children's data: The FTC is likely investigating whether OpenAI has implemented adequate measures to protect the data of children, a particularly vulnerable group, who use ChatGPT. The collection and use of children's data are subject to stricter rules under laws such as COPPA (Children's Online Privacy Protection Act).

- Potential violations of consumer protection laws: The FTC is examining whether OpenAI's data practices comply with various consumer protection laws, including those related to transparency, consent, and data security. Failure to meet these standards could result in significant penalties.

- Concerns surrounding biased and harmful outputs from the AI model: ChatGPT, like many large language models (LLMs), has been shown to generate biased or harmful content. The FTC is likely investigating whether OpenAI has taken sufficient steps to mitigate these risks and protect users from potential harm.

The potential penalties OpenAI faces are substantial. They could range from hefty fines to mandatory changes in its data handling practices and, possibly, restrictions on its AI development activities.

Privacy Concerns Surrounding ChatGPT and Similar AI Models

Large language models (LLMs) like ChatGPT inherently present significant privacy risks. Their ability to process and generate human-like text relies on vast amounts of data, raising concerns about how this data is collected, used, and protected.

Key privacy issues surrounding ChatGPT and similar AI models include:

- Data collection practices and the type of user data collected: ChatGPT collects a significant amount of user data, including conversational data, personal information shared during interactions, and potentially metadata associated with usage patterns.

- Data usage and sharing with third parties: The investigation is expected to scrutinize how OpenAI uses the data it collects and whether that data is shared with third-party companies in ways that could violate user privacy and trust.

- The potential for data breaches and misuse of personal information: The sheer volume of data processed by ChatGPT makes it a prime target for cyberattacks. A data breach could expose sensitive personal information to malicious actors.

- Lack of transparency regarding data handling procedures: Concerns exist regarding the lack of transparency in OpenAI's data handling procedures. Users may not fully understand how their data is being collected, used, and protected.

- The use of user data for training the AI model and its implications: Training LLMs like ChatGPT involves feeding the model massive datasets, which can include user-generated content. This raises ethical questions about whether users have meaningfully consented and about the potential for their data to be used in unforeseen ways.

The Role of User Data in Training AI Models

The training of AI models like ChatGPT relies heavily on vast datasets of text and code. This data is often sourced from publicly available sources, but it can also include user-generated content from platforms like ChatGPT itself. This raises ethical concerns about data ownership, consent, and the potential for data bias to be amplified within the model.

"Data poisoning," where malicious actors intentionally introduce biased or harmful data into training datasets, is a significant concern. It can lead the AI model to generate biased, inaccurate, or even harmful outputs, so training data needs to be vetted and filtered before use. Separately, data anonymization and other privacy-preserving techniques are crucial for keeping personal information out of training data and for ensuring the ethical and responsible development of AI models.
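As a rough illustration of the anonymization step discussed above, the sketch below redacts two common kinds of personal information (email addresses and phone numbers) from conversational text before it would be stored or used for training. The function name `redact_pii` and both regular expressions are assumptions made for this example. They are not OpenAI's method, and simple pattern matching like this misses many forms of personal data (names, addresses, account numbers).

```python
import re

# Hypothetical patterns for illustration only; real pipelines need far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    sample = "Contact me at jane.doe@example.com or +1 (555) 123-4567."
    print(redact_pii(sample))
```

Redacting before storage, rather than only before training, limits what a later breach or misuse could expose, though it does not replace consent and retention controls.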

The Broader Implications for the AI Industry

The FTC's investigation into OpenAI will likely have far-reaching implications for the entire AI industry. It could set a precedent for how regulators scrutinize powerful AI technologies, and it underscores the need for greater transparency and accountability.

Potential consequences for the AI industry include:

- Increased scrutiny of AI development practices: We can expect increased regulatory oversight and audits of AI companies' data handling practices and ethical considerations in AI development.

- Slowdown in AI innovation due to increased regulation: More stringent regulation might temporarily slow the pace of AI innovation, especially in areas involving sensitive data. However, responsible regulation is crucial for building user trust and ensuring ethical development.

- Changes in data handling practices across the AI industry: Other AI companies will likely review and revise their data handling practices to minimize the risk of facing similar investigations.

- Increased focus on AI ethics and responsible AI development: This investigation highlights the importance of incorporating ethical considerations and privacy protection into every stage of AI development.

Conclusion

The FTC's investigation into ChatGPT and OpenAI underscores the critical need for transparency, accountability, and ethical consideration in AI development. It highlights significant privacy concerns surrounding ChatGPT and similar AI models, particularly the risks associated with data collection, usage, and security. The outcome is likely to shape the future of AI regulation and influence how AI companies approach data privacy and responsible AI development. Understanding the privacy implications of ChatGPT and similar AI tools is crucial for navigating this rapidly changing technological world, so it is worth following updates on the OpenAI/FTC case as it develops.

Featured Posts

-

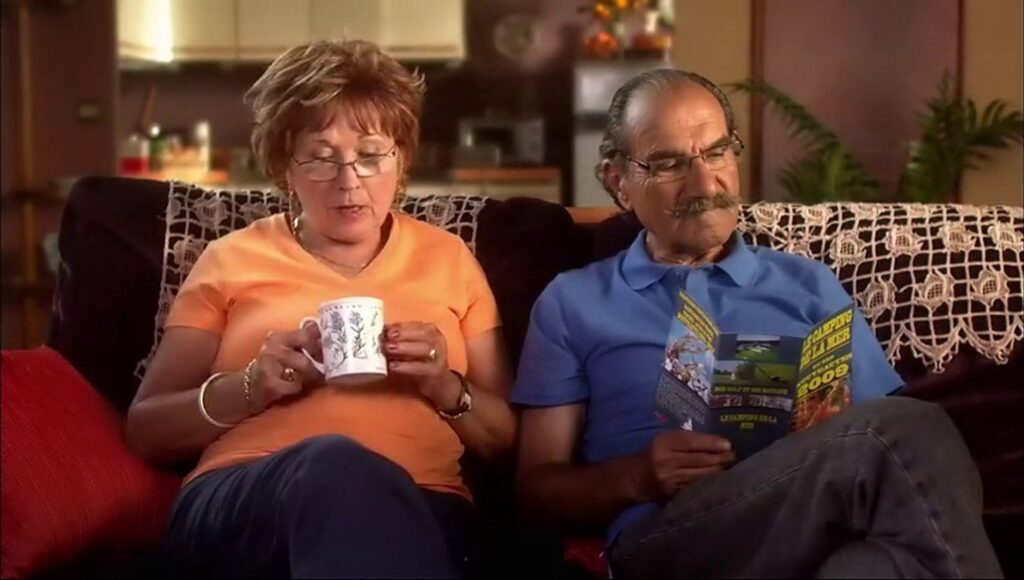

L Incroyable Complicite De Gerard Hernandez Et Chantal Ladesou Dans Scenes De Menages

May 11, 2025

L Incroyable Complicite De Gerard Hernandez Et Chantal Ladesou Dans Scenes De Menages

May 11, 2025 -

Avestruz Ataca A Boris Johnson En Texas La Reaccion Del Exprimer Ministro

May 11, 2025

Avestruz Ataca A Boris Johnson En Texas La Reaccion Del Exprimer Ministro

May 11, 2025 -

Massive Office365 Data Breach Results In Multi Million Dollar Loss

May 11, 2025

Massive Office365 Data Breach Results In Multi Million Dollar Loss

May 11, 2025 -

Asi Se Jugara El Campeonato Uruguayo De Segunda Division 2025

May 11, 2025

Asi Se Jugara El Campeonato Uruguayo De Segunda Division 2025

May 11, 2025 -

Belal Muhammad Vs Jack Della Maddalena To Headline Ufc 315 Complete Main Card Breakdown

May 11, 2025

Belal Muhammad Vs Jack Della Maddalena To Headline Ufc 315 Complete Main Card Breakdown

May 11, 2025