Controversy Surrounds MIT Student's AI Research Paper

The Core Claims of the MIT Student's Research

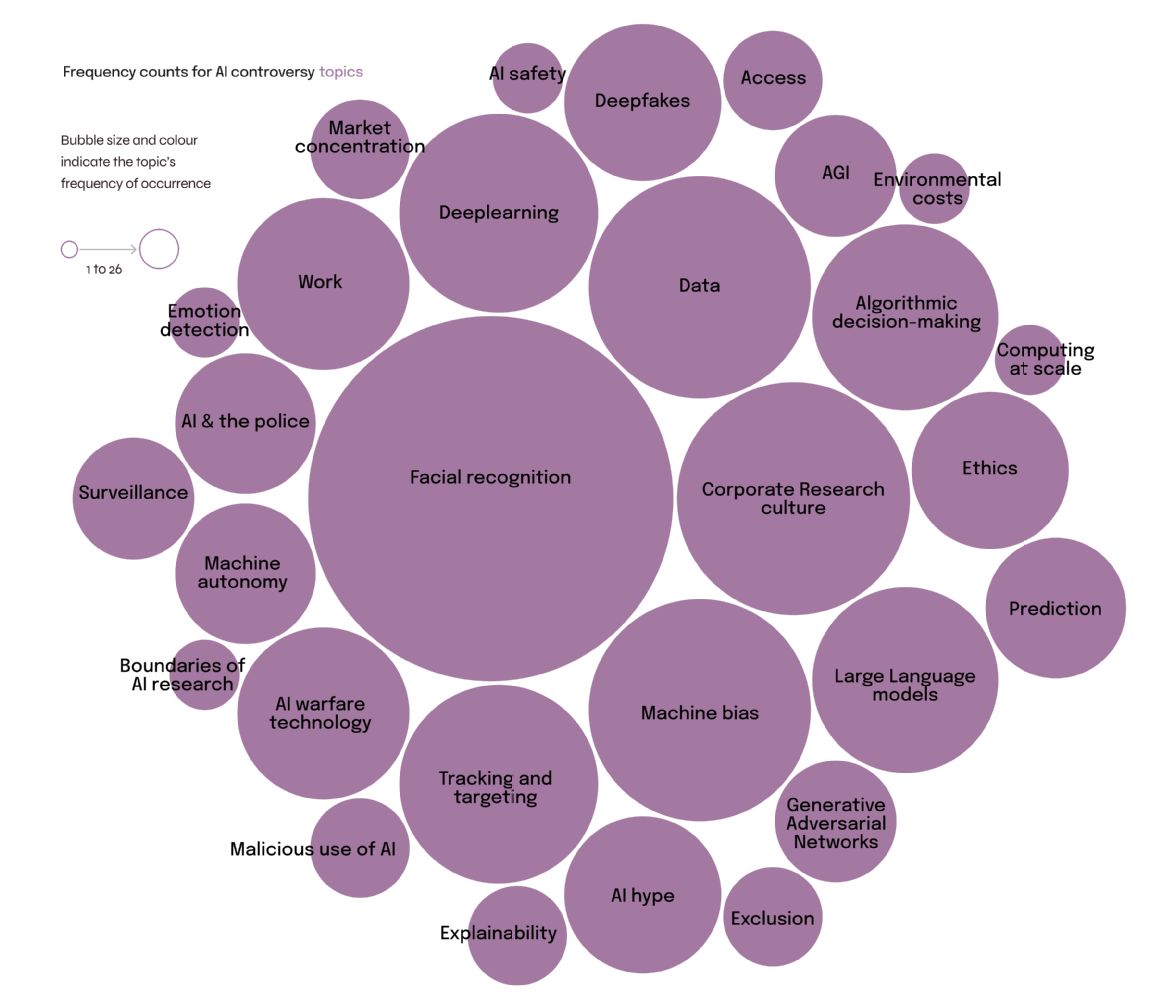

The MIT student's research, titled "Algorithmic Bias in Facial Recognition: A Case Study," presented compelling evidence of systematic bias within commonly used facial recognition algorithms. The study focused on the accuracy rates of these algorithms across different demographic groups, particularly concerning race and gender. The core methodology involved testing several commercially available algorithms against a large dataset of facial images representing diverse populations.

- Racial bias: The study reported significantly higher error rates for individuals with darker skin tones than for lighter-skinned individuals, pointing to racial bias embedded in the algorithms. This disparity in accuracy directly affects the fairness and reliability of these technologies in real-world applications.

- Gender bias: The research also found that the algorithms identified women less accurately than men, regardless of skin tone. This further underscores the need for more comprehensive and equitable AI development practices.

- Methodology: The researcher compared the performance of different algorithms across a diverse range of demographic groups, using a curated dataset intended to mitigate the biases present in publicly available datasets. Statistical analysis was then used to quantify the observed disparities in accuracy.

- Novel techniques: The study introduced a new approach to visualizing and quantifying algorithmic bias, giving a clearer picture of its impact on different demographic groups. The visualization helped communicate the findings to both technical and non-technical audiences.
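The kind of per-group comparison described above can be illustrated with a minimal sketch. This is not the study's actual code: the group labels, the counts, and the function names are hypothetical, and the pooled two-proportion z statistic is just one common way to quantify a gap between two error rates.

```python
from collections import defaultdict
from math import sqrt


def error_rates(records):
    """Return {group: error_rate} from (group, is_correct) pairs."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        if not is_correct:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def two_proportion_z(err_a, n_a, err_b, n_b):
    """Pooled z statistic for the difference between two error proportions."""
    p_a, p_b = err_a / n_a, err_b / n_b
    pooled = (err_a + err_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; the groups show no variation")
    return (p_a - p_b) / se


if __name__ == "__main__":
    # Made-up toy data: 100 test images per group (hypothetical labels).
    records = (
        [("group_a", True)] * 95 + [("group_a", False)] * 5
        + [("group_b", True)] * 80 + [("group_b", False)] * 20
    )
    rates = error_rates(records)
    print(rates)
    # Compare group_b's errors (20 of 100) with group_a's (5 of 100).
    print(two_proportion_z(20, 100, 5, 100))
```

A large absolute z value suggests the gap is unlikely to be sampling noise alone, but it says nothing about whether the test set itself is representative.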

The Nature of the Controversy: Ethical Concerns and Criticisms

The publication of this research immediately sparked a heated debate, primarily due to its implications for the ethical use of AI and the potential for perpetuating societal inequalities.

Ethical Implications:

- High-stakes applications: The documented bias in facial recognition algorithms raises serious ethical concerns about their use in law enforcement, border control, and other high-stakes settings. False positives and inaccurate identifications disproportionately affect marginalized communities, potentially leading to wrongful arrests, deportations, or other forms of discrimination.

- Societal inequality: Widespread deployment of biased algorithms could exacerbate existing inequalities by reinforcing prejudiced stereotypes and limiting opportunities for people from certain demographic groups.

- Potential misuse: The findings could be exploited to justify discriminatory practices or to build more sophisticated tools for surveillance and profiling.

Methodological Critique:

- Dataset representativeness: Some critics have questioned whether the dataset used in the study accurately reflects the diversity of the global population. This critique highlights how difficult it is to construct unbiased datasets for AI research.

- Data collection and analysis: Concerns have been raised about potential biases in the data collection process and in the statistical methods used to analyze the results. Some argue that the study may have overlooked other factors contributing to the observed disparities.

- Study design and interpretation: Some researchers have pointed to possible flaws in the study's design that could lead it to overestimate the observed bias. Further research and independent verification are needed to validate the findings.

Responses from the AI Community and MIT

The AI community's response to the paper has been mixed, with some researchers praising its contribution to the ongoing debate about AI ethics while others remain critical of its methodology. Several prominent AI researchers have called for greater transparency and accountability in the development and deployment of facial recognition technologies.

- Other researchers: Many researchers have echoed the concerns raised in the study, calling for stricter regulations and guidelines for the use of AI systems in sensitive applications. Some are actively working on less biased algorithms.

- MIT's position: MIT has yet to release an official statement on the controversy. The university has a strong commitment to ethical AI research, however, and is likely to address the concerns raised by the study in due course.

- Public opinion and media coverage: The controversy has generated significant media attention, raising public awareness of the potential risks of biased AI systems. This has contributed to growing public demand for transparency and accountability in the development and use of AI technologies.

The Broader Implications for the Future of AI Research

This controversy highlights the urgent need for a more ethical and responsible approach to AI research and development.

- Research practices and ethical guidelines: The debate could change research practices, placing greater emphasis on the ethical implications of AI systems and on developing more robust and equitable algorithms. It may also lead to new ethical guidelines for AI research.

- Funding and future AI projects: The controversy might influence funding decisions, favoring projects with a strong focus on fairness, transparency, and ethics. Funding bodies could also introduce new requirements for ethical review.

- Public trust in AI technology: The controversy could erode public trust in AI, particularly in sensitive applications. Restoring that trust requires transparency, accountability, and a commitment to developing and deploying AI systems that are both effective and ethical.

Conclusion

The controversy surrounding this MIT student's AI research paper underscores the need for ongoing dialogue about ethics in artificial intelligence. The documented bias in facial recognition algorithms, together with the methodological critiques, shows why AI research needs both robust ethical frameworks and rigorous methodology. The case is a stark reminder that AI can perpetuate and amplify existing societal biases unless it is developed and deployed proactively and responsibly. Let’s continue the conversation about responsible AI research at MIT and the ethical implications of advances in the field.
