Did The BBC Create A Deepfake Agatha Christie?

Examining the Evidence: Visual and Audio Analysis of the Suspected Deepfake

The alleged deepfake centers on a short video clip circulating online, purportedly showing Agatha Christie discussing her writing process. Determining its authenticity requires a thorough forensic analysis of its visual and audio cues.

Visual Analysis:

- Facial Inconsistencies: Close examination reveals potential inconsistencies in facial expressions and micro-movements. The subtle twitches and blinks don't quite align with the known behavior of Agatha Christie in authentic footage.

- Unnatural Movements: The subject's head movements and overall body language seem slightly stiff and unnatural, a common giveaway in less sophisticated deepfakes.

- Comparison with Authentic Footage: A direct comparison with verified Agatha Christie videos reveals noticeable discrepancies in skin texture, lighting, and the overall quality of the image. This comparison is crucial for deepfake detection.
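One way to make the blink observation above measurable is to compare the gaps between blinks in the suspect clip with those in verified footage. The sketch below is illustrative only: it assumes blink timestamps (in seconds) have already been extracted by a separate tool, and the function names and sample numbers are hypothetical, not measurements taken from the actual clip.

```python
from statistics import mean, stdev


def blink_intervals(blink_times):
    """Return the gaps (in seconds) between consecutive blink timestamps."""
    return [later - earlier for earlier, later in zip(blink_times, blink_times[1:])]


def interval_z_score(suspect_times, reference_times):
    """Distance of the suspect's mean blink interval from the reference mean,
    measured in reference standard deviations."""
    ref = blink_intervals(reference_times)
    sus = blink_intervals(suspect_times)
    if len(ref) < 2 or not sus:
        raise ValueError("need at least 3 reference blinks and 2 suspect blinks")
    spread = stdev(ref)
    if spread == 0:
        raise ValueError("reference intervals show no variation")
    return (mean(sus) - mean(ref)) / spread


# Hypothetical timestamps, for illustration only.
reference_blinks = [0.0, 3.0, 7.0, 10.0, 14.0]
suspect_blinks = [0.0, 8.0, 16.0]
score = interval_z_score(suspect_blinks, reference_blinks)
print(f"z-score of suspect blink interval: {score:.2f}")
```

A large absolute score would only flag the clip for closer expert review. It is not proof of manipulation on its own, since frame rate and lighting can also affect how reliably blinks are detected.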

Audio Analysis:

- Voice Inconsistencies: The voice in the clip exhibits slight inconsistencies in tone and intonation compared to verified Agatha Christie audio recordings.

- Voice Cloning Technology: Advanced voice cloning technology is capable of producing remarkably realistic imitations. Detecting such manipulation requires specialized tools and expertise in audio forensic analysis. The slight inconsistencies, however, may indicate less sophisticated technology was used.

- Comparison with Authentic Recordings: Analyzing the audio spectrum and comparing it with known Agatha Christie recordings can reveal subtle differences in pitch, timbre, and vocal characteristics.
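The pitch comparison described above can be sketched with a basic autocorrelation estimate. This is a minimal illustration under stated assumptions, not a forensic tool: the synthetic sine tones stand in for real recordings, the helper names and the 75 to 400 Hz search range are choices made for this example, and timbre and other vocal characteristics are not covered.

```python
import math


def sine_tone(freq_hz, sample_rate, seconds):
    """Synthetic test tone standing in for a real voice recording."""
    n = round(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def estimate_pitch_hz(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency by picking the autocorrelation peak
    within the lag range that corresponds to fmin..fmax."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    if len(samples) <= max_lag:
        raise ValueError("recording too short for the chosen fmin")
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation at this lag.
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


def relative_pitch_gap(suspect_hz, reference_hz):
    """Pitch difference as a fraction of the reference pitch."""
    return abs(suspect_hz - reference_hz) / reference_hz


# Stand-in signals: a 200 Hz "reference" and a 220 Hz "suspect".
reference = estimate_pitch_hz(sine_tone(200.0, 8000, 0.1), 8000)
suspect = estimate_pitch_hz(sine_tone(220.0, 8000, 0.1), 8000)
print(f"reference {reference:.1f} Hz, suspect {suspect:.1f} Hz, "
      f"gap {relative_pitch_gap(suspect, reference):.1%}")
```

Real speech varies in pitch from moment to moment, so in practice an analyst would compare distributions over many short frames rather than a single number per recording.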

These visual and audio cues are essential to deepfake detection, which relies heavily on forensic analysis and expert comparison with genuine material. Because AI-driven manipulation can be highly convincing, a detailed analysis is crucial.

The BBC's Response and Official Statement on the Allegations

At the time of writing, the BBC has yet to issue a formal public statement directly addressing the deepfake allegations. The absence of a clear, definitive response fuels speculation and uncertainty, and allows misinformation to keep spreading. It also raises questions about the BBC's internal investigation and its commitment to media transparency. A prompt, detailed statement that addresses the allegations head-on would be vital in dispelling doubt and maintaining public trust.

The Technology Behind Potential Deepfake Creation: How Realistic is it?

Creating a realistic deepfake requires sophisticated AI tools and techniques. The process typically involves:

- Data Acquisition: Gathering a substantial amount of high-quality video and audio footage of Agatha Christie.

- Facial Reconstruction: Utilizing machine learning algorithms to map and recreate Christie's facial features in 3D.

- AI Training: Training deep learning models on this data to generate realistic video and audio outputs.

The feasibility of creating a convincing deepfake of Agatha Christie depends on the availability of suitable source material: the more archival footage that exists, the more realistic the result can be. The current state of deepfake technology makes a high-quality deepfake possible, though imperfections may remain depending on the resources and expertise involved. Even subtle imperfections can be a telltale sign during forensic analysis.

The Implications of a Potential BBC Deepfake Agatha Christie

The implications of a potential BBC deepfake of Agatha Christie are far-reaching. The use of such technology to create fake media content raises serious ethical and legal concerns:

- Erosion of Public Trust: The deliberate creation and dissemination of a deepfake would severely damage the BBC's reputation and erode public trust in media outlets.

- Media Credibility Crisis: Such an act would contribute to a broader crisis of media credibility, making it harder to discern truth from fabrication.

- Legal Ramifications: Copyright infringement and potential defamation lawsuits could arise depending on the content and use of the deepfake. Misinformation spread through deepfakes can also have significant legal consequences.

- Disinformation and Misinformation: Deepfakes contribute to the spread of disinformation and misinformation, which can have serious consequences for political processes and social cohesion.

Debunking the Mystery – The Verdict on the BBC Agatha Christie Deepfake

Based on the available evidence, no definitive conclusion can yet be drawn about whether the BBC created a deepfake of Agatha Christie. While the circulating video exhibits certain characteristics consistent with deepfake technology, conclusive proof of BBC involvement is currently lacking, and the absence of a statement from the BBC further complicates the situation. The key takeaway is the increasing sophistication of deepfake technology and the importance of developing effective detection methods and media literacy.

Learn more about identifying deepfakes and protecting yourself from misinformation. Stay vigilant against deepfakes and other forms of media manipulation: the fight against misinformation requires critical thinking and a healthy skepticism toward online content.

Featured Posts

-

All Solutions For Nyt Crossword April 25 2025

May 20, 2025

All Solutions For Nyt Crossword April 25 2025

May 20, 2025 -

Solo Travel Is It Right For You A Practical Guide

May 20, 2025

Solo Travel Is It Right For You A Practical Guide

May 20, 2025 -

Aryna Sabalenkas Successful Madrid Open Debut

May 20, 2025

Aryna Sabalenkas Successful Madrid Open Debut

May 20, 2025 -

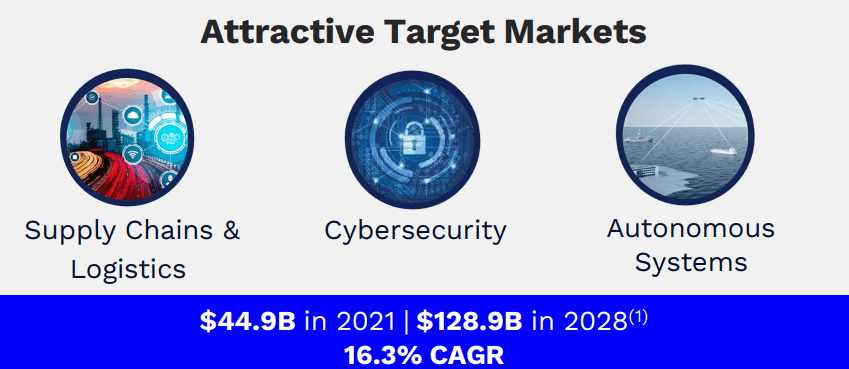

Gross Law Firm Representing Investors In Big Bear Ai Bbai Stock

May 20, 2025

Gross Law Firm Representing Investors In Big Bear Ai Bbai Stock

May 20, 2025 -

The Trump Era Ai Bill Short Term Gains Long Term Uncertainties For Ai Companies

May 20, 2025

The Trump Era Ai Bill Short Term Gains Long Term Uncertainties For Ai Companies

May 20, 2025