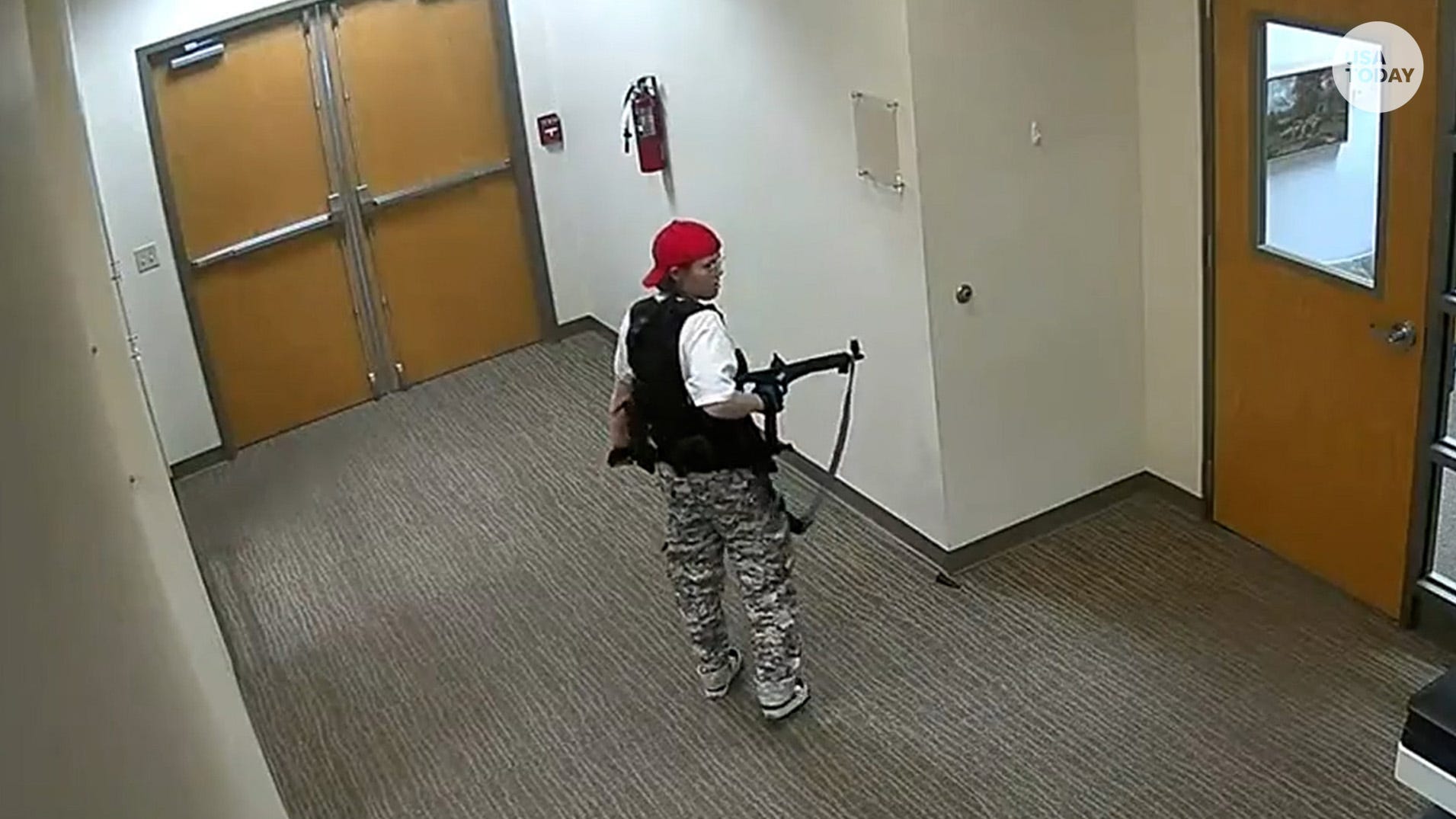

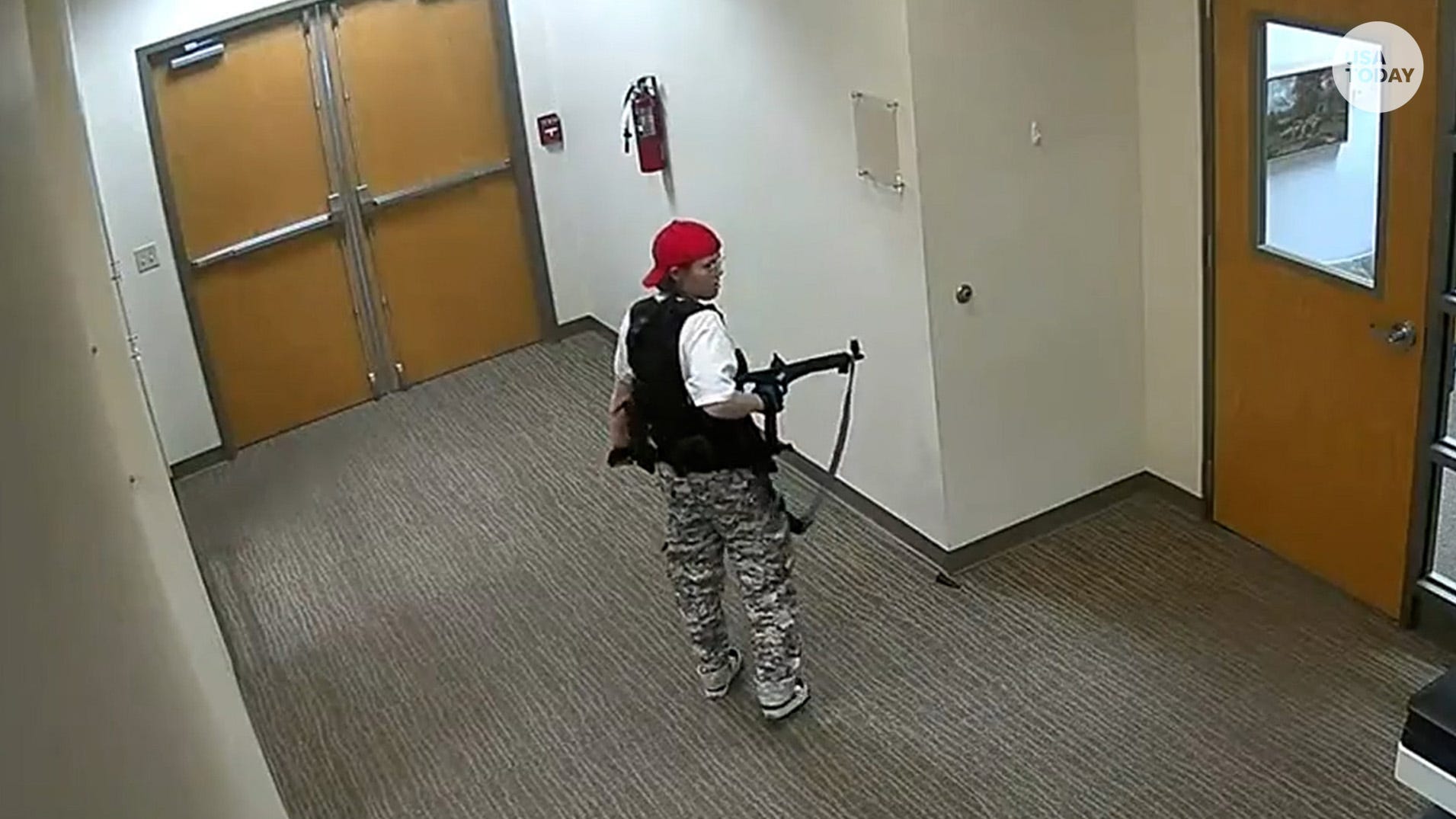

Do Algorithms Contribute To Mass Shooter Radicalization? Holding Tech Companies Accountable

Table of Contents

- The Role of Algorithmic Amplification in Radicalization

  - Echo Chambers and Filter Bubbles

  - Targeted Advertising and the Spread of Extremist Ideology

  - Online Communities and the Facilitating Role of Algorithms

- The Lack of Transparency and Accountability in Algorithm Design

  - The "Black Box" Problem

  - Regulatory Gaps and the Need for Oversight

  - The Responsibility of Tech Companies

- Strategies for Mitigation and Prevention

  - Improving Algorithm Design

  - Enhanced Content Moderation

  - Media Literacy and Critical Thinking

- Conclusion

The Role of Algorithmic Amplification in Radicalization

Algorithms, the invisible engines driving social media, play a potent role in shaping online experiences and can influence the radicalization of individuals.

Echo Chambers and Filter Bubbles

Algorithms personalize content feeds, creating "echo chambers" where users are primarily exposed to information confirming their existing beliefs. This reinforces pre-existing biases and can lead individuals down a rabbit hole of increasingly extreme viewpoints. The more time spent within these echo chambers, the greater the risk of radicalization.

- Examples of algorithms promoting extremist content: Studies have linked YouTube's recommendation system, for example, to the spread of extremist videos, with users led from relatively benign content to increasingly violent and hateful material.

- Impact of personalized recommendations on radicalization: Personalized recommendations, while seemingly innocuous, can subtly steer users towards extremist groups and ideologies, shaping their worldview without their conscious awareness.

- Studies demonstrating the correlation between algorithm use and extremist views: Research has reported a correlation between heavier social media use, particularly through algorithmically curated feeds, and the adoption of extremist views, although a correlation alone does not show that the feeds cause the shift.

Targeted Advertising and the Spread of Extremist Ideology

Targeted advertising, a cornerstone of the social media business model, presents another crucial vector for the spread of extremist propaganda. Algorithms identify vulnerable individuals based on their online behavior and deliver tailored ads promoting extremist ideologies, even if those individuals haven't actively searched for such content.

- Examples of extremist groups using targeted advertising: Extremist groups have successfully used targeted advertising to reach potential recruits, tailoring their messaging to resonate with specific demographics and psychological profiles.

- Difficulty in identifying and removing such ads: The sophisticated nature of targeted advertising makes it incredibly challenging for platforms to identify and remove extremist ads effectively, often leading to a cat-and-mouse game between platforms and those seeking to exploit them.

- Ethical responsibilities of tech companies regarding ad targeting: Tech companies bear a significant ethical responsibility to prevent their advertising platforms from being used to spread hateful and violent ideologies.

Online Communities and the Facilitating Role of Algorithms

Algorithms actively facilitate the creation and growth of online communities dedicated to extremist ideologies. These online spaces serve as breeding grounds for recruitment, planning, and the normalization of violence. Algorithms ensure that like-minded individuals find each other and reinforce each other's extremist beliefs.

- Examples of online forums and groups facilitating radicalization: Numerous online forums and groups provide havens where individuals share extremist views, recruit new members, and plan acts of violence. These spaces often operate with little or no moderation.

- Challenges of moderating these online spaces: The sheer volume of content and the constantly evolving tactics used by extremist groups make effective moderation extremely difficult.

- Responsibility of platform moderators and AI in detecting harmful content: Platforms need to invest significantly in both human moderators and advanced AI systems capable of identifying and removing harmful content proactively.

The Lack of Transparency and Accountability in Algorithm Design

The lack of transparency surrounding algorithm design significantly hinders efforts to understand and mitigate their contribution to radicalization.

The "Black Box" Problem

The opacity surrounding how algorithms function, often referred to as the "black box" problem, makes it challenging to determine precisely how they contribute to the spread of extremist views. This lack of transparency prevents effective scrutiny and accountability.

- Arguments for increased transparency in algorithm design: Increased transparency would allow researchers, policymakers, and the public to better understand the potential risks associated with algorithmic design and develop more effective mitigation strategies.

- Benefits of open-source algorithms: Open-source algorithms, while potentially posing security risks, could enhance transparency and allow for independent audits and analysis.

- Difficulties in balancing transparency with proprietary interests: Tech companies often resist transparency due to concerns about protecting their intellectual property and competitive advantage.

Regulatory Gaps and the Need for Oversight

Current regulations are insufficient to address the challenges posed by algorithmic radicalization. Stronger regulations are needed to hold tech companies accountable for the impact of their algorithms.

- Examples of existing regulations and their limitations: Existing laws often struggle to keep pace with the rapid evolution of technology and frequently lack the specificity needed to address the nuances of algorithmic bias and content moderation.

- Suggestions for improved legislation: Legislation should mandate greater transparency in algorithm design, establish clear responsibilities for content moderation, and provide mechanisms for holding platforms accountable for harmful content.

- Potential impact of government intervention on freedom of speech: Balancing the need for regulation with the protection of freedom of speech is a complex challenge that requires careful consideration.

The Responsibility of Tech Companies

Tech companies bear a significant moral and ethical responsibility to address the issue of algorithmic radicalization. They must proactively implement measures to prevent their platforms from being used to promote violence and extremism.

- Examples of company initiatives to combat extremism: While some companies have launched initiatives to combat extremism, these efforts are often insufficient and reactive rather than proactive.

- Suggestions for improved content moderation strategies: Improvements include more robust AI-powered content detection systems, increased human review of flagged content, and a stronger focus on preventing the spread of extremist narratives.

- Need for proactive measures to prevent radicalization: Tech companies should prioritize proactive measures to prevent radicalization, such as identifying and disrupting extremist networks before they can gain traction.

Strategies for Mitigation and Prevention

Addressing the issue of algorithmic radicalization requires a multi-faceted approach focusing on algorithm design, content moderation, and media literacy.

Improving Algorithm Design

Algorithms can be designed to minimize the risk of radicalization by promoting diverse viewpoints and reducing the amplification of extremist content.

- Examples of alternative algorithm designs: Alternative algorithm designs could prioritize content from diverse sources, reduce the weight given to engagement metrics that amplify extremist content, and incorporate techniques to detect and flag harmful material.

- Role of AI in content moderation: AI can play a crucial role in identifying and removing extremist content, but it must be carefully designed and deployed to avoid bias and unintended consequences.

- Importance of human oversight in algorithm development: Human oversight is essential to ensure that algorithms are developed and deployed responsibly and ethically.
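
The design ideas listed above (favoring diverse sources, down-weighting engagement, and excluding flagged material) can be illustrated with a small re-ranking sketch. It is only an illustration under stated assumptions, not how any real platform ranks content: the `Item` fields, the `rerank` function, and the `engagement_weight` and `source_penalty` values are all hypothetical, and the `flagged` field is assumed to come from some upstream detector.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """A candidate feed item. All fields are hypothetical, for illustration."""
    item_id: str
    source: str
    relevance: float        # 0..1, how well the item matches the user's interests
    engagement: float       # 0..1, normalized clicks/shares/comments
    flagged: bool = False   # assumed output of an upstream harmful-content detector


def rerank(items, k, engagement_weight=0.2, source_penalty=0.5):
    """Greedily pick up to k items, limiting engagement and repeated sources.

    - Flagged items are excluded before ranking.
    - Engagement contributes only `engagement_weight` of its value to the score.
    - Each time a source has already been picked, further items from that
      source have their score multiplied by `source_penalty`.
    """
    candidates = [item for item in items if not item.flagged]
    chosen = []
    picks_per_source = Counter()

    def adjusted_score(item):
        base = item.relevance + engagement_weight * item.engagement
        return base * (source_penalty ** picks_per_source[item.source])

    while candidates and len(chosen) < k:
        best = max(candidates, key=adjusted_score)
        chosen.append(best)
        picks_per_source[best.source] += 1
        candidates.remove(best)
    return chosen


if __name__ == "__main__":
    feed = [
        Item("a1", "A", relevance=0.9, engagement=0.9),
        Item("a2", "A", relevance=0.8, engagement=1.0),
        Item("b1", "B", relevance=0.7, engagement=0.1),
        Item("c1", "C", relevance=0.6, engagement=0.2),
        Item("x1", "A", relevance=1.0, engagement=1.0, flagged=True),
    ]
    for item in rerank(feed, k=3):
        print(item.item_id, item.source)
```

In this toy feed, the second item from source A loses its place to items from sources B and C once A has been shown, and the flagged item never appears. The weights are arbitrary; choosing them in practice would need the human oversight described above.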

Enhanced Content Moderation

Improving content moderation practices is crucial to remove extremist content effectively. This requires a combination of technological solutions and human oversight.

- Examples of effective content moderation techniques: Effective techniques include using AI to detect hate speech and violent content, employing human moderators to review flagged content, and establishing clear community guidelines.

- Challenges of identifying and removing harmful content: Identifying and removing harmful content is a constant challenge because of the sheer volume of content generated online, the evolving tactics used by extremist groups, and the risk that overly broad removal raises censorship concerns.

- Need for increased human review: While AI can play a valuable role, human review is essential to ensure accuracy and fairness in content moderation.
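
The combination of AI detection and human review described above can be sketched as a simple triage step. This is a minimal sketch under stated assumptions, not a description of any platform's system: the classifier score is assumed to be a value between 0 and 1 from some hypothetical detector, and the `Action` names, the `triage` function, and both thresholds are illustrative.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical moderation outcomes for a piece of content."""
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    REMOVE_PENDING_REVIEW = "remove_pending_review"


def triage(score, review_threshold=0.5, remove_threshold=0.95):
    """Route content based on an assumed classifier score in [0, 1].

    Scores at or above `remove_threshold` are taken down immediately but
    still queued for a human to confirm; scores at or above
    `review_threshold` stay up and go to a human moderator; everything
    else is allowed. Both thresholds are illustrative assumptions.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_threshold:
        return Action.REMOVE_PENDING_REVIEW
    if score >= review_threshold:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


if __name__ == "__main__":
    for score in (0.10, 0.70, 0.99):
        print(score, triage(score).value)
```

Keeping a human in the loop even for the highest scores reflects the point above that automated detection alone cannot ensure accuracy and fairness.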

Media Literacy and Critical Thinking

Empowering individuals with media literacy skills and critical thinking abilities is vital to help them identify and resist extremist propaganda online.

- Examples of media literacy programs: Media literacy programs can educate users about the tactics used by extremist groups to spread their messages and provide strategies for evaluating the credibility of online information.

- Strategies for teaching critical thinking online: Teaching critical thinking online involves fostering skills such as source evaluation, fact-checking, and identifying bias.

- Importance of community involvement in combating misinformation: Community involvement is critical in combating misinformation, as it allows for shared understanding, collective action, and sustained efforts.

Conclusion

Algorithms are not solely responsible for mass shooter radicalization, but they contribute significantly to it by creating echo chambers, promoting extremist content, and fostering online communities that normalize violence. Countering this requires a comprehensive strategy that addresses algorithm design, content moderation, and media literacy. The key takeaway is the urgent need for greater transparency and accountability from tech companies, alongside stronger regulations and proactive measures to prevent algorithmic radicalization. We can press for that accountability by contacting lawmakers, supporting media literacy programs, and backing further research on algorithms and mass shooter radicalization. The fight against mass violence requires a concerted effort to address the complex interplay between technology and extremism.

Tomorrow Is A New Day Insights From Molly Jongs Publishers Weekly Interview

Tomorrow Is A New Day Insights From Molly Jongs Publishers Weekly Interview

Thompsons Monte Carlo Misfortune A Tough Battle

Thompsons Monte Carlo Misfortune A Tough Battle

Wang Suns Table Tennis Dominance Continues Third Straight Mixed Doubles World Championship

Wang Suns Table Tennis Dominance Continues Third Straight Mixed Doubles World Championship

The Future Of Tesla And Space X Elon Musks Crossroads

The Future Of Tesla And Space X Elon Musks Crossroads

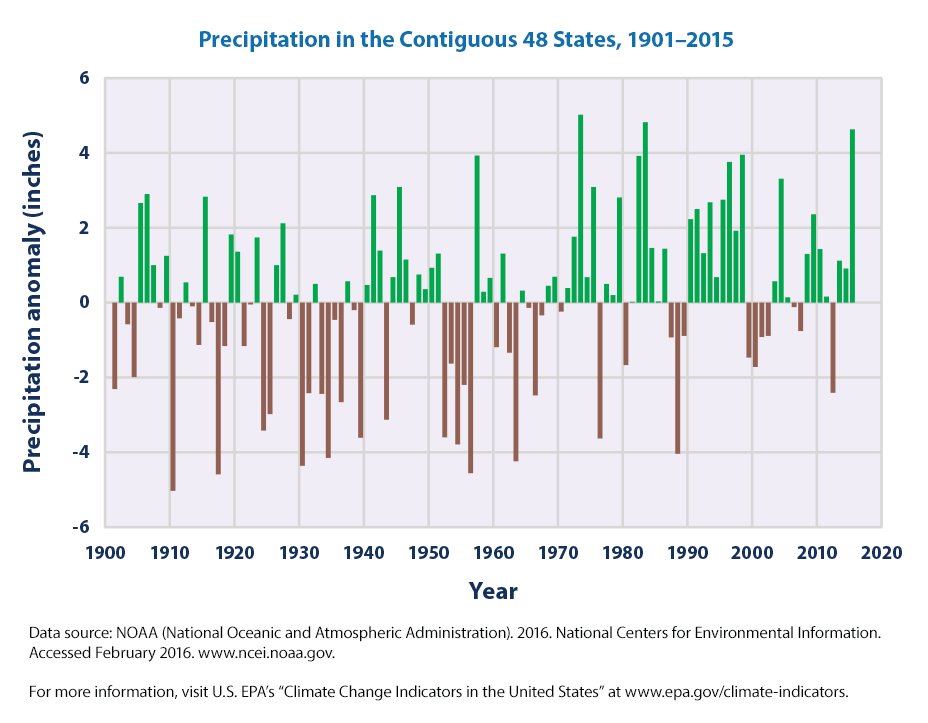

Western Massachusetts Rainfall Trends The Role Of Climate Change

Western Massachusetts Rainfall Trends The Role Of Climate Change