Is AI Therapy A Surveillance Tool In A Modern Police State?

Data Security and Privacy Concerns in AI Therapy

The integration of AI into mental healthcare requires the digital storage and processing of highly sensitive personal data. That data becomes exposed to breaches, unauthorized access, and repurposing, which raises serious concerns about data security and privacy.

Data Breaches and Unauthorized Access: The digital nature of AI therapy platforms makes them susceptible to cyberattacks and data breaches. The consequences of such breaches can be devastating, leading to the exposure of deeply personal and confidential information, including diagnoses, treatment plans, and personal details.

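- Examples of past data breaches in healthcare: High-profile healthcare data breaches have repeatedly demonstrated how vulnerable sensitive patient information is. For example, the 2020 breach of the Finnish psychotherapy provider Vastaamo exposed the therapy records of tens of thousands of patients, some of whom were then extorted directly. Incidents like this show how much harm follows when such data falls into the wrong hands.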

- Potential for hackers to exploit AI therapy platforms: AI therapy platforms, like any digital system, are vulnerable to hacking and malware attacks. Sophisticated hackers could exploit security weaknesses to access and potentially misuse sensitive mental health data.

- Lack of robust security measures in some systems: Not all AI therapy platforms employ robust security measures, leaving sensitive data exposed to potential breaches. A lack of standardized security protocols across the industry exacerbates this risk.

Data Retention Policies and Informed Consent: The ethical implications of data retention policies in AI therapy are significant. The length of time data is stored, its potential uses, and the clarity of informed consent processes are all crucial considerations.

- Concerns regarding long-term data storage: The indefinite storage of sensitive mental health data raises concerns about potential misuse. Data that is collected for therapeutic purposes might be repurposed for other uses, potentially violating patient privacy.

- Potential for misuse of anonymized data: Even anonymized data can be re-identified. Removing names does not remove quasi-identifiers such as postal code, birth date, and sex, and combining these with other datasets can sometimes single out individuals.

- The need for transparent consent procedures: Patients must be fully informed about how their data will be used and stored before consenting to AI therapy. Consent forms need to be clear, concise, and easily understood.

- Challenges in obtaining truly informed consent from vulnerable populations: Individuals experiencing severe mental health conditions may, at times, lack the capacity to give truly informed consent. Additional safeguards are needed to protect this group.
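
The re-identification risk described above can be made concrete with a simple k-anonymity check: if any combination of quasi-identifiers appears only once in a dataset, that record is unique and therefore easier to link back to a person. The following is a minimal sketch, not a complete privacy audit. The field names (`zip`, `birth_year`, `sex`) and the sample records are hypothetical and chosen only for illustration.

```python
from collections import Counter


def smallest_group_size(records, quasi_identifiers):
    """Return k: the size of the smallest group of records that share
    the same values for all of the given quasi-identifier fields."""
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(groups.values())


# Hypothetical "anonymized" export: names removed, quasi-identifiers kept.
records = [
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "depression"},
    {"zip": "02139", "birth_year": 1985, "sex": "F", "diagnosis": "anxiety"},
    {"zip": "02139", "birth_year": 1990, "sex": "M", "diagnosis": "PTSD"},
    {"zip": "94105", "birth_year": 1972, "sex": "M", "diagnosis": "bipolar disorder"},
]

k = smallest_group_size(records, ["zip", "birth_year", "sex"])
print(k)  # 1: at least one record is unique on these three fields
```

A result of 1 means at least one person is the only individual in the dataset with that combination of attributes, so anyone who knows those attributes from another source could learn the diagnosis. A larger k lowers this risk but does not eliminate it.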

Cross-Border Data Transfers and International Regulations: The increasing globalization of AI therapy raises concerns about cross-border data transfers and the lack of comprehensive international regulations.

- Data sovereignty issues: Different countries have varying data protection laws. The transfer of data across borders raises complex legal questions about which jurisdiction's laws apply.

- The difficulty in enforcing data protection laws across jurisdictions: Enforcing data protection laws across international borders can be challenging, especially when different countries have conflicting regulations.

- The lack of harmonization in international data privacy standards: The absence of universally agreed-upon data privacy standards creates uncertainty and makes it difficult to ensure consistent protection of patient data.

Algorithmic Bias and its Impact on Vulnerable Populations

AI algorithms are trained on data, and if that data reflects existing societal biases, the algorithm will likely perpetuate and even amplify those biases. This is particularly concerning in the context of mental healthcare.

Bias in AI Algorithms: AI algorithms used in mental healthcare can exhibit biases related to race, gender, socioeconomic status, and other factors. These biases can lead to discriminatory outcomes, such as misdiagnosis, inappropriate treatment recommendations, and unequal access to care.

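- Examples of bias in AI systems: Studies in several fields, including healthcare, have found AI algorithms that are biased against certain demographic groups. A 2019 study published in Science, for example, found that a widely used US healthcare risk-prediction algorithm underestimated the needs of Black patients because it used past healthcare costs as a proxy for illness.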

- The potential for AI therapy to perpetuate existing societal biases: If the training data for an AI therapy system underrepresents or mischaracterizes a group, the system's diagnoses and recommendations for that group are likely to be less accurate, reinforcing the disparities already present in the data.

- The importance of algorithm transparency and accountability: To mitigate bias, it is crucial to have transparent and accountable AI algorithms. The processes used to develop and deploy these algorithms should be carefully scrutinized.

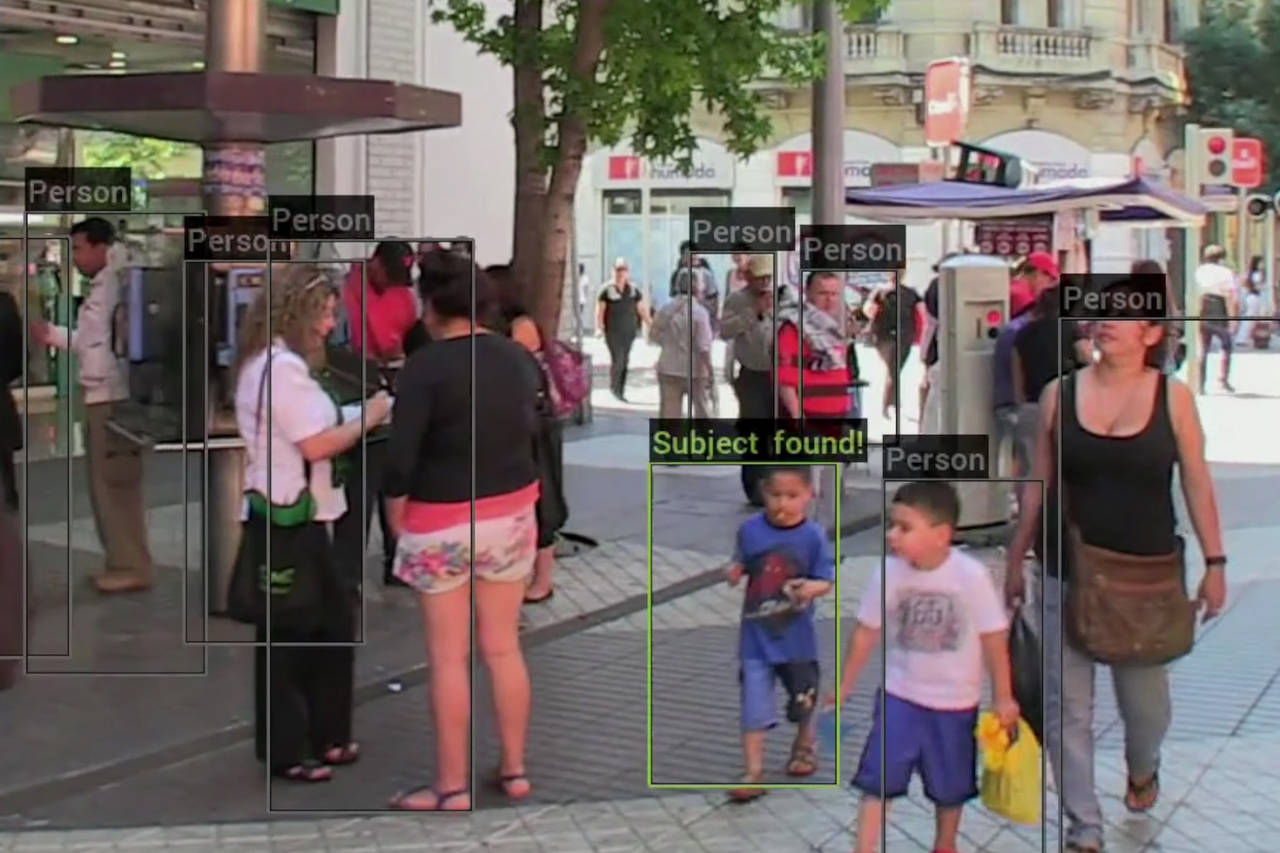

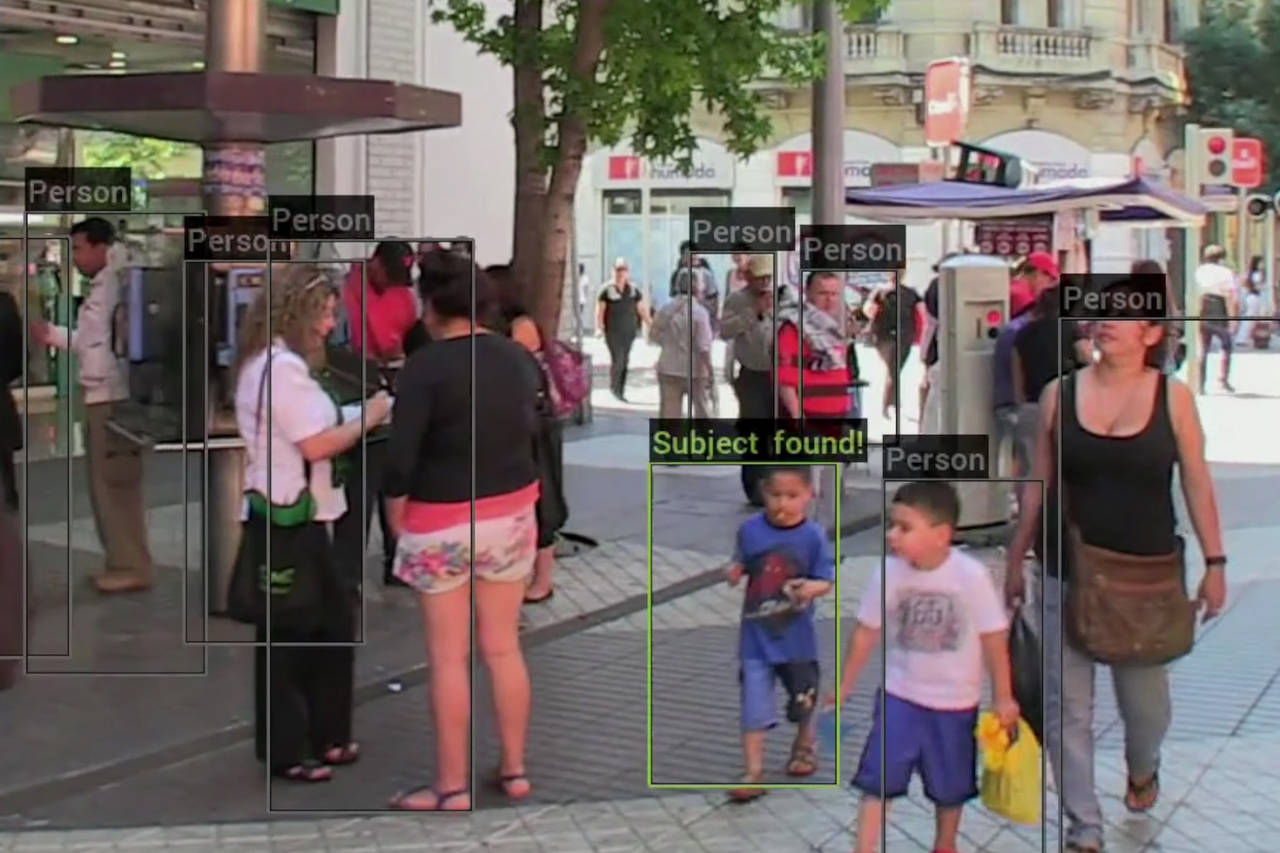

Psychological Profiling and Predictive Policing: There is a significant risk that data collected through AI therapy could be misused for psychological profiling and predictive policing.

- The use of AI to predict future behavior: AI algorithms could be used to analyze patient data to predict future behavior, potentially leading to preemptive interventions or even discriminatory actions.

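- The risks of false positives and wrongful accusations: Predictive policing based on AI analysis carries a high risk of false positives. Because the behaviors being predicted are rare, even a seemingly accurate model will flag far more people who pose no risk than people who do, leading to wrongful accusations and the stigmatization of individuals.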

- The potential for stigmatization and discrimination: The use of AI therapy data for predictive policing could further stigmatize individuals with mental health conditions and lead to discrimination.
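
The false-positive risk above follows from base rates, and a short calculation shows the scale of the problem. The sketch below applies Bayes' rule to compute the share of flagged people who are actually true positives. All three input numbers are hypothetical assumptions chosen for illustration, not measurements of any real system.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Fraction of flagged individuals who are true positives (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical assumptions: 1 in 1,000 people would exhibit the predicted
# behavior, and the model catches 90% of them while wrongly flagging 5%
# of everyone else.
ppv = positive_predictive_value(prevalence=0.001, sensitivity=0.90, specificity=0.95)
print(f"{ppv:.1%}")  # 1.8%
```

Under these assumed numbers, fewer than 2 in 100 flagged individuals would be true positives. The rest would be people wrongly marked as a risk.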

Lack of Diversity and Representation in AI Development: The underrepresentation of diverse populations in the development of AI algorithms contributes to biased outcomes. Algorithms trained on datasets that lack diversity are more likely to produce biased results.

- The need for diverse teams in AI development: Diverse teams of developers are essential to ensure that AI algorithms are designed and tested with various perspectives and experiences in mind.

- The importance of testing algorithms on diverse datasets: Testing algorithms on diverse datasets is crucial to identify and mitigate biases.

- The limitations of current data collection practices: Current data collection practices often lack diversity, hindering the development of unbiased AI algorithms.
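
One way to test an algorithm on diverse data, as called for above, is to compare error rates across demographic groups instead of reporting a single overall figure. The sketch below computes the false positive rate per group. The group labels and the evaluation data are made up for illustration, and false positive rate is only one of several fairness metrics that could be compared.

```python
from collections import defaultdict


def false_positive_rate_by_group(examples):
    """examples: iterable of (group, true_label, predicted_label) with 0/1 labels.
    Returns {group: false positives / actual negatives} for each group
    that has at least one actual negative."""
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, truth, prediction in examples:
        if truth == 0:
            negatives[group] += 1
            if prediction == 1:
                false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}


# Hypothetical evaluation set: (group, actual condition, model prediction).
examples = [
    ("A", 0, 0), ("A", 0, 0), ("A", 0, 1), ("A", 0, 0), ("A", 1, 1),
    ("B", 0, 1), ("B", 0, 1), ("B", 0, 0), ("B", 0, 0), ("B", 1, 1),
]

rates = false_positive_rate_by_group(examples)
print(rates)  # {'A': 0.25, 'B': 0.5}
```

In this made-up example the model wrongly flags members of group B twice as often as members of group A, a disparity that an overall accuracy figure would hide.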

The Erosion of Trust and the Impact on Mental Health Services

The use of AI in therapy raises serious concerns about the erosion of trust in mental health services.

Diminished Client-Therapist Confidentiality: The use of AI in therapy could compromise the fundamental principle of client-therapist confidentiality.

- Concerns about data sharing with third parties: Data collected through AI therapy might be shared with third parties, such as insurance companies or law enforcement agencies, without the client's knowledge or consent.

- The potential for AI therapy to become a tool for surveillance: AI therapy could be misused as a tool for surveillance, undermining the trust and open communication necessary for effective therapy.

- The impact on client willingness to seek help: Concerns about data privacy and potential misuse could deter individuals from seeking mental health services.

The Dehumanization of Mental Healthcare: Over-reliance on AI in therapy could lead to a more impersonal and less empathetic approach to mental healthcare.

- The limitations of AI in providing human connection and empathy: AI lacks the human connection and empathy that are essential components of effective therapy.

- The importance of the therapeutic relationship: The therapeutic relationship is built on trust and a sense of connection between the client and therapist. AI cannot fully replicate this relationship.

- The risks of over-reliance on technology: Over-reliance on technology in mental healthcare could lead to a decline in the quality of care and a diminished focus on the human element.

Access to Justice and Legal Recourse: Individuals harmed by biased or malfunctioning AI systems in mental healthcare may face significant challenges in accessing justice and legal recourse.

- The challenges in proving algorithmic bias: Proving that an AI algorithm is biased and has caused harm can be extremely difficult.

- The need for stronger legal frameworks: Stronger legal frameworks are needed to address the unique challenges posed by AI in mental healthcare and provide avenues for legal recourse.

- The importance of holding developers and users accountable: Developers and users of AI systems in mental healthcare must be held accountable for any harm caused by their products or actions.

Conclusion

AI therapy offers significant potential benefits, but its deployment must be guided by strong ethical principles and robust regulation. Its misuse as a surveillance tool in a modern police state is a real possibility, and algorithmic bias, data breaches, and the erosion of trust in mental health services demand urgent attention. Mitigating these risks means prioritizing data security, requiring strong informed consent procedures, strictly limiting data retention and sharing, making algorithmic design transparent, testing rigorously for bias, and ensuring diverse representation in AI development. Join the conversation on responsible AI therapy and advocate for ethical practices in mental healthcare.
