The Limits Of AI Learning: Promoting Responsible AI Development And Deployment

Data Bias and its Impact on AI Systems

The foundation of any AI system is its training data. If that data reflects existing societal biases, the resulting system is likely to perpetuate, and can even amplify, those inequalities. This is the core problem of biased datasets.

The Problem of Biased Datasets

AI models learn patterns from the data they are trained on. If that data is skewed, for example by overrepresenting one demographic group or underrepresenting others, the model will learn those skews and reproduce them in its outputs.

- Examples: Facial recognition systems have shown markedly higher error rates for people of color, leading to misidentifications. Similarly, algorithmic bias in lending decisions has resulted in unfair loan denials for certain demographic groups.

- Consequences: Biased AI systems can lead to discriminatory outcomes in critical areas like criminal justice, healthcare, and employment, exacerbating existing social inequalities.
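A practical first check for the skew described above is to compare how often each group appears in the training data with its share of the population the system will serve. The sketch below does exactly that and nothing more; the function name `representation_ratios`, the group labels, and the reference shares are all hypothetical and purely illustrative, and in practice the reference shares would have to come from a source appropriate to the deployment.

```python
from collections import Counter


def representation_ratios(groups, reference_shares):
    """Compare each group's share of a dataset with its share of a reference population.

    A ratio below 1.0 means the group is underrepresented in the data;
    a ratio above 1.0 means it is overrepresented.
    """
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)
    total = len(groups)
    return {
        group: (counts.get(group, 0) / total) / ref_share
        for group, ref_share in reference_shares.items()
    }


# Hypothetical group labels for 100 training examples and hypothetical
# reference-population shares; both are illustrative, not real statistics.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}

for group, ratio in representation_ratios(training_groups, reference).items():
    print(f"group {group}: {ratio:.2f}x its reference share")
```

In this toy data, group C appears at only about a third of its reference share, the kind of gap that would warrant collecting more data or reweighting before training. Balanced counts alone do not guarantee fair outcomes, since labels and features can still encode bias.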

Mitigating Bias Through Algorithmic Transparency

Addressing bias requires a multi-pronged approach, including algorithmic transparency. Understanding how an AI system arrives at its conclusions is essential for identifying and correcting biases.

- Techniques: Explainable AI (XAI) techniques aim to make AI decision-making more understandable. Regular audits of algorithms for bias are also crucial. Establishing clear ethical guidelines for algorithm design can help prevent bias from the outset.

- Challenges: Balancing transparency with the need to protect proprietary information presents a significant challenge. The inherent complexity of many AI algorithms also makes interpretation difficult.
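One simple form a regular bias audit can take is comparing outcome rates across groups, for instance loan approval rates. The sketch below assumes we have each decision and the applicant's group; the function names and data are hypothetical. It flags any group whose approval rate is below 80% of the most-favoured group's rate, borrowing the "four-fifths" rule of thumb from US employment-selection guidance. That rule is a screening heuristic, and selection-rate parity is only one of several fairness metrics (others compare error rates across groups), so a real audit would typically look at more than this.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. loan approvals) within each group."""
    totals, positives = {}, {}
    for approved, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_audit(decisions, groups, threshold=0.8):
    """Compare every group's selection rate with the most-favoured group's rate.

    Groups whose ratio falls below `threshold` are flagged. The 0.8 default
    mirrors the "four-fifths" rule of thumb; it is a screening heuristic,
    not proof of bias or of its absence.
    """
    rates = selection_rates(decisions, groups)
    best = max(rates.values())
    if best == 0:
        # Nobody was approved, so there is no favoured group to compare against.
        return rates, {g: 1.0 for g in rates}, []
    ratios = {g: r / best for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return rates, ratios, flagged


# Hypothetical audit sample: 1 = approved, 0 = denied, for two applicant groups.
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["X"] * 10 + ["Y"] * 10

rates, ratios, flagged = disparate_impact_audit(decisions, groups)
print("selection rates:", rates)
print("ratio to most-favoured group:", ratios)
print("flagged groups:", flagged)
```

Here group Y is approved at half the rate of group X and is flagged. Running such a check on every model release, rather than once, is what turns it into an ongoing audit.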

The Limitations of Current AI Architectures

Beyond data bias, the very architecture of many current AI systems presents significant limitations.

The "Black Box" Problem and Lack of Explainability

Many sophisticated AI models, particularly deep learning systems, function as "black boxes." Their decision-making processes are opaque, making it difficult to understand how they arrive at their conclusions.

- Implications: This lack of explainability makes it difficult to identify errors, debug flawed systems, and build trust in AI's decisions. If an AI system makes a critical error, understanding why it made that error is vital for fixing the problem and preventing future occurrences.

- Solutions: Advances in XAI are crucial. Equally important is a shift toward models that are interpretable by design, so that their reasoning can be inspected directly rather than reconstructed after the fact.
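To make the contrast with a black box concrete, here is a minimal sketch of an inherently interpretable model: a linear score in which each feature's contribution is simply its weight times its value, so the explanation falls out of the model itself. The feature names, weights, and applicant values are hypothetical, and a model this simple will not match a deep network's accuracy on many tasks; that trade-off is part of why post-hoc XAI methods for complex models remain an active research area.

```python
def explain_linear_score(weights, bias, features):
    """Score an input with a linear model and return each feature's contribution.

    Because the score is just bias + sum(weight * value), every contribution
    can be read off directly, which is what makes such models interpretable.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical credit-scoring weights and one applicant (illustrative values only).
weights = {"income_k": 0.04, "debt_ratio": -2.5, "late_payments": -0.8}
applicant = {"income_k": 50, "debt_ratio": 0.4, "late_payments": 2}

score, contributions = explain_linear_score(weights, bias=1.0, features=applicant)

# Sort by absolute impact so the biggest drivers of the decision come first.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>14}: {value:+.2f}")
print(f"{'total score':>14}: {score:+.2f}")
```

If this applicant were wrongly scored, the breakdown shows immediately which input pushed the decision and by how much, which is exactly the debugging step that is hard to perform on an opaque model.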

Generalization and Robustness Challenges

AI models often struggle to generalize their learned knowledge to new, unseen data. They may perform well on the data they were trained on but fail dramatically when faced with unexpected situations.

- Examples: Self-driving cars may perform well in controlled environments but struggle with unpredictable weather conditions or unexpected obstacles. Medical diagnosis systems might accurately identify common diseases but misinterpret rare conditions.

- Solutions: Training techniques such as transfer learning and data augmentation can improve generalization. More rigorous testing in diverse and challenging environments is essential. Incorporating uncertainty quantification lets a system signal when an input lies outside what it has learned, instead of silently returning a confident but wrong answer.
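Uncertainty quantification can be illustrated with a bootstrap ensemble: train many copies of a model on resampled versions of the training data and treat their disagreement as a measure of uncertainty. In the sketch below, a straight-line least-squares fit stands in for a real AI model, and the training data is synthetic; the function names are hypothetical. Bootstrap ensembles are only one option, alongside approaches such as Bayesian methods and deep ensembles.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def bootstrap_ensemble(xs, ys, n_models=200, seed=0):
    """Fit one line per bootstrap resample (sampling with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    while len(models) < n_models:
        idx = [rng.randrange(n) for _ in range(n)]
        bx = [xs[i] for i in idx]
        if len(set(bx)) < 2:  # a line needs at least two distinct x values
            continue
        models.append(fit_line(bx, [ys[i] for i in idx]))
    return models


def predict_with_uncertainty(models, x):
    """Mean prediction and spread (standard deviation) across the ensemble."""
    preds = [a + b * x for a, b in models]
    return statistics.fmean(preds), statistics.stdev(preds)


# Synthetic noisy training data, observed only for x in [0, 10].
data_rng = random.Random(42)
train_x = [i / 2 for i in range(21)]
train_y = [2.0 + 0.5 * x + data_rng.gauss(0, 1.0) for x in train_x]

models = bootstrap_ensemble(train_x, train_y)
for x in (5.0, 50.0):  # inside vs. far outside the training range
    mean, spread = predict_with_uncertainty(models, x)
    print(f"x={x:>5}: prediction {mean:.2f} +/- {spread:.2f}")
```

The spread at x = 50, far outside the training range, comes out many times larger than at x = 5. A deployed system could use that signal to abstain or escalate rather than act on an extrapolation.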

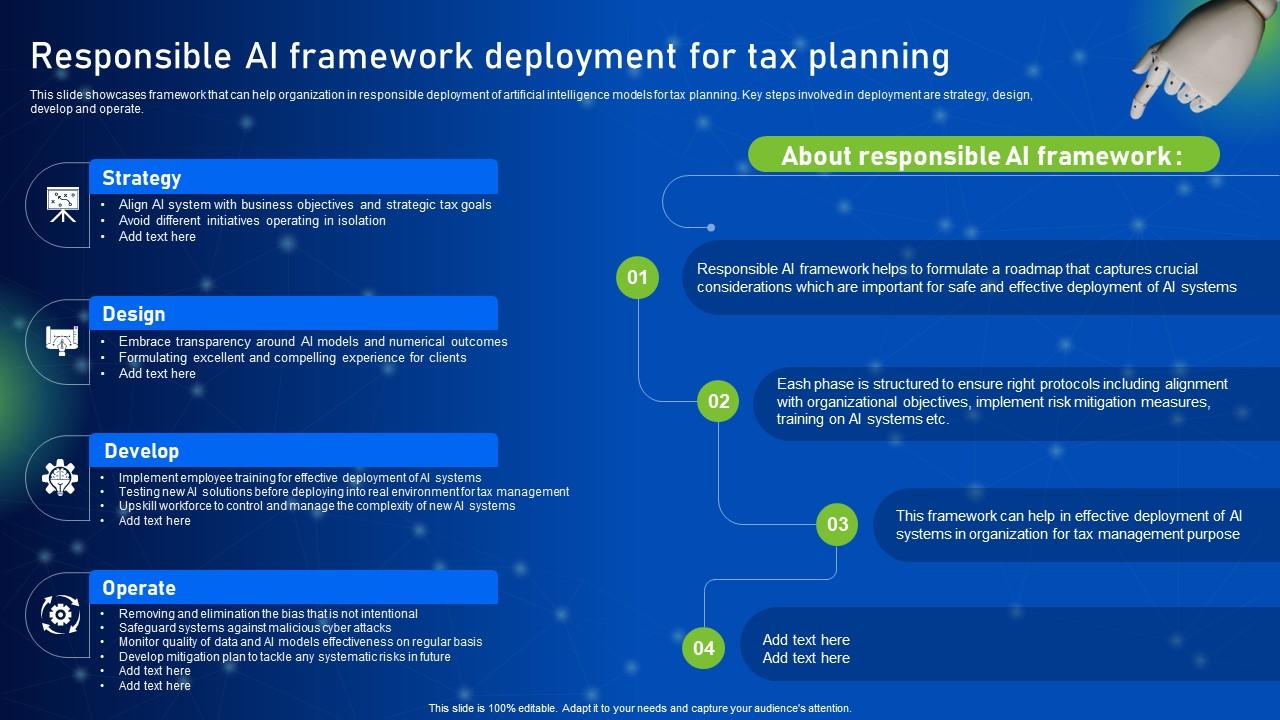

Ethical Considerations in AI Development and Deployment

The development and deployment of AI raise significant ethical questions that demand careful consideration.

Accountability and Responsibility for AI Decisions

Establishing clear lines of responsibility when AI systems make decisions with significant consequences is critical.

- Challenges: Determining liability in cases of AI-caused harm is complex. Regulatory frameworks for AI are still in their nascent stages.

- Solutions: Developing comprehensive ethical guidelines for AI development and deployment is essential. Robust oversight mechanisms are needed to ensure responsible use. Promoting responsible innovation within the AI field should be a priority.
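One concrete oversight mechanism is an audit trail that records, for every automated decision, which model version produced it and who is accountable for it, and that routes borderline cases to a human instead of acting on them automatically. The sketch below is a minimal illustration under stated assumptions: `DecisionRecord`, `decide`, the model version string, the owner address, and the threshold and review-band values are all hypothetical and not drawn from any regulation or standard.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One auditable entry: what was decided, by which model, and who is accountable."""
    case_id: str
    model_version: str
    inputs: dict
    score: float
    decision: str
    needs_human_review: bool
    accountable_owner: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def decide(case_id, inputs, score, *, model_version, owner, threshold=0.5, review_band=0.1):
    """Turn a model score into a logged decision.

    Scores within `review_band` of the threshold are marked for human review
    instead of being acted on automatically.
    """
    borderline = abs(score - threshold) < review_band
    if borderline:
        decision = "pending_review"
    else:
        decision = "approve" if score >= threshold else "deny"
    return DecisionRecord(case_id, model_version, inputs, score, decision, borderline, owner)


audit_log = []
for case_id, score in [("c-001", 0.91), ("c-002", 0.55), ("c-003", 0.12)]:
    record = decide(case_id, {"example_feature": 1}, score,
                    model_version="credit-model-v3", owner="risk-team@example.com")
    audit_log.append(record)
    print(json.dumps(asdict(record)))
```

Only the clear-cut cases are decided automatically here; case c-002 sits close to the threshold and is held for review. Because each record names a model version and an owner, questions of liability can be traced back to a specific system and team rather than to "the AI".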

Job Displacement and Societal Impact of AI

AI-driven automation has the potential to cause significant job displacement across various sectors. Proactive measures are needed to mitigate this impact.

- Solutions: Investing in retraining and upskilling programs is crucial to prepare workers for the changing job market. Exploring alternative economic models, such as universal basic income, may also be necessary. Focusing on human-AI collaboration rather than complete replacement can help to leverage the benefits of AI while minimizing negative consequences.

Conclusion

The limits of AI learning are multifaceted, encompassing data bias, architectural constraints, and significant ethical concerns. Addressing these challenges requires a concerted effort from researchers, developers, policymakers, and the public. Ignoring these limitations risks exacerbating existing inequalities and creating new societal problems. We must prioritize responsible AI development and deployment so that this powerful technology serves humanity's best interests. That means engaging in open dialogue, promoting further research, and collaborating to mitigate the risks of unconstrained AI learning, so that AI enhances rather than hinders human well-being. The future of responsible AI depends on our collective commitment to acknowledging these limits and fostering ethical innovation.
