The Reality Of AI Learning: Navigating The Ethical Challenges Of Artificial Intelligence


Bias and Discrimination in AI Systems

AI systems, powerful as they are, are not immune to the biases present in the data they are trained on. This leads to what's known as algorithmic bias, resulting in discriminatory outcomes that can have far-reaching consequences.

Algorithmic Bias

Algorithmic bias manifests in various ways, often reflecting and amplifying existing societal prejudices.

- Loan applications: AI systems used to assess loan applications might unfairly deny loans to applicants from specific demographic groups due to biased historical data.

- Facial recognition: Facial recognition systems have shown higher error rates for some demographic groups, particularly individuals with darker skin tones.

- Criminal justice: AI tools used in predicting recidivism can perpetuate existing biases within the criminal justice system, leading to unfair sentencing and profiling.

Creating diverse and representative datasets is crucial for mitigating algorithmic bias. This means actively seeking out and including data from underrepresented groups. Furthermore, employing fairness-aware algorithms, which are designed to identify and correct for bias, is becoming increasingly important.
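As a minimal sketch of the "identify" half of fairness-aware practice, the Python snippet below compares approval rates across groups in a hypothetical set of loan decisions. It then computes a disparate impact ratio, defined as the lowest group's approval rate divided by the highest. The data, the group labels `A` and `B`, and the function names are illustrative assumptions, not a standard API. A ratio of 1.0 means equal approval rates. US employment guidance uses a "four-fifths rule" as a rough screening threshold: ratios below 0.8 are treated as a signal worth investigating.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    decisions: iterable of (group, approved) pairs, where approved is a bool.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group approval rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved in any group: no disparity to measure
    return min(rates.values()) / highest


# Hypothetical loan decisions, for illustration only.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))  # 0.625
```

This check only detects a disparity. It does not explain the cause or correct it; that is where techniques such as reweighting training data or adjusting decision thresholds come in.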

The Impact of Bias on Vulnerable Groups

AI bias disproportionately harms marginalized communities, exacerbating existing inequalities.

- Racial bias: AI systems can perpetuate racial stereotypes, leading to unfair treatment in areas like hiring, housing, and law enforcement.

- Gender bias: AI systems can reinforce gender stereotypes, limiting opportunities for women in various fields.

- Bias against people with disabilities: AI systems may not be accessible or inclusive for people with disabilities, further marginalizing them.

Accountability and transparency are essential to address this issue. We need mechanisms to identify and rectify bias in AI systems and hold developers accountable for the impact of their creations.

Privacy and Data Security in the Age of AI

The rise of AI is inextricably linked to the collection and analysis of vast amounts of data. This raises significant ethical concerns regarding privacy and data security.

Data Collection and Surveillance

The scale of data collection for AI training raises concerns about potential misuse and the erosion of individual privacy.

- Data breaches: Large datasets are vulnerable to hacking and data breaches, potentially exposing sensitive personal information.

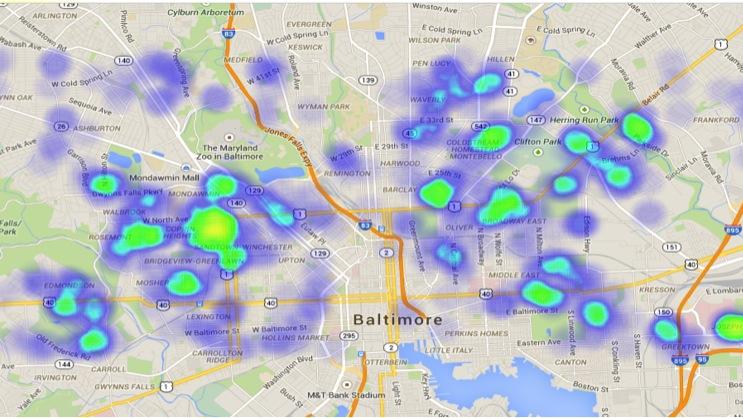

- Unauthorized surveillance: AI-powered surveillance technologies make mass monitoring far easier, raising concerns about potential abuses of power.

- Erosion of privacy: The constant collection and analysis of personal data can lead to a gradual erosion of individual privacy.

Data anonymization techniques and obtaining informed user consent are vital steps in protecting individual privacy. Robust security measures are also crucial to prevent data breaches and unauthorized access.
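One common building block is pseudonymization combined with generalization. The sketch below replaces a direct identifier with a keyed HMAC-SHA256 hash, coarsens an exact age into a ten-year range, and drops fields that are not needed downstream. The field names (`email`, `name`, `age`, `purchase_total`), the helper names, and the placeholder key are all hypothetical. Note that under the GDPR, pseudonymized data still counts as personal data, because whoever holds the key can re-link it. Stronger guarantees require techniques such as k-anonymity or differential privacy.

```python
import hashlib
import hmac

# Hypothetical placeholder key for illustration; a real deployment would keep it
# in a secrets manager, stored separately from the pseudonymized data.
SECRET_KEY = b"example-key-do-not-use"


def pseudonymize_id(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_age(age: int, width: int = 10) -> str:
    """Coarsen an exact age into a range such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def anonymize_record(record: dict) -> dict:
    """Keep a pseudonymous ID, an age range, and the purchase total; drop name and email."""
    return {
        "user": pseudonymize_id(record["email"]),
        "age_range": generalize_age(record["age"]),
        "purchase_total": record["purchase_total"],
    }


record = {"email": "jane@example.com", "name": "Jane Doe", "age": 34, "purchase_total": 120.5}
print(anonymize_record(record))
```

Using a keyed hash rather than a plain SHA-256 of the email is a deliberate choice. Email addresses are guessable, so an unkeyed hash could be reversed simply by hashing candidate addresses.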

Protecting Sensitive Information

AI developers and users have an ethical responsibility to protect sensitive personal data.

- GDPR and CCPA: Regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) aim to protect individuals' data rights.

- Encryption and data minimization: Employing strong encryption and adhering to the principle of data minimization (collecting only the necessary data) are crucial for safeguarding sensitive information.
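Data minimization can also be enforced in code. Each processing purpose declares which fields it actually needs, and everything else is discarded before storage or training. In the sketch below, the purposes, field names, and `minimize` helper are hypothetical. The point is the allowlist pattern, which fails closed when a purpose has no declared policy.

```python
# Hypothetical mapping from processing purpose to the fields it actually needs.
ALLOWED_FIELDS = {
    "shipping": {"name", "street", "city", "postcode"},
    "fraud_check": {"order_id", "order_total", "country"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields the stated purpose requires; reject undeclared purposes."""
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no data-collection policy defined for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


order = {
    "order_id": "A-1001",
    "name": "Jane Doe",
    "street": "1 Main St",
    "city": "Springfield",
    "postcode": "12345",
    "country": "US",
    "order_total": 59.99,
    "date_of_birth": "1990-04-02",
}
print(minimize(order, "fraud_check"))
# {'order_id': 'A-1001', 'country': 'US', 'order_total': 59.99}
```

An allowlist is preferred over a blocklist here. A newly added sensitive field is excluded by default instead of leaking until someone remembers to block it.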

Stronger regulations and ethical frameworks are needed to ensure responsible handling of personal data in the age of AI.

Job Displacement and Economic Inequality

The automation potential of AI raises significant concerns about job displacement and the exacerbation of existing economic inequalities.

Automation and the Future of Work

AI-driven automation is expected to displace workers in various sectors.

- Manufacturing: Automation is already transforming manufacturing, reducing the demand for manual labor.

- Transportation: Self-driving vehicles threaten to displace truck drivers, taxi drivers, and other transportation workers.

- Customer service: AI-powered chatbots and virtual assistants are increasingly replacing human customer service representatives.

Reskilling and upskilling initiatives are crucial to help workers adapt to the changing job market and acquire the skills needed for new roles.

Exacerbating Existing Inequalities

AI-driven automation could exacerbate existing economic inequalities.

- Impact on low-skilled workers: Low-skilled workers are disproportionately at risk of job displacement due to automation.

- Developing countries: The impact of automation could be particularly severe in developing countries, potentially widening the gap between developed and developing nations.

Equitable distribution of the benefits of AI, including mechanisms for social safety nets and support for displaced workers, is essential to mitigate the negative impacts of automation.

Responsibility and Accountability in AI Development

The complexity of many AI systems presents significant challenges in understanding their decision-making processes, raising critical questions about responsibility and accountability.

The Problem of Explainability

Many AI systems operate as "black boxes," making it difficult to understand how they arrive at their conclusions.

- Explainable AI (XAI): Techniques that make a model's reasoning interpretable to humans are crucial for increasing transparency and accountability.

- Understanding decision-making: Understanding the reasoning behind AI decisions is essential for identifying and correcting biases and errors.

Without explainability, it becomes extremely difficult to hold developers and deployers accountable for what their AI systems do.
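One widely used model-agnostic explanation technique is permutation feature importance. It shuffles a single input feature across examples and measures how much the model's accuracy drops. A large drop means the model leans on that feature, and no drop means it ignores it. The sketch below applies this to a hypothetical loan-scoring function standing in for a black-box model. The feature names, scoring rule, and synthetic applicants are all assumptions made for illustration. A check like this can reveal whether a model relies on a feature such as ZIP code, which may act as a proxy for protected attributes.

```python
import random


def model(applicant: dict) -> bool:
    """Hypothetical black-box model: a fixed scoring rule standing in for a trained classifier.

    It uses income and credit history, and deliberately ignores zip_code.
    """
    score = 0.6 * applicant["income"] / 100_000 + 0.4 * applicant["credit_history_years"] / 20
    return score >= 0.5


def accuracy(predict, rows, labels) -> float:
    """Fraction of rows where the prediction matches the label."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(predict, rows, labels, feature, seed=0) -> float:
    """Accuracy lost when one feature's values are shuffled across rows."""
    baseline = accuracy(predict, rows, labels)
    values = [r[feature] for r in rows]
    random.Random(seed).shuffle(values)
    permuted = [dict(r, **{feature: v}) for r, v in zip(rows, values)]
    return baseline - accuracy(predict, permuted, labels)


# Synthetic applicants; labels are the model's own predictions, so baseline accuracy is 1.0.
rng = random.Random(42)
rows = [
    {
        "income": rng.randint(20_000, 150_000),
        "credit_history_years": rng.randint(0, 30),
        "zip_code": rng.choice(["10001", "60601", "94105"]),
    }
    for _ in range(200)
]
labels = [model(r) for r in rows]

for feature in ("income", "credit_history_years", "zip_code"):
    print(feature, round(permutation_importance(model, rows, labels, feature), 3))
```

Here `zip_code` scores exactly 0.0 because the scoring rule never reads it. In practice, labels would come from held-out ground truth rather than from the model itself. For real models, scikit-learn offers `sklearn.inspection.permutation_importance`.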

Establishing Ethical Frameworks and Guidelines

Clear ethical guidelines and regulations are necessary to govern AI development and deployment.

- Asilomar AI Principles: Existing frameworks like the Asilomar AI Principles offer a starting point for ethical AI development.

- Collaboration: Governments, industry, and civil society need to collaborate to establish robust ethical standards.

Developing and enforcing ethical frameworks is a collective responsibility, crucial for ensuring that AI benefits all of humanity.

Conclusion

The ethical challenges of artificial intelligence (bias, privacy violations, job displacement, and the lack of accountability) are significant and demand immediate attention. Addressing them is paramount to ensuring that AI is developed and deployed responsibly and benefits all members of society. Let's work together to navigate these complex issues and build a more equitable and responsible future powered by AI.

Featured Posts

-

Minnesota Air Quality Crisis Impact Of Canadian Wildfires

May 31, 2025

Minnesota Air Quality Crisis Impact Of Canadian Wildfires

May 31, 2025 -

Indian Wells Masters Runes Decisive Win Against Tsitsipas

May 31, 2025

Indian Wells Masters Runes Decisive Win Against Tsitsipas

May 31, 2025 -

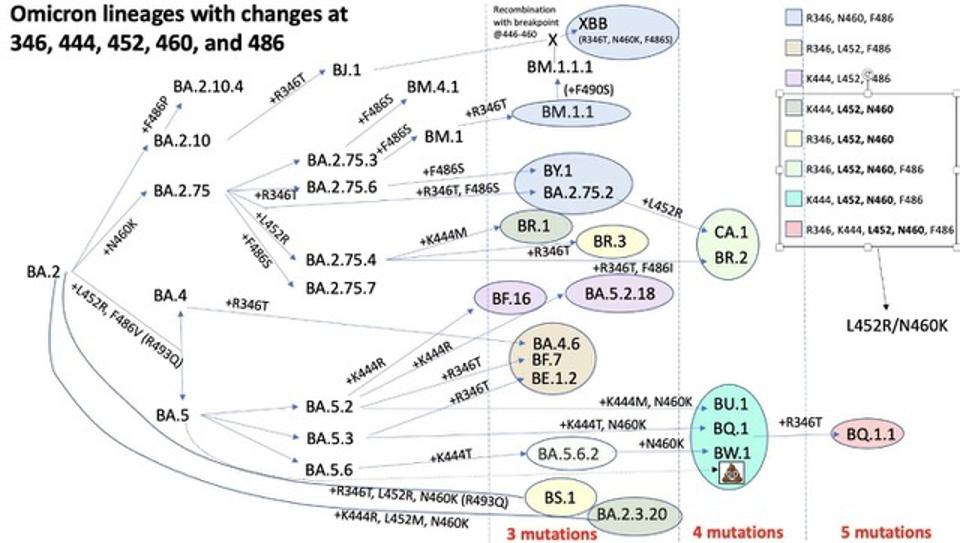

Who New Covid 19 Variant Fueling Case Increases Globally

May 31, 2025

Who New Covid 19 Variant Fueling Case Increases Globally

May 31, 2025 -

Investigating Houstons Drug Addicted Rat Population

May 31, 2025

Investigating Houstons Drug Addicted Rat Population

May 31, 2025 -

Guelsen Bubikoglu Nun Son Hali Yesilcam Guezeli Hayranlarini Sasirtti Mine Tugay Dan Da Tepki Geldi

May 31, 2025

Guelsen Bubikoglu Nun Son Hali Yesilcam Guezeli Hayranlarini Sasirtti Mine Tugay Dan Da Tepki Geldi

May 31, 2025