The Surveillance State And AI Therapy: A Critical Examination


This article analyzes the key challenges and opportunities presented by the use of AI in mental healthcare, focusing on data privacy, algorithmic bias, therapeutic efficacy, and the urgent need for robust regulatory frameworks. Because AI therapy is spreading at the same time as surveillance is expanding, the two must be examined together, carefully and with nuance.

Data Privacy Concerns in AI-Powered Mental Healthcare

AI-powered mental healthcare depends on collecting sensitive personal information. The same data that makes personalized AI therapy possible also creates significant privacy risks.

The Nature of Data Collected

AI therapy platforms often collect a wide range of sensitive personal information, including:

- Detailed personal histories, from childhood experiences to current relationships.

- Mental health diagnoses and treatment histories.

- Recordings of therapy sessions, potentially including highly personal disclosures.

- Biometric data, such as voice patterns and typing speed, which might inadvertently reveal emotional states.

This level of intimate detail demands stringent data protection, because the risks are considerable:

- Risks of data breaches and unauthorized access: Cyberattacks targeting AI therapy platforms could expose highly sensitive mental health data, causing serious harm to users and reputational damage to providers.

- Potential for data misuse by third parties: Insurance companies or employers could gain access to this data and use it to discriminate against users or deny them coverage or services.

- Lack of clear consent protocols: Many AI therapy applications lack clear and easily understandable consent protocols, leaving users unaware of how their data is being collected, used, and protected.

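- The challenges of anonymizing sensitive mental health data: Fully anonymizing this type of data is exceptionally difficult. Even with names removed, combinations of ordinary details such as location, age, and gender can be linked with other datasets to re-identify individuals.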
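To make the re-identification risk concrete, here is a minimal Python sketch of a k-anonymity check. The records, field names, and the choice of ZIP code, birth year, and gender as quasi-identifiers are hypothetical illustrations, not data from any real platform. k-anonymity asks how many records share each combination of quasi-identifiers; a value of 1 means at least one person is unique on those fields and could be singled out by linking the table with another dataset, even though no names appear.

```python
from collections import Counter

# Hypothetical "de-identified" records: names removed, quasi-identifiers kept.
records = [
    {"zip": "02139", "birth_year": 1987, "gender": "F", "diagnosis": "major depressive disorder"},
    {"zip": "02139", "birth_year": 1987, "gender": "F", "diagnosis": "generalized anxiety disorder"},
    {"zip": "02139", "birth_year": 1990, "gender": "M", "diagnosis": "PTSD"},
    {"zip": "02141", "birth_year": 1975, "gender": "F", "diagnosis": "bipolar disorder"},
]

# Assumed quasi-identifiers: attributes an attacker could find in other datasets.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")


def group_sizes(rows, keys):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys):
    """Return k: the size of the smallest group of rows with identical quasi-identifiers."""
    return min(group_sizes(rows, keys).values())


def unique_records(rows, keys):
    """Return rows whose quasi-identifier combination occurs only once (re-identifiable by linkage)."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


print(k_anonymity(records, QUASI_IDENTIFIERS))  # 1
print(len(unique_records(records, QUASI_IDENTIFIERS)))  # 2
```

Passing a k-anonymity check is not sufficient on its own: if every record in a group shares the same diagnosis, that diagnosis is still disclosed. This is one reason sensitive mental health data is so hard to anonymize.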

Algorithmic Bias and its Impact on AI Therapy

The algorithms powering AI therapy are trained on vast datasets, and biases present in these datasets can lead to significant inequalities. This represents a major challenge for equitable access to AI therapy.

Bias in Training Data

If the training data predominantly reflects the experiences of one demographic group (e.g., wealthy, white individuals), the resulting AI system may not accurately diagnose or treat individuals from other groups.

- Examples of potential biases: A biased algorithm might misinterpret the symptoms of depression in individuals from minority ethnic backgrounds, leading to inaccurate diagnoses and inappropriate treatment. Gender bias could lead to misdiagnosis or cause symptoms related to gender identity to be overlooked.

- The impact of biased algorithms on diagnosis and treatment recommendations: Biased algorithms can perpetuate and amplify existing health disparities, further marginalizing already vulnerable populations.

- The lack of diversity in AI development teams: When the teams developing these algorithms lack diversity, potential biases are less likely to be noticed.

Addressing algorithmic bias requires carefully curated datasets that reflect the diversity of the population and the active involvement of diverse experts in the development process.
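One practical way to detect the kind of bias discussed in this section is to audit a model's performance separately for each demographic group rather than only in aggregate. The minimal Python sketch below computes recall (the share of clinician-confirmed cases the model actually flags) per group; the group names, labels, and predictions are hypothetical toy data. A large gap between groups, as here, would be a warning sign that the model under-detects depression in one population.

```python
from collections import defaultdict

# Hypothetical audit data: (demographic group, clinician label, model prediction),
# where 1 means "depression indicated" and 0 means "not indicated".
audit = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]


def recall_by_group(rows):
    """Per group, the fraction of true positive cases that the model also flagged."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for group, label, prediction in rows:
        if label == 1:  # only clinician-confirmed cases count toward recall
            positives[group] += 1
            hits[group] += prediction
    return {group: hits[group] / positives[group] for group in positives}


def max_recall_gap(rates):
    """Difference between the best- and worst-served groups."""
    return max(rates.values()) - min(rates.values())


rates = recall_by_group(audit)
print(rates)  # {'group_a': 0.75, 'group_b': 0.25}
print(max_recall_gap(rates))  # 0.5
```

Recall parity is only one of several fairness criteria, and which one matters most depends on the clinical setting. A real audit would also need enough examples per group for the estimates to be meaningful.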

The Therapeutic Efficacy of AI Therapy

While AI therapy holds significant promise, its actual effectiveness compared to traditional methods remains a subject of ongoing research.

Benefits and Limitations

AI therapy offers several potential benefits:

- Accessibility: AI-powered platforms can provide access to mental healthcare in remote areas or for individuals with mobility limitations.

- Affordability: AI therapy could be more cost-effective than traditional therapy, putting mental healthcare within financial reach of more people.

- Personalized treatment plans: AI can analyze individual data to create customized treatment plans tailored to specific needs.

However, limitations also exist:

- Lack of human connection: The absence of genuine human interaction can hinder the therapeutic process, especially for individuals who require strong empathetic support.

- Inability to handle complex emotional situations: AI lacks the nuanced emotional intelligence and critical thinking abilities of a human therapist, making it ill-equipped to handle complex cases.

- Potential for misdiagnosis or inappropriate treatment recommendations: Inaccurate algorithms can lead to incorrect diagnoses and potentially harmful treatment recommendations.

- Dependence on human oversight: AI-powered therapy cannot safely operate on its own; human oversight and intervention are crucial to ensure its safety and efficacy.

Regulatory Frameworks and Ethical Considerations

The responsible development and deployment of AI in mental healthcare require strong regulatory frameworks and ethical guidelines.

The Need for Robust Regulations

Clear guidelines are needed to protect patient rights and data privacy.

- Data protection laws and their application to AI therapy: Existing data protection laws need to be updated and applied effectively to cover the unique challenges posed by AI therapy.

- Ethical considerations related to patient autonomy and informed consent: Patients need to be fully informed about how their data is used and have control over their data.

- The role of professional organizations in establishing ethical standards: Professional organizations need to play an active role in setting ethical guidelines and best practices for the use of AI in therapy.

- The need for transparency and accountability in AI therapy systems: AI therapy systems need to be transparent in their decision-making processes, and the organizations that deploy them must be accountable for their outcomes.

Conclusion: Navigating the Complex Landscape of AI Therapy and the Surveillance State

The integration of AI therapy into mental healthcare presents both exciting opportunities and significant challenges. While AI offers the potential for improved access and personalized treatment, the concurrent growth of the surveillance state heightens concerns about data privacy, and algorithmic bias raises further ethical questions about using AI in such a sensitive context. The therapeutic efficacy of AI therapy is still under investigation, and the lack of robust regulatory frameworks adds to the complexity. To realize the benefits of AI therapy while mitigating its risks, we need a strong commitment to responsible development and implementation: prioritizing data privacy, addressing algorithmic bias, and establishing clear ethical guidelines. Continued critical discussion of how to balance AI therapy against surveillance is essential to ensuring equitable and safe access to mental healthcare for all.

Featured Posts

-

Scrutiny Of Bidens Mental State Warrens Response

May 15, 2025

Scrutiny Of Bidens Mental State Warrens Response

May 15, 2025 -

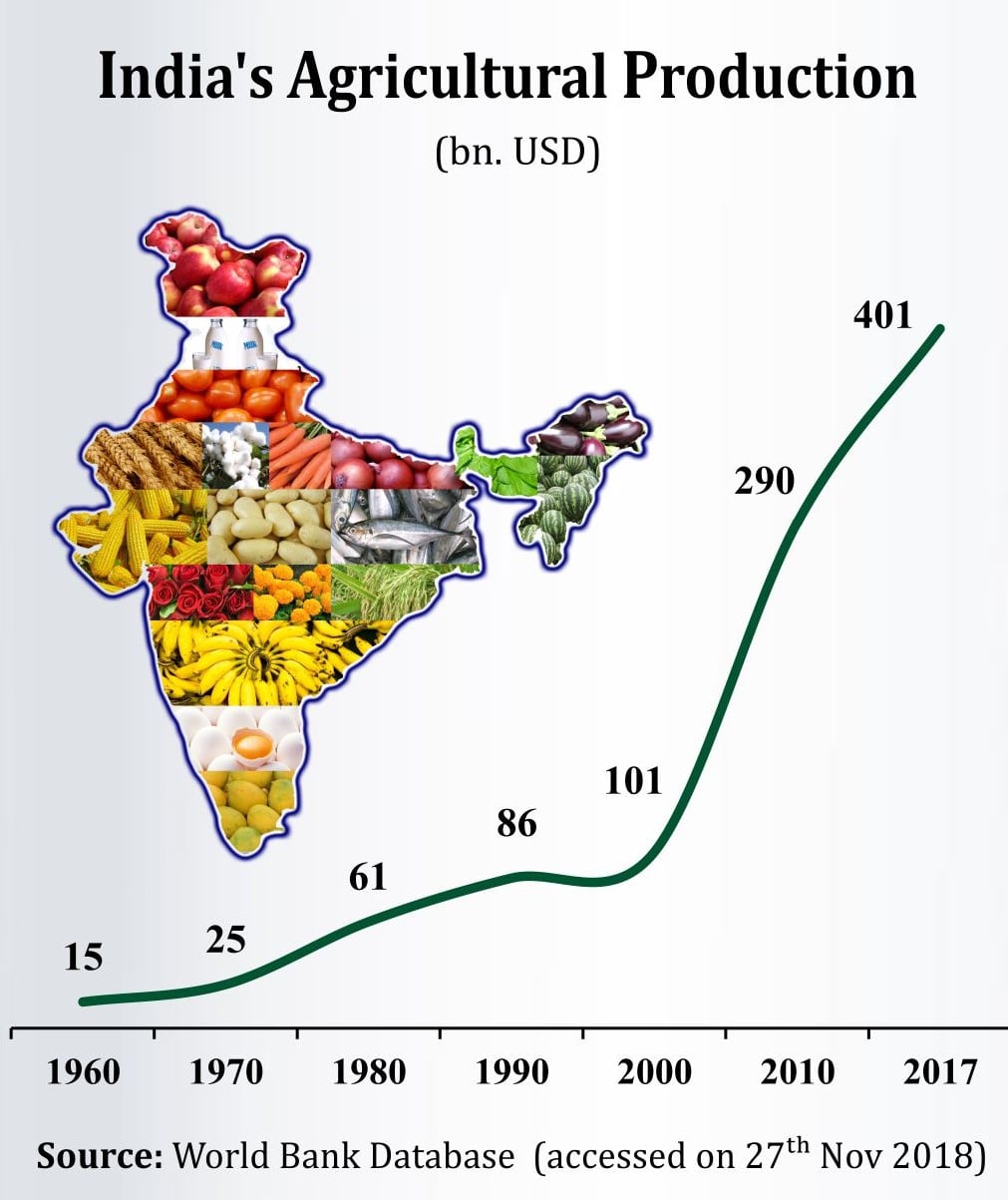

Improved Monsoon Outlook Implications For Indias Agricultural Sector And Consumer Spending

May 15, 2025

Improved Monsoon Outlook Implications For Indias Agricultural Sector And Consumer Spending

May 15, 2025 -

5 The Cat Vont Weekend A Five Picture Summary April 4 6 2025

May 15, 2025

5 The Cat Vont Weekend A Five Picture Summary April 4 6 2025

May 15, 2025 -

Gsw Campus All Clear Following Individual In Custody

May 15, 2025

Gsw Campus All Clear Following Individual In Custody

May 15, 2025 -

Padres Vs Cubs Predicting The Outcome And A Potential Cubs Victory

May 15, 2025

Padres Vs Cubs Predicting The Outcome And A Potential Cubs Victory

May 15, 2025