Why AI Doesn't Learn (and What That Means For You)


The Illusion of AI Learning

AI vs. Human Learning

The fundamental difference between AI and human learning lies in their underlying mechanisms. Humans learn through experience, adaptation, and a deep understanding of the world around them. We build conceptual models, make inferences, and adapt our behavior based on new information. AI, on the other hand, operates through pattern recognition and statistical analysis of existing data. It doesn't truly understand the information it processes; instead, it identifies patterns and extrapolates based on those patterns.

- Human Learning: Learning to ride a bike involves trial and error, adaptation to different terrains, and an intuitive understanding of balance and coordination.

- AI Learning: An AI system identifying cats in images learns by analyzing thousands of labeled images. It identifies patterns in pixels and shapes that correlate with the label "cat," but it doesn't possess an understanding of what a cat is.
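To make "pattern recognition" concrete, here is a minimal sketch of a nearest-centroid classifier in Python. It assumes each image has already been reduced to two made-up numeric features (ear pointiness and whisker density). These features and their values are hypothetical, and real image classifiers work from raw pixels with far more complex models. The point is that "learning" here amounts to averaging numbers for each label.

```python
from math import dist
from statistics import fmean

# Hypothetical toy data: each "image" is reduced to two made-up features
# (ear_pointiness, whisker_density). Real systems learn from raw pixels.
training_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.85, 0.75), "cat"),
    ((0.2, 0.1), "not cat"),
    ((0.3, 0.2), "not cat"),
    ((0.1, 0.3), "not cat"),
]

def fit_centroids(examples):
    """'Learning' here is just averaging the feature vectors seen for each label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }

def predict(centroids, features):
    """Assign the label whose average vector is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = fit_centroids(training_data)
print(predict(centroids, (0.7, 0.85)))   # cat
print(predict(centroids, (0.25, 0.15)))  # not cat
```

Note that `predict` always returns one of the labels it has already seen, even for an input that resembles neither group. It has no way to say "this is something new."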

The Role of Data in "AI Learning"

AI's "learning" is heavily reliant on vast datasets for training. This dependence introduces several limitations:

- Data Bias: Biased training data leads to biased outcomes. For example, facial recognition systems trained on predominantly white faces often perform poorly on people with darker skin tones. This highlights the critical "garbage in, garbage out" principle.

- Overfitting and Generalization: AI models can overfit to the training data, performing well on the data they've seen but failing to generalize to new, unseen data. The model becomes too specific to the training set and cannot adapt to variations.

- Data Limitations: AI systems struggle with situations not represented in their training data. They lack the adaptability and creativity to handle novel or unexpected scenarios.
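Overfitting can be illustrated with a deliberately tiny, hypothetical task: labeling numbers as "big" or "small," with one training example mislabeled to mimic noisy data. The sketch compares a "memorizer" that stores every training example verbatim with a model that learns a single cut-off value. The data and both models are invented for illustration and are not how production systems are built.

```python
from collections import Counter

# Hypothetical toy task: label a number "big" or "small".
# (70, "small") is deliberately mislabeled to mimic noise in real training data.
train = [(5, "small"), (12, "small"), (20, "small"), (33, "small"), (45, "small"),
         (58, "big"), (64, "big"), (70, "small"), (85, "big"), (96, "big")]
test = [(8, "small"), (27, "small"), (38, "small"), (49, "small"),
        (61, "big"), (73, "big"), (90, "big"), (99, "big")]

def accuracy(model, data):
    """Fraction of examples where the model's prediction matches the label."""
    return sum(model(x) == label for x, label in data) / len(data)

def fit_memorizer(data):
    """Overfits: stores every training example, noise included.
    Unseen inputs get the most common training label."""
    table = dict(data)
    fallback = Counter(label for _, label in data).most_common(1)[0][0]
    return lambda x: table.get(x, fallback)

def fit_threshold(data):
    """Generalizes: picks the single cut-off that makes the fewest training errors."""
    def errors(t):
        return sum((x >= t) != (label == "big") for x, label in data)
    best = min((x for x, _ in data), key=errors)
    return lambda x: "big" if x >= best else "small"

memorizer = fit_memorizer(train)
threshold = fit_threshold(train)
print(accuracy(memorizer, train), accuracy(memorizer, test))  # 1.0 0.5
print(accuracy(threshold, train), accuracy(threshold, test))  # 0.9 1.0
```

The memorizer scores perfectly on data it has seen and no better than a coin flip on new data. The simpler model accepts one training mistake and generalizes far better.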

The Limits of Current AI Technology

Lack of Common Sense and Reasoning

AI struggles with tasks requiring common sense reasoning, contextual understanding, and creative problem-solving – capabilities inherent to human intelligence. Current AI systems excel at narrow tasks but lack the broad, general intelligence of humans.

- An AI might treat a car parked in a designated space as a hazard about to move into its path, because it lacks the contextual understanding that parked cars typically remain stationary.

- AI systems often fail to understand nuances in language or situations that require common sense inferences.

The "Black Box" Problem

Understanding the decision-making processes of complex AI systems, particularly deep learning models, is incredibly challenging. This "black box" problem makes it difficult to identify errors or biases within the system. Explainable AI (XAI) is a growing field addressing this challenge, aiming to make AI's decision-making processes more transparent and understandable.

- The lack of transparency makes it hard to debug AI systems and ensure accountability for their actions.

- XAI techniques aim to provide insights into the reasoning behind AI's outputs, increasing trust and allowing for better error correction.
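Explanation methods for deep networks are sophisticated. However, the basic idea behind one family of them, feature attribution, can be sketched with a simple linear scoring model, where each feature's contribution to a decision is just its weight times its value. The model, feature names, and weights below are hypothetical and invented purely for illustration.

```python
# Hypothetical linear "cat score": the weights are invented for illustration.
# A score above 0 is read as "cat".
WEIGHTS = {"ear_pointiness": 1.5, "whisker_density": 1.0, "background_grass": 2.0}
BIAS = -2.0

def score(features):
    """Weighted sum of the features plus a bias term."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())

def explain(features):
    """Per-feature contribution (weight * value), largest influence first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)

photo = {"ear_pointiness": 0.4, "whisker_density": 0.3, "background_grass": 0.9}
print(round(score(photo), 2))  # 0.7
for name, contribution in explain(photo):
    print(f"{name}: {contribution:+.2f}")
```

In this made-up example, the largest contribution comes from the grassy background rather than from anything about the animal. Explanations can expose this kind of hidden shortcut, which is often inherited from biased training data. Deep networks are not a single weighted sum, so real XAI tools need more elaborate attribution techniques.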

What This Means for You

Realistic Expectations of AI

It's crucial to have realistic expectations about the capabilities and limitations of current AI technology. Anthropomorphizing AI – attributing human-like qualities to it – can lead to disappointment and misinterpretations.

- Self-driving cars, for example, are still under development and are not fully autonomous in all situations. Over-reliance on their capabilities can be dangerous.

- AI assistants can be helpful tools, but they are not capable of understanding complex human emotions or providing truly personalized support in every situation.

The Future of AI Learning

Research into AI is constantly evolving. Advancements in neural networks, reinforcement learning, and transfer learning hold promise for creating more sophisticated and adaptable AI systems.

- Reinforcement learning allows AI to learn through trial and error, potentially leading to more robust and adaptable systems.

- Transfer learning enables AI models trained on one task to apply their knowledge to other related tasks, increasing efficiency and reducing the need for massive datasets.
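The "trial and error" idea behind reinforcement learning can be sketched with the classic multi-armed bandit, a deliberately tiny stand-in for real reinforcement-learning problems. The sketch uses an epsilon-greedy strategy, which is only one of many possible strategies. The payout probabilities, `epsilon`, step count, and seed are arbitrary assumptions chosen for illustration.

```python
import random

# Hypothetical "slot machine" environment: each arm pays 1 with a hidden probability.
# The agent is never told these numbers; it must discover them by trial and error.
TRUE_PAYOUT_PROBABILITIES = [0.2, 0.5, 0.8]

def run_epsilon_greedy(steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)  # seeded so runs are reproducible
    n_arms = len(TRUE_PAYOUT_PROBABILITIES)
    estimates = [0.0] * n_arms  # running average reward observed for each arm
    pulls = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random arm
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit: best so far
        reward = 1.0 if rng.random() < TRUE_PAYOUT_PROBABILITIES[arm] else 0.0
        pulls[arm] += 1
        # Incremental mean update: new_avg = old_avg + (reward - old_avg) / n
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]
    return estimates, pulls

estimates, pulls = run_epsilon_greedy()
print([round(e, 2) for e in estimates], pulls)
```

The agent ends up favoring the arm with the highest hidden payout without ever being told the probabilities. Yet it still has no idea why that arm pays better, which fits the article's broader point about pattern-driven "learning."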

Conclusion

AI does not learn the way humans do. Its "learning" is pattern recognition within existing data, which limits its adaptability, common-sense reasoning, and ability to generalize. Current AI technology is powerful, but users need to understand its significant limitations to avoid unrealistic expectations. Understanding the true nature of AI learning is crucial for navigating an increasingly AI-powered world. By critically evaluating the claims made about AI and its capabilities, you can make informed decisions and contribute to the responsible development and deployment of artificial intelligence.

Featured Posts

-

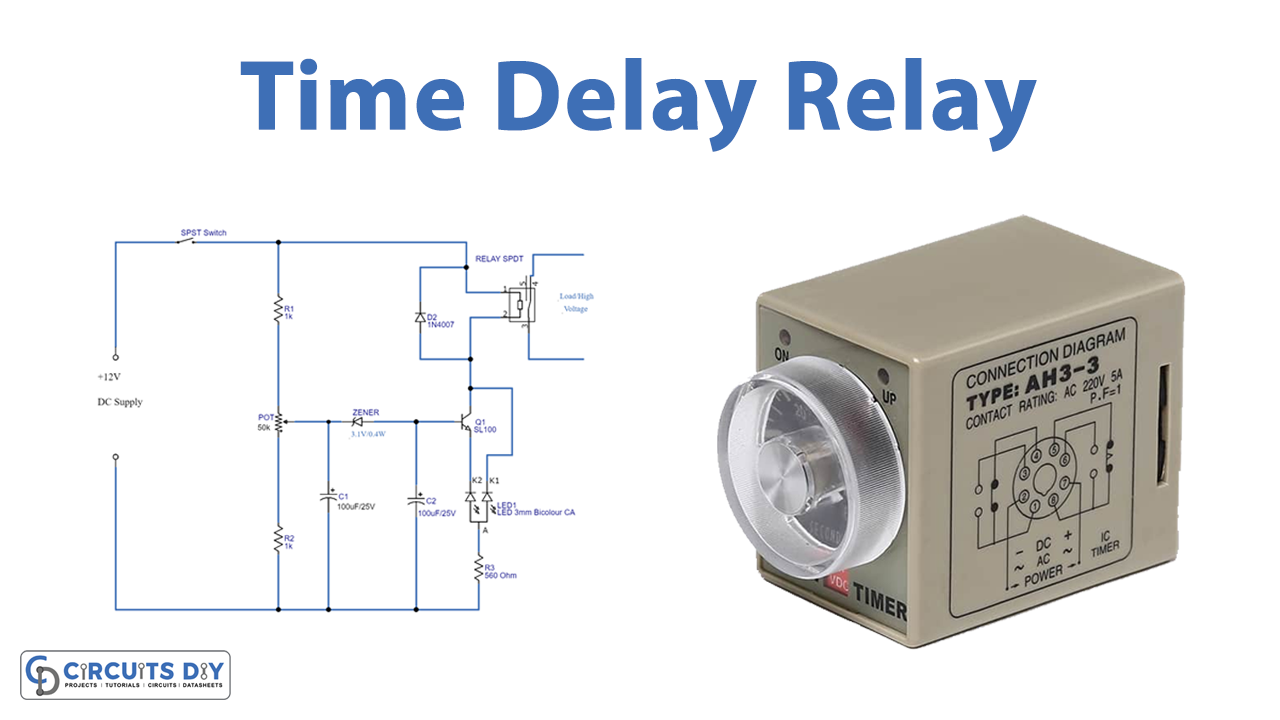

Progressive Field Weather Twins Guardians Game Start Time Delay Update April 29

May 31, 2025

Progressive Field Weather Twins Guardians Game Start Time Delay Update April 29

May 31, 2025 -

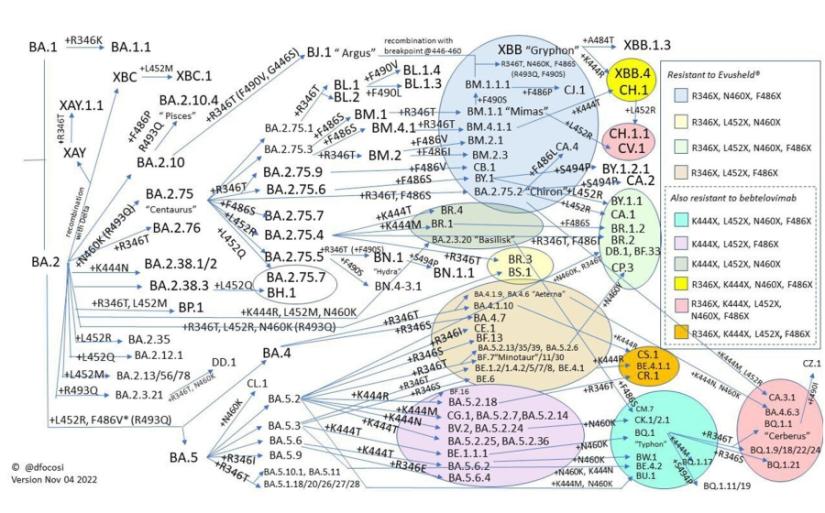

Understanding The Risk Insacogs Report On New Covid Variants Ba 1 And Lf 7 In India

May 31, 2025

Understanding The Risk Insacogs Report On New Covid Variants Ba 1 And Lf 7 In India

May 31, 2025 -

Alexander Zverev Secures Munich Semifinal Spot

May 31, 2025

Alexander Zverev Secures Munich Semifinal Spot

May 31, 2025 -

Two Week Trial Offer Free Accommodation To Attract New Residents To Germany

May 31, 2025

Two Week Trial Offer Free Accommodation To Attract New Residents To Germany

May 31, 2025 -

Flowers Miley Cyrus Czy To Zapowiedz Nowego Albumu

May 31, 2025

Flowers Miley Cyrus Czy To Zapowiedz Nowego Albumu

May 31, 2025