AI Therapy And The Surveillance State: Exploring The Risks

Data Privacy Concerns in AI Therapy Platforms

AI therapy platforms, while offering convenience and potential benefits, raise serious concerns about data privacy. The very nature of these platforms necessitates the collection of extensive personal data, creating vulnerabilities that need careful consideration.

Data Collection and Storage

AI therapy apps collect a wealth of sensitive information, including:

- Voice recordings: Detailed audio recordings of therapy sessions.

- Text messages: Written communications between the user and the AI.

- Emotional responses: Data points inferred from voice tone, word choice, and response times.

- Biometric data: Some apps may integrate with wearables to collect physiological data.

These data points, stored digitally, are vulnerable to hacking, data breaches, and unauthorized access. Many platforms lack transparency regarding their data storage practices, leaving users in the dark about the security measures in place. The absence of clear data usage policies compounds these privacy concerns, which makes secure storage of AI therapy data a central issue.
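One way a platform could reduce what is exposed in a breach is to store less and to avoid storing raw identifiers. The following is a minimal sketch of that idea, not a description of how any real app works: the field names, the `ALLOWED_FIELDS` allow-list, and both function names are hypothetical. It uses a keyed hash (HMAC-SHA256) from Python's standard library to pseudonymize the user ID. Pseudonymization is not encryption, and it lowers but does not remove re-identification risk.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields are ever written to storage.
ALLOWED_FIELDS = {"session_id", "timestamp", "mood_score"}


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Return a keyed hash of the user ID so stored records omit the raw identifier."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and replace the user ID with a pseudonym."""
    stored = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    stored["user_ref"] = pseudonymize_user_id(record["user_id"], secret_key)
    return stored
```

In this sketch, transcripts, voice recordings, and biometric readings never reach storage unless someone deliberately adds them to the allow-list, and the secret key would need to be kept separately from the stored records.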

Third-Party Data Sharing

The potential for sharing AI therapy data with third parties is particularly alarming. This data could be accessed by:

- Insurance companies: Used to assess risk and determine coverage.

- Employers: Potentially influencing hiring or promotion decisions.

- Law enforcement: Requested under warrants or court orders.

Third-party access to AI therapy data undermines patient confidentiality and raises serious ethical and legal questions. Data breaches and the misuse that can follow pose a considerable risk to individuals seeking mental healthcare.

Algorithmic Bias and Discrimination in AI Therapy

The algorithms powering AI therapy platforms are not immune to bias. In fact, they can perpetuate and amplify existing societal inequalities.

Bias in AI Models

AI models are trained on vast datasets, which may reflect existing societal biases related to:

- Race: Leading to misinterpretations of symptoms based on racial background.

- Gender: Resulting in biased diagnoses or treatment recommendations.

- Socioeconomic status: Potentially overlooking important contextual factors.

Bias of this kind can lead to inaccurate diagnoses, ineffective treatments, and further marginalization of already vulnerable communities. Achieving algorithmic fairness in AI therapy requires careful attention to data collection, model training, and ongoing monitoring. Equitable AI mental healthcare demands a proactive approach to mitigating these risks.
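Ongoing monitoring can be made concrete by comparing error rates across groups. The sketch below is one illustrative check among many possible fairness metrics: it computes the false negative rate (cases that were real but missed by the model) per group and the largest gap between groups. The record layout and function names are assumptions made for the example.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Compute the false negative rate per group.

    records: iterable of (group, actual_positive, predicted_positive) tuples.
    Groups with no actual positives are omitted from the result.
    """
    missed = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if not predicted:
                missed[group] += 1
    return {g: missed[g] / positives[g] for g in positives}


def max_gap(rates):
    """Largest difference between any two groups' rates (0.0 if there are none)."""
    values = list(rates.values())
    return max(values) - min(values) if values else 0.0
```

A large gap would suggest that the model misses symptoms more often for some groups than others, which is a signal to revisit the training data and the model rather than proof of its cause.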

Lack of Human Oversight

The reliance on AI without sufficient human oversight poses significant risks. Many situations demand the nuanced judgment and empathy of a human clinician:

- Suicidal ideation: Prompt intervention may be critical and require human assessment.

- Complex mental health conditions: AI may not be equipped to handle multifaceted cases effectively.

- Ethical dilemmas: AI may struggle to navigate complex ethical scenarios.

Without human oversight, AI therapy can lead to misdiagnosis, inappropriate treatment, and potentially dangerous outcomes. Human oversight is therefore essential to responsible development and deployment, and the field must prioritize the ethics of AI-assisted therapy when implementing these technologies.
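Human oversight can be built into a platform as an explicit routing rule rather than left to chance. The sketch below assumes a hypothetical upstream model that produces a risk score, a confidence value, and a crisis flag. The `Assessment` type, the `route` function, and the threshold values are illustrative assumptions, not clinical guidance.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    risk_score: float   # hypothetical 0..1 risk estimate from an upstream model
    confidence: float   # hypothetical 0..1 confidence in that estimate
    crisis_flag: bool   # hypothetical flag, e.g. possible suicidal ideation


def route(assessment: Assessment,
          risk_threshold: float = 0.3,
          min_confidence: float = 0.8) -> str:
    """Return 'human' when a clinician should review the case, else 'ai'."""
    if assessment.crisis_flag:
        return "human"  # crisis signals always go to a person
    if assessment.risk_score >= risk_threshold:
        return "human"  # elevated risk is escalated
    if assessment.confidence < min_confidence:
        return "human"  # an uncertain model defers to a clinician
    return "ai"
```

The design choice here is to fail toward human review: any one of the three conditions is enough to escalate, so the AI handles a case alone only when the case looks low-risk and the model is confident.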

The Potential for Misuse by Governments and Corporations

The data collected by AI therapy platforms could be misused by governments and corporations for surveillance and control.

Surveillance and Social Control

The surveillance risks of AI therapy extend beyond individual privacy violations. Governments might leverage this data for:

- Surveillance of dissidents: Identifying individuals expressing critical viewpoints.

- Social control: Targeting individuals deemed "at risk" based on their mental health data.

- Predictive policing: Using data to anticipate and prevent potential criminal behavior.

Government surveillance of mental health data represents a significant threat to civil liberties and erodes trust in mental healthcare systems.

Manipulation and Profiling

Corporations could use this data for targeted advertising and manipulation:

- Personalized advertising: Targeting individuals with mental health vulnerabilities.

- Profiling: Creating detailed psychological profiles for marketing purposes.

Manipulation of this kind raises profound ethical concerns about individual autonomy and privacy. AI-driven psychological profiling creates the potential for significant abuse. The ethical implications of AI in mental health must be considered at every stage of development and implementation.

Conclusion

AI therapy holds tremendous potential for improving access to mental healthcare. However, the risks of surveillance, loss of data privacy, algorithmic bias, and misuse by powerful entities are substantial and cannot be ignored. We need robust regulations, ethical guidelines, and transparent practices to ensure AI therapy benefits society while protecting individual rights and preventing the creation of a surveillance state. Further research and public discussion of these ethical implications are crucial to harnessing the benefits of AI therapy responsibly. Let’s work together to ensure that AI therapy remains a tool for healing, not a weapon of control.

Featured Posts

-

Microsoft Stock A Safe Haven Amidst Tariff Turmoil

May 16, 2025

Microsoft Stock A Safe Haven Amidst Tariff Turmoil

May 16, 2025 -

Yankees Vs Padres Prediction Who Will Win This Crucial Series

May 16, 2025

Yankees Vs Padres Prediction Who Will Win This Crucial Series

May 16, 2025 -

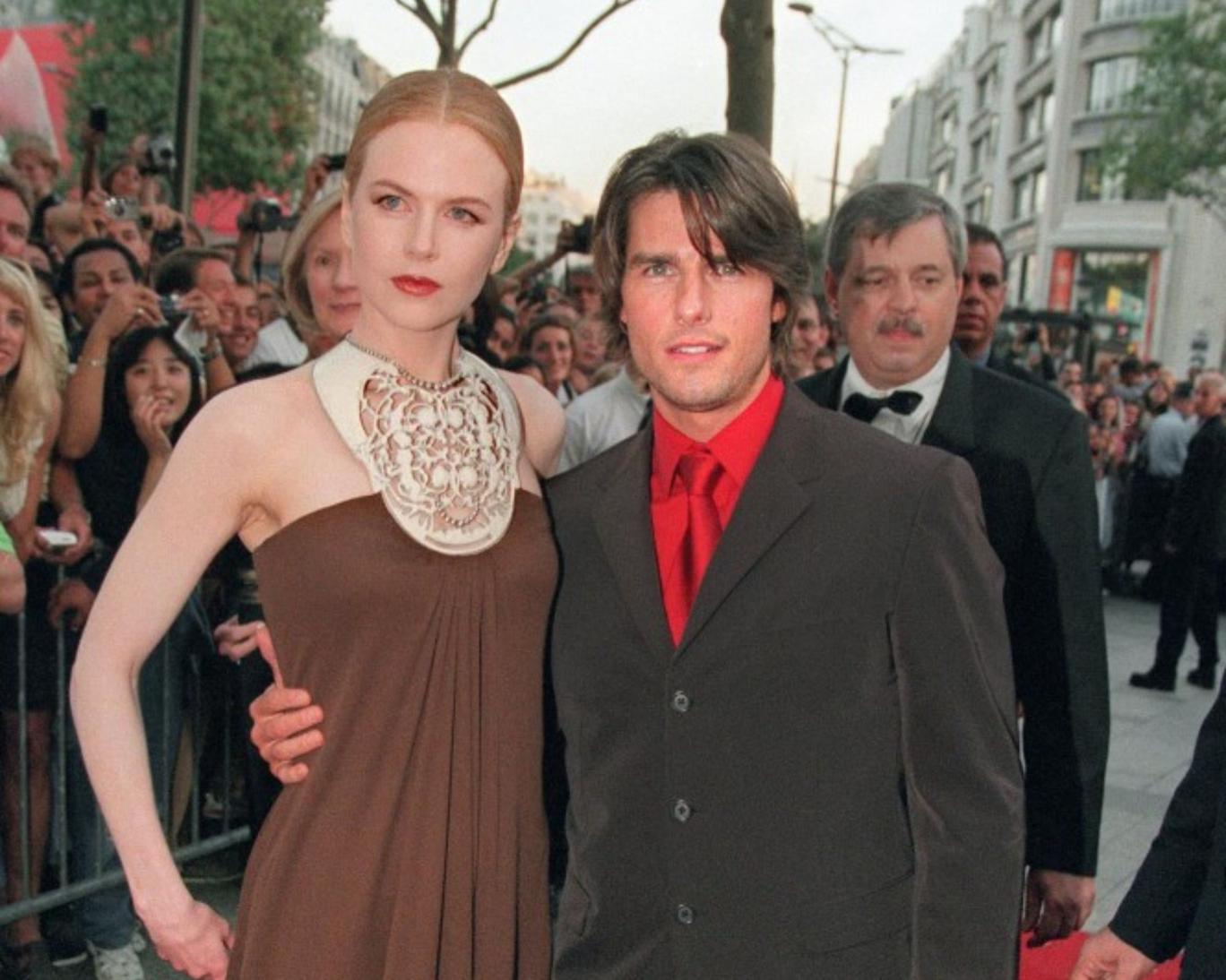

Adakar Tam Krwz Awr Ayk Khatwn Mdah Ka Hyran Kn Waqeh

May 16, 2025

Adakar Tam Krwz Awr Ayk Khatwn Mdah Ka Hyran Kn Waqeh

May 16, 2025 -

Padres And Dodgers A Clash Of Baseball Strategies

May 16, 2025

Padres And Dodgers A Clash Of Baseball Strategies

May 16, 2025 -

San Jose Earthquakes Upset Portland Timbers Ending Winning Run

May 16, 2025

San Jose Earthquakes Upset Portland Timbers Ending Winning Run

May 16, 2025