AI Therapy: Surveillance In A Police State? A Critical Examination

The rapid advancement of artificial intelligence (AI) is transforming numerous sectors, including mental healthcare. AI therapy, which promises accessible and potentially personalized treatment, is gaining traction. However, this technological leap raises serious concerns that it could be misused as a surveillance tool in a police state. This article critically examines the ethical and societal implications of AI therapy, weighing its potential benefits against its risks to individual privacy and freedom.

The Promise and Peril of AI Therapy

The integration of AI into mental healthcare presents a complex duality: immense potential benefits coupled with significant ethical and societal risks. Understanding both sides is crucial for responsible development and implementation.

Potential Benefits of AI-Powered Mental Healthcare

AI therapy offers several advantages in addressing the global mental health crisis:

- Increased Accessibility: AI-powered platforms can provide mental health services to remote or underserved communities that lack access to traditional care. This is especially vital in rural areas or developing nations with few mental health professionals.

- Personalized Treatment: AI algorithms can analyze patient data to tailor treatment plans to individual needs, preferences, and responses, potentially leading to more effective outcomes.

- 24/7 Availability and Reduced Stigma: AI-powered chatbots and apps offer support at any hour, and because they can be used privately, they may ease the stigma often associated with seeking professional help. Users can engage with therapy at their own pace and convenience, lowering practical barriers to entry.

- Systematic Data Collection: AI tools can track patient progress consistently over time, providing data for monitoring treatment effectiveness and identifying areas for improvement. Such data can supplement, though not replace, clinical judgment and inform treatment decisions.

- Early Detection and Intervention: AI algorithms may be able to detect early warning signs of mental health crises, enabling timely intervention and potentially preventing escalation. This proactive approach can be life-saving for individuals at risk.

The Surveillance Threat of AI in Mental Healthcare

While AI offers transformative potential, its application in mental healthcare raises significant surveillance and accountability concerns:

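- Data Privacy Violations: AI therapy platforms collect vast amounts of sensitive personal data, which raises substantial privacy concerns. A breach that exposes diagnoses or session transcripts could have devastating consequences for individuals, from discrimination and blackmail to damaged employment prospects.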

- Misuse by Authorities: There's a risk that law enforcement or other authorities could access and misuse this sensitive mental health data, potentially violating patient confidentiality and civil liberties.

- Lack of Algorithmic Transparency: The "black box" nature of many AI algorithms makes it difficult to understand how diagnoses and treatment recommendations are generated, raising concerns about fairness and accountability.

- Algorithmic Bias: AI algorithms trained on biased data can perpetuate and amplify existing inequalities in mental healthcare access and treatment outcomes, disproportionately affecting marginalized groups.

- Predictive Policing: The potential exists for AI-generated mental health data to be integrated into predictive policing algorithms, leading to discriminatory profiling and targeting of vulnerable populations.

Ethical Implications and Privacy Concerns

The ethical considerations surrounding AI therapy are paramount, demanding careful attention to data security and patient autonomy.

Data Security and Breach Risks

Protecting sensitive mental health data is crucial, and robust security measures are essential to reduce the risk of breaches:

- Vulnerability to Hacking: Like any networked service, AI therapy platforms are vulnerable to hacking and unauthorized access, which demands robust cybersecurity protocols.

- Encryption and Data Protection: Strong encryption of data both in transit and at rest, together with data anonymization techniques, is crucial for protecting patient confidentiality.

- Legal Frameworks and Regulations: Comprehensive legal frameworks and regulations are needed to govern the collection, storage, and use of AI therapy data, ensuring compliance and accountability.
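The following minimal Python sketch makes the anonymization point above more concrete. It shows one common technique, keyed-hash pseudonymization. Direct identifiers are dropped or replaced with an HMAC-SHA256 token before session data reaches analytics systems. The field names, the in-memory demo key, and the `pseudonymize` and `strip_direct_identifiers` helpers are hypothetical illustrations, not part of any particular platform.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key. A real deployment would keep it in a separate
# key-management service, away from the data it protects. Generated here for demo only.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Illustrative set of fields treated as direct identifiers (an assumption).
DIRECT_IDENTIFIERS = {"patient_email", "name", "phone"}


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token.

    The same identifier always maps to the same token under the same key,
    so records can still be linked for analysis, but the token cannot be
    recomputed by someone who does not hold the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    """Return a new record with direct identifiers removed and a token added.

    Assumes every record carries a "patient_email" field; the input dict is not modified.
    """
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_token"] = pseudonymize(record["patient_email"], key)
    return cleaned


if __name__ == "__main__":
    session = {
        "patient_email": "alex@example.com",
        "name": "Alex",
        "phone": "555-0100",
        "mood_score": 4,
    }
    print(strip_direct_identifiers(session))
```

Pseudonymization is weaker than true anonymization. Anyone holding the key can recompute tokens for known identifiers and re-link records to people. Free-text fields such as session notes may also still identify a patient. Under the EU's GDPR, pseudonymized data is still treated as personal data. This is why key custody, access controls, and the legal frameworks mentioned above matter as much as the hashing itself.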

Informed Consent and Patient Autonomy

True informed consent is essential for ethical AI therapy:

- Transparency and Understanding: Patients must fully understand how their data will be collected, used, and protected before consenting to AI therapy.

- Control Over Data: Patients should have control over their data, with the right to access, modify, or delete their information.

- Meaningful Consent: Consent must be freely given, informed, and specific to each aspect of data usage, not a blanket agreement.
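The principle of meaningful, purpose-specific consent can also be expressed in software. The sketch below is a hypothetical Python model, not any real platform's design. It records consent separately for each purpose and refuses any use that was not explicitly granted (default deny). It also lets the patient revoke a grant at any time. The `Purpose` values, `ConsentRecord`, and `use_data` are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict


class Purpose(Enum):
    # Illustrative purposes; a real platform would define its own list.
    TREATMENT = "treatment"
    PRODUCT_RESEARCH = "product_research"
    SHARING_WITH_THIRD_PARTIES = "sharing_with_third_parties"


@dataclass
class ConsentRecord:
    patient_token: str
    # Purposes the patient explicitly opted into, mapped to the time of each grant.
    granted: Dict[Purpose, datetime] = field(default_factory=dict)

    def grant(self, purpose: Purpose) -> None:
        self.granted[purpose] = datetime.now(timezone.utc)

    def revoke(self, purpose: Purpose) -> None:
        # Removing a grant that does not exist is a no-op rather than an error.
        self.granted.pop(purpose, None)

    def allows(self, purpose: Purpose) -> bool:
        # Default deny: anything not explicitly granted is refused.
        return purpose in self.granted


def use_data(consent: ConsentRecord, purpose: Purpose) -> str:
    """Gate every data use on a matching, purpose-specific grant."""
    if not consent.allows(purpose):
        raise PermissionError(f"No consent recorded for {purpose.value}")
    return f"processing for {purpose.value}"


if __name__ == "__main__":
    consent = ConsentRecord(patient_token="example-token")
    consent.grant(Purpose.TREATMENT)
    print(use_data(consent, Purpose.TREATMENT))  # processing for treatment
    try:
        use_data(consent, Purpose.PRODUCT_RESEARCH)
    except PermissionError as err:
        print(err)  # No consent recorded for product_research
```

The design choice that matters most here is the default. A missing grant means refusal, so any new data use (for example, sharing with third parties) requires a fresh, specific opt-in rather than riding on a blanket agreement.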

AI Therapy and the Police State: A Realistic Scenario?

The potential for AI therapy data to be used for surveillance and social control necessitates critical examination.

Integration with Law Enforcement and Predictive Policing

The integration of mental health data with law enforcement systems raises significant ethical dilemmas:

- Predictive Policing Risks: AI-driven predictive policing based on mental health data could lead to discriminatory targeting of individuals.

- Profiling Concerns: Profiling individuals based on their mental health data is ethically problematic and raises concerns about bias and discrimination.

- Vulnerable Populations: Marginalized communities could be disproportionately affected by such practices, exacerbating existing inequalities.

Erosion of Civil Liberties and Freedoms

The misuse of AI therapy data could significantly erode civil liberties:

- Surveillance and Social Control: AI therapy could be used as a tool for mass surveillance and social control, chilling free expression and dissent.

- Discouraging Help-Seeking: Concerns about privacy could deter individuals from seeking necessary mental healthcare, exacerbating mental health issues.

- Oversight and Accountability: Strong oversight mechanisms and ethical guidelines are crucial to prevent the misuse of AI therapy and protect individual freedoms.

Conclusion

AI therapy is a double-edged sword. It offers immense potential for widening access to mental healthcare and personalizing it. Yet its risks cannot be ignored, particularly the possibility that misused therapy data could feed surveillance and help entrench a police state. Addressing these ethical and privacy concerns is paramount. Robust regulations, transparent algorithms, and strong data protection measures are essential to ensure that AI therapy benefits society without compromising individual liberties. We must critically examine how AI therapy is developed and deployed, to prevent its misuse and to protect the fundamental rights of people seeking mental health support. Let’s work together to ensure that AI therapy remains a tool for healing, not a weapon of surveillance in a police state. It should put ethical considerations and patient well-being above all else.

Featured Posts

-

Gol Ovechkina Ne Pomog Vashingtonu V Pley Off N Kh L

May 16, 2025

Gol Ovechkina Ne Pomog Vashingtonu V Pley Off N Kh L

May 16, 2025 -

Belgica Vs Portugal 0 1 Cronica Goles Y Analisis Del Partido

May 16, 2025

Belgica Vs Portugal 0 1 Cronica Goles Y Analisis Del Partido

May 16, 2025 -

Nba Playoffs Jimmy Butlers Game 6 Predictions For Rockets Vs Warriors

May 16, 2025

Nba Playoffs Jimmy Butlers Game 6 Predictions For Rockets Vs Warriors

May 16, 2025 -

Microsoft Stock A Safe Haven Amidst Tariff Turmoil

May 16, 2025

Microsoft Stock A Safe Haven Amidst Tariff Turmoil

May 16, 2025 -

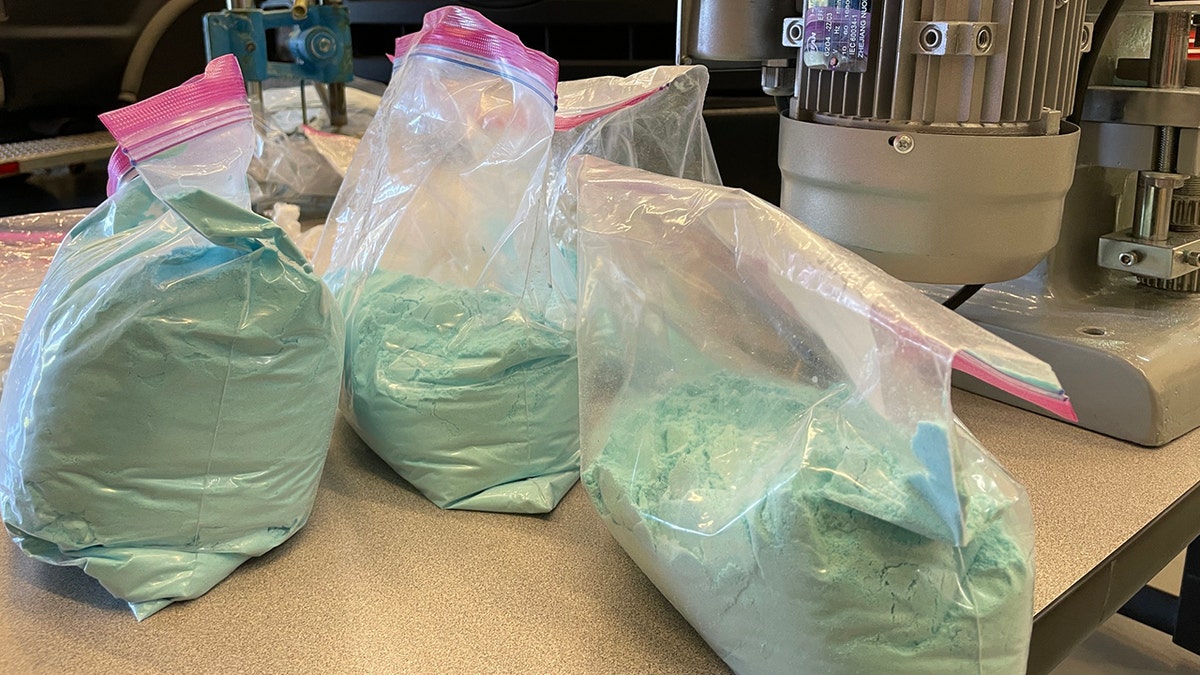

Chinas Fentanyl Problem A Price To Pay Former Us Envoy Weighs In

May 16, 2025

Chinas Fentanyl Problem A Price To Pay Former Us Envoy Weighs In

May 16, 2025