Algorithms, Radicalization, And Mass Violence: Who Is Responsible?

The Role of Algorithms in Amplifying Extremist Content

Recommendation and ranking algorithms are designed to maximize user engagement, and in doing so they can inadvertently contribute to the spread of extremist ideologies. This occurs through several key mechanisms:

Algorithmic Bias and Filter Bubbles

Algorithms, often trained on biased data, can create echo chambers and filter bubbles. This means users are primarily exposed to information confirming their existing beliefs, even if those beliefs are extremist. This reinforcement can lead to radicalization.

- Examples of algorithmic bias: Algorithms may prioritize sensationalist or emotionally charged content, disproportionately amplifying extremist viewpoints.

- Studies on echo chambers: Research on algorithmic personalization has linked it to the formation of echo chambers, where extremist views are reinforced and alternative perspectives are rarely surfaced.

- Impact of personalized recommendations on radicalization: Personalized recommendations can lead individuals down a "rabbit hole" of increasingly extreme content, accelerating the radicalization process.
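The "rabbit hole" dynamic described above can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not a model of any real platform: content sits on a made-up integer "extremity" scale from 0 to 10, the hypothetical `predicted_engagement` score rewards items close to the user's current taste plus a `charge_bonus` for more emotionally charged items, and the user's taste simply shifts to whatever was last consumed.

```python
def predicted_engagement(user_pos, item, charge_bonus):
    """Hypothetical engagement score: items near the user's current
    taste score higher, and more charged items get an extra bonus."""
    closeness = -(item - user_pos) ** 2
    return closeness + charge_bonus * item


def simulate_drift(start_pos, catalog, steps, charge_bonus):
    """Repeatedly recommend the item with the highest predicted engagement.

    Assumption: the user's taste moves to the item just consumed.
    Returns the list of recommended extremity levels, one per step.
    """
    items = list(catalog)
    pos = start_pos
    history = []
    for _ in range(steps):
        best = max(items, key=lambda x: predicted_engagement(pos, x, charge_bonus))
        history.append(best)
        pos = best  # taste follows consumption (toy assumption)
    return history


if __name__ == "__main__":
    levels = range(11)  # extremity levels 0..10
    print(simulate_drift(2, levels, steps=5, charge_bonus=2))
    print(simulate_drift(2, levels, steps=5, charge_bonus=0))
```

With a positive `charge_bonus`, each recommendation sits one step beyond the user's current position, so the sequence climbs steadily. With the bonus set to zero, the same ranking rule keeps the user where they started. No single step looks dramatic, which is part of why this kind of drift is hard to notice from the inside.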

Recommendation Systems and the Spread of Misinformation

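Recommendation algorithms are designed to keep users engaged, not to spread falsehoods, but because misinformation and propaganda tend to provoke strong reactions, these systems often end up favoring them. Personalized delivery can make harmful content far more effective than it would be in traditional media, since each user sees the material most likely to resonate with them.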

- Case studies of how algorithms amplified extremist narratives: Several documented cases demonstrate how algorithms have amplified extremist narratives, leading to real-world violence.

- The role of clickbait and sensationalism: Clickbait headlines and sensationalized content often attract more clicks, inadvertently boosting the visibility of extremist materials.

- The difficulty of content moderation: Moderating online content at scale is extremely challenging, making it difficult for platforms to effectively remove extremist materials before they reach a wide audience.

The "Algorithmic Acceleration" of Radicalization

Algorithms can significantly accelerate the radicalization process. They connect individuals with like-minded extremists and provide access to resources promoting violence.

- Examples of online communities used for radicalization: Online forums, chat groups, and social media groups provide breeding grounds for extremist ideologies.

- The role of online forums and chat groups: These spaces offer anonymity and allow for the easy dissemination of extremist propaganda and recruitment efforts.

- The impact of anonymity: Anonymity online emboldens individuals to express extremist views and engage in harmful behavior that they might not otherwise consider.

The Responsibility of Social Media Platforms

Social media platforms bear significant responsibility in preventing the spread of extremist content. However, the task is complex and fraught with ethical and legal challenges.

Platform Liability and Content Moderation

Social media companies face increasing pressure to moderate content effectively, balancing freedom of speech with the need to prevent violence. The legal frameworks surrounding online hate speech and the liability of platforms remain a contentious area.

- Challenges of content moderation: The sheer volume of content uploaded daily makes manual moderation nearly impossible, creating a constant struggle against harmful content.

- Legal frameworks concerning online hate speech: Laws vary significantly across jurisdictions, creating challenges for global platforms in enforcing consistent standards.

- The debate around freedom of speech vs. public safety: Striking a balance between these competing values is a constant challenge for both platforms and governments.

Transparency and Algorithmic Accountability

The lack of transparency around algorithm design hinders efforts to understand and mitigate the risks. Greater accountability is needed.

- Calls for algorithmic audits: Independent audits of algorithms can help identify biases and vulnerabilities that could be exploited to spread extremism.

- The need for public access to algorithm information: Greater transparency in algorithm design could allow researchers and policymakers to better understand how these systems operate and identify potential harms.

- The role of government regulation: Government regulation may be necessary to ensure greater transparency and accountability from social media companies.
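As one illustration of what an independent audit might measure, the sketch below compares how often flagged content appears in a set of recommendations with how often it appears in the underlying catalog. The function name `amplification_ratio`, the `is_flagged` predicate, and the sample data are all hypothetical; a real audit would need access to platform data and a defensible definition of "flagged".

```python
def amplification_ratio(recommended, catalog, is_flagged):
    """Share of flagged items among recommendations, divided by the
    share of flagged items in the full catalog.

    A value above 1.0 suggests the recommender surfaces flagged
    content more often than its baseline prevalence would predict.
    """
    if not recommended or not catalog:
        raise ValueError("recommended and catalog must be non-empty")
    rec_share = sum(1 for item in recommended if is_flagged(item)) / len(recommended)
    base_share = sum(1 for item in catalog if is_flagged(item)) / len(catalog)
    if base_share == 0:
        raise ValueError("catalog contains no flagged items; ratio is undefined")
    return rec_share / base_share


if __name__ == "__main__":
    # Made-up data: item IDs 0..9, with IDs 8 and 9 treated as flagged.
    catalog = list(range(10))
    flagged_ids = {8, 9}
    recommended = [1, 8, 3, 9, 5]
    ratio = amplification_ratio(recommended, catalog, lambda i: i in flagged_ids)
    print(f"amplification ratio: {ratio:.2f}")
```

A single ratio like this says nothing about why flagged content is over-represented, so it is better read as a starting point for investigation than as a verdict.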

Profit vs. Public Safety

The business model of many social media platforms incentivizes engagement, even if that engagement involves the spread of harmful content. This creates a fundamental conflict between profit maximization and public safety.

- The business model of social media platforms: The "attention economy" model prioritizes user engagement above all else, potentially at the expense of safety.

- The pressure to prioritize growth over safety: The pressure to attract and retain users can lead to prioritizing growth over safety measures.

- Ethical considerations for platform owners: Platform owners have an ethical responsibility to minimize the harms caused by their platforms.

The Role of Individual Users and Cognitive Biases

Individual users also play a significant role. Cognitive biases and a lack of media literacy contribute to vulnerability to extremist ideologies.

Confirmation Bias and the Search for Belonging

Cognitive biases, such as confirmation bias (the tendency to favor information confirming existing beliefs), make individuals susceptible to extremist ideologies. The appeal of belonging to a group can also be a powerful driver of radicalization.

- Psychological factors influencing radicalization: Understanding these factors is crucial in developing effective counter-radicalization strategies.

- The appeal of extremist groups: Extremist groups often offer a sense of belonging and purpose, which can be particularly attractive to individuals feeling marginalized or disenfranchised.

- The influence of online communities on identity formation: Online communities can play a significant role in shaping individuals' identities and beliefs.

Media Literacy and Critical Thinking

Improving media literacy and critical thinking skills is essential in combating online radicalization. Individuals must be equipped to critically evaluate online information and identify misinformation.

- Strategies for identifying misinformation: Education on identifying misinformation and propaganda techniques is vital.

- Tips for evaluating online sources: Learning to assess the credibility and bias of online sources is a crucial skill.

- The role of education in fostering critical thinking: Educational programs can equip individuals with the critical thinking skills needed to resist extremist narratives.

Personal Responsibility in Online Spaces

Users have a responsibility to engage critically with online information and report harmful content. This includes understanding the ethical implications of online participation and acting responsibly.

- The ethics of online participation: Users should consider the potential consequences of their actions online and strive to create a more positive and inclusive online environment.

- The importance of reporting hate speech and extremist content: Reporting such content is crucial in helping platforms remove harmful materials.

- The role of bystanders: Bystanders have a responsibility to challenge extremist views and report harmful content.

The Governmental Role in Regulation and Prevention

Governments play a crucial role in regulating online extremism and protecting vulnerable individuals. This includes legislation, funding for research, and international collaboration.

Legislation and Policy Responses

Governments must enact and enforce legislation that addresses online hate speech, terrorism, and the spread of extremist ideologies. International cooperation is critical due to the global nature of the internet.

- Examples of laws addressing online hate speech and terrorism: Existing laws vary widely in their effectiveness and scope.

- International cooperation on combating online extremism: International collaboration is essential to address the transnational nature of online extremism.

- The challenges of regulating the internet: Regulating the internet effectively without impinging on freedom of speech is a significant challenge.

Funding for Research and Intervention Programs

Increased funding is needed for research into online radicalization and the development of effective prevention and intervention programs.

- Examples of successful intervention programs: Successful programs often involve a multi-faceted approach combining education, community engagement, and mental health support.

- The need for multi-disciplinary research: Addressing this complex issue requires collaboration between researchers from various disciplines, including computer science, psychology, sociology, and law.

- The importance of prevention efforts: Preventing radicalization before it takes hold is generally regarded as more effective than trying to reverse it after the fact.

International Collaboration and Information Sharing

International collaboration is crucial for sharing information about effective strategies and coordinating efforts to combat online extremism.

- The challenges of cross-border collaboration: Differences in legal frameworks and approaches can create challenges for cross-border collaboration.

- The role of international organizations: International organizations can play a key role in facilitating information sharing and coordinating efforts.

- The importance of information sharing: Sharing best practices and lessons learned across countries is vital for developing more effective strategies.

Conclusion

The relationship between algorithms, radicalization, and mass violence is complex, and there are no easy answers. Responsibility is shared: algorithm designers must build systems that prioritize safety, social media platforms must implement robust content moderation strategies, individual users must cultivate critical thinking skills, and governments must create effective regulations and support research. Because these factors interact, addressing them requires a collaborative effort. Let's work together to hold all parties accountable and prevent future tragedies fueled by unchecked online extremism. That means promoting media literacy, advocating for responsible algorithm design, and supporting policies that address online extremism effectively.

Featured Posts

-

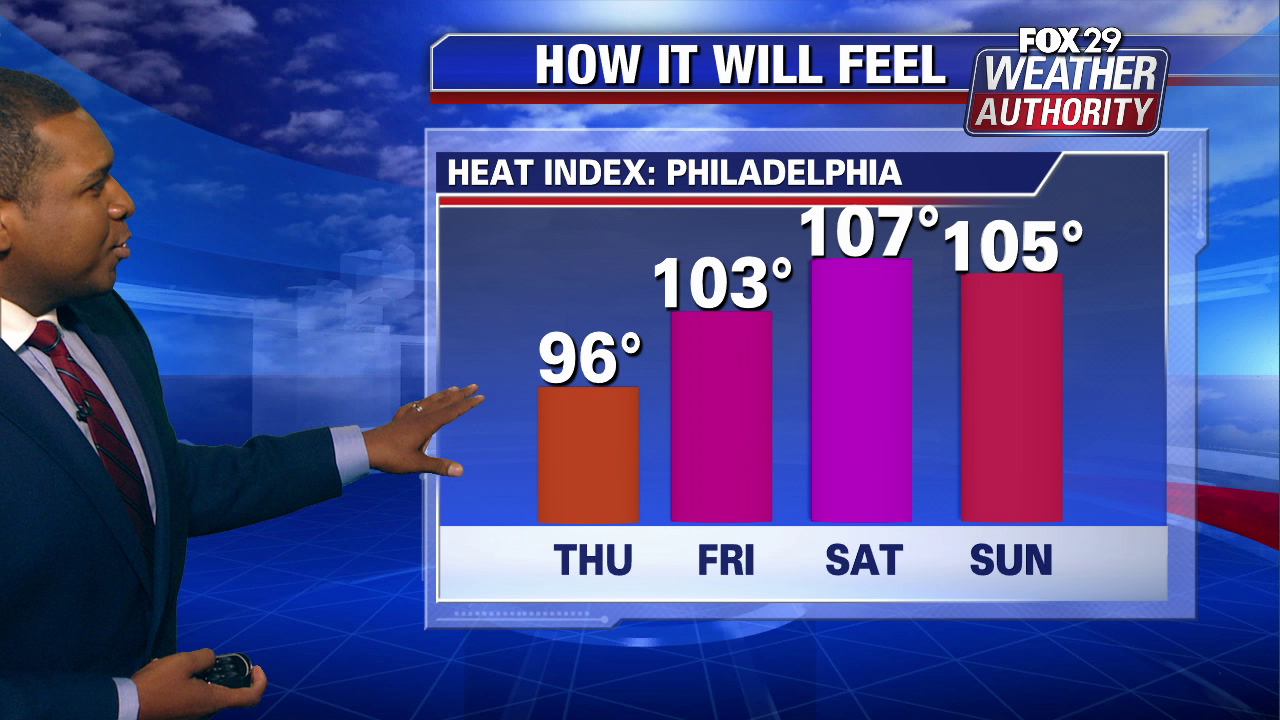

Reasons For The Omission Of Excessive Heat Warnings In Weather Predictions

May 30, 2025

Reasons For The Omission Of Excessive Heat Warnings In Weather Predictions

May 30, 2025 -

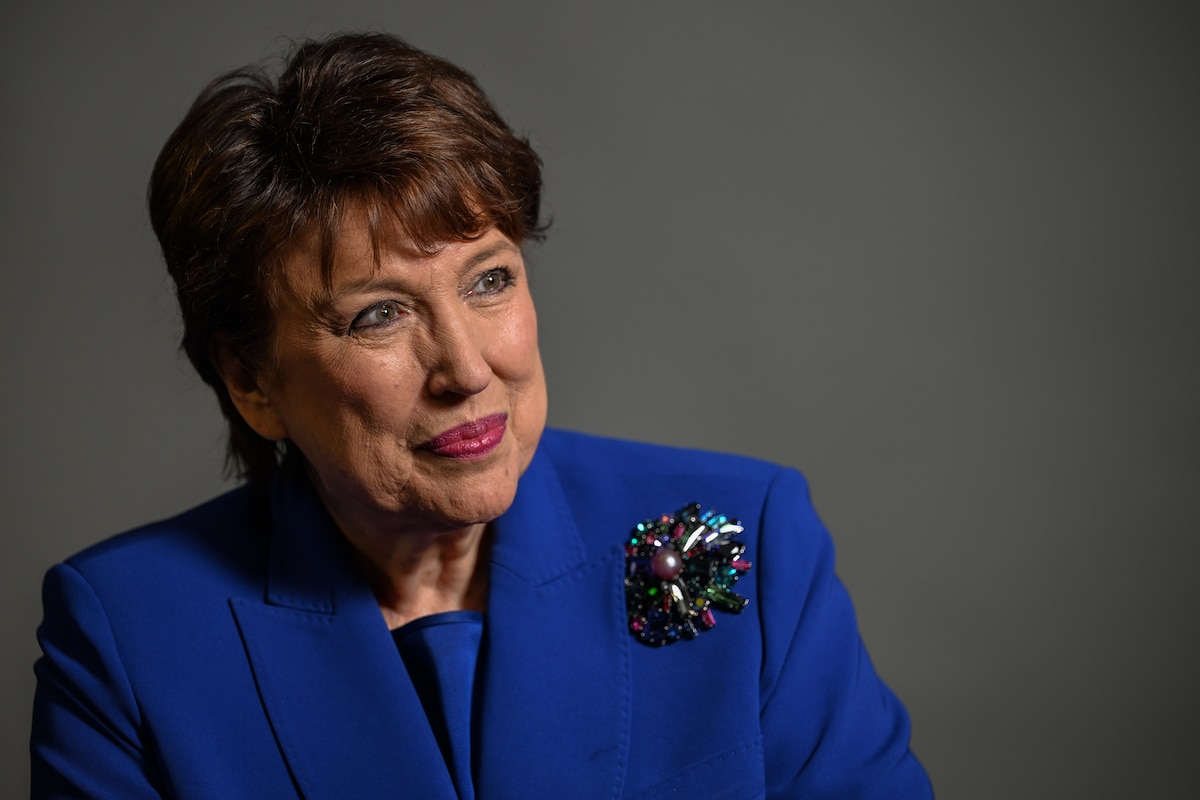

Analyse Du Commentaire De Jacobelli Sur La Situation Juridique De Marine Le Pen

May 30, 2025

Analyse Du Commentaire De Jacobelli Sur La Situation Juridique De Marine Le Pen

May 30, 2025 -

Ticketmaster Y Su Virtual Venue Una Nueva Era En La Compra De Entradas

May 30, 2025

Ticketmaster Y Su Virtual Venue Una Nueva Era En La Compra De Entradas

May 30, 2025 -

Roland Garros Ruud Withdraws After Knee Pain Loses To Borges

May 30, 2025

Roland Garros Ruud Withdraws After Knee Pain Loses To Borges

May 30, 2025 -

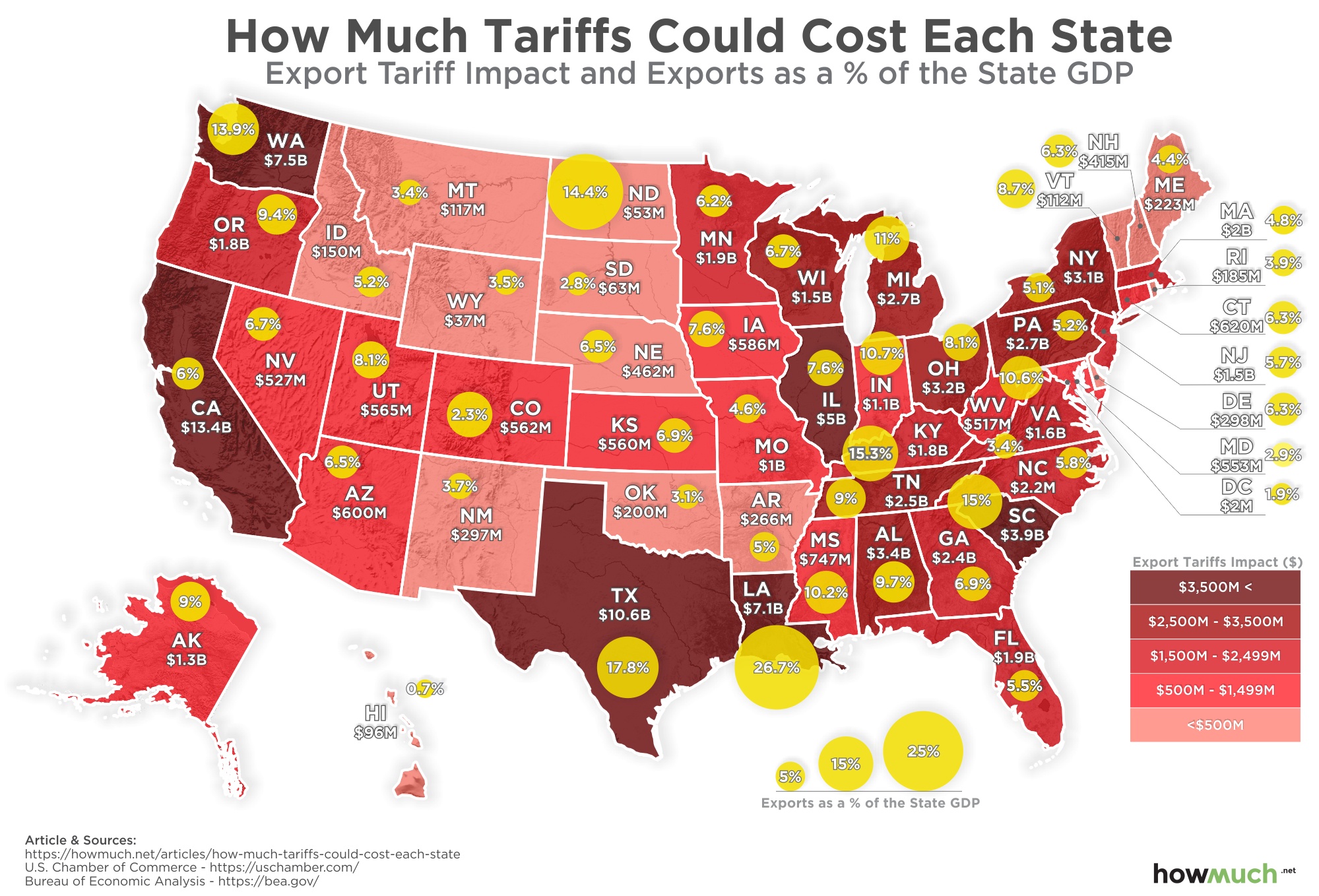

Southeast Asia Solar Exports Face New Us Tariffs Industry Implications

May 30, 2025

Southeast Asia Solar Exports Face New Us Tariffs Industry Implications

May 30, 2025

Latest Posts

-

Doubleheader Details Released By Tigers After Fridays Postponed Game

May 31, 2025

Doubleheader Details Released By Tigers After Fridays Postponed Game

May 31, 2025 -

Musician Jack Whites Guest Spot On Tigers Game Broadcast Baseball And Cooperstown

May 31, 2025

Musician Jack Whites Guest Spot On Tigers Game Broadcast Baseball And Cooperstown

May 31, 2025 -

Detroit Tigers Game Features Jack White A Conversation On Baseball And Cooperstown

May 31, 2025

Detroit Tigers Game Features Jack White A Conversation On Baseball And Cooperstown

May 31, 2025 -

Jack White Joins Detroit Tigers Broadcast Hall Of Fame Talk And Baseball Insights

May 31, 2025

Jack White Joins Detroit Tigers Broadcast Hall Of Fame Talk And Baseball Insights

May 31, 2025 -

Jack Whites Detroit Tigers Broadcast Appearance Discussing Baseball And The Hall Of Fame

May 31, 2025

Jack Whites Detroit Tigers Broadcast Appearance Discussing Baseball And The Hall Of Fame

May 31, 2025