The Illusion Of Intelligence: Unveiling The Reality Of AI's "Thought" Processes

AI's Dependence on Data: The Foundation of "Intelligence"

AI's "intelligence" rests on massive datasets. Machine learning and deep learning algorithms, the cornerstones of modern AI, are essentially sophisticated statistical engines that identify patterns in enormous amounts of data. As a rule, the more relevant, high-quality data these algorithms are trained on, the better they perform specific tasks. This data-driven nature is crucial to understanding the illusion of intelligence.

- Examples of large datasets: ImageNet, a database of over 14 million labeled images, is frequently used to train image recognition algorithms. Similarly vast text datasets exist for natural language processing (NLP), enabling AI to process and generate human language.

- The impact of data bias: AI systems are only as good as the data they are trained on. Biased datasets can lead to biased AI outputs, perpetuating and even amplifying existing societal inequalities. This highlights a critical limitation of the current state of AI.

- Limitations with unseen data: When faced with data that differs significantly from its training data (known as "out-of-distribution" data), AI's performance can decline drastically. This limited ability to generalize knowledge and adapt to novel situations exposes the artificial nature of its "intelligence."
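The out-of-distribution problem can be shown with a toy example. The sketch below is only an illustration, not a description of how any production system works: it fits a straight line to the curve y = x² using points between 0 and 1, then asks the same line to predict values between 5 and 6. The function names, the choice of curve, and both ranges are assumptions made for this example.

```python
# Toy illustration of out-of-distribution failure (all names are hypothetical).

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_abs_error(slope, intercept, xs, ys):
    """Average absolute gap between the line's predictions and the true values."""
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)


def true_fn(x):
    """The real relationship the model is trying to learn."""
    return x * x


train_xs = [i / 10 for i in range(11)]      # training range: 0.0 to 1.0
ood_xs = [5 + i / 10 for i in range(11)]    # unseen range: 5.0 to 6.0

slope, intercept = fit_line(train_xs, [true_fn(x) for x in train_xs])

in_dist_error = mean_abs_error(slope, intercept, train_xs, [true_fn(x) for x in train_xs])
ood_error = mean_abs_error(slope, intercept, ood_xs, [true_fn(x) for x in ood_xs])

print(f"error on the training range: {in_dist_error:.3f}")
print(f"error on the unseen range:   {ood_error:.3f}")
```

Inside the training range the line is a passable approximation. Outside it, the error grows by orders of magnitude, because the model only captured the pattern present in the data it saw.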

The Algorithmic Reality: Behind the Curtain of AI "Thinking"

Contrary to popular imagination, AI doesn't "think" in the human sense. It doesn't possess consciousness, emotions, or genuine understanding. Instead, AI operates by executing algorithms: precise computational procedures, often with parameters fitted to training data, that dictate how it processes information and generates outputs.

- AI as a sophisticated calculator: Think of AI as an incredibly advanced calculator capable of performing mind-bogglingly complex computations. While it can produce impressive results, it lacks the fundamental understanding and reasoning capabilities of a human mind.

- Algorithm types and information processing: Algorithms like decision trees, support vector machines, and neural networks are used in different AI applications. Each processes input data according to its own structure and the parameters it fitted during training, ultimately arriving at a conclusion or prediction.

- Probability and statistical inference: AI's decision-making often relies on probability and statistical inference. It identifies patterns and trends in data, then uses these to make predictions or classifications, often expressed as probabilities rather than certainties.
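To make the point about probabilities concrete, here is a minimal sketch of one common way a classifier turns raw scores into probabilities, the softmax function. The class labels and score values are invented for illustration; a real system would produce the scores from a trained model.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "fox"]   # hypothetical classes
raw_scores = [2.0, 1.0, 0.1]     # hypothetical model outputs

probabilities = softmax(raw_scores)

# The "decision" is simply the label with the highest probability.
prediction = labels[probabilities.index(max(probabilities))]

for label, p in zip(labels, probabilities):
    print(f"{label}: {p:.2f}")
print(f"prediction: {prediction}")
```

The output is a ranking of likelihoods, not a statement of belief. The system picks "cat" because that score is largest, not because it understands what a cat is.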

Narrow vs. General AI: Understanding the Scope of AI Capabilities

It's crucial to differentiate between two types of AI: narrow (weak) AI and general (strong) AI. Current AI systems are predominantly narrow AI, designed to excel at specific tasks. General AI, on the other hand, would possess human-level intelligence and adaptability.

- Examples of narrow AI: Image recognition systems, spam filters, and recommendation engines are all examples of narrow AI, excelling in their respective domains but lacking the broader cognitive abilities of humans.

- Challenges and ethical implications of general AI: The development of general AI presents immense technological challenges and significant ethical considerations. Questions of control, safety, and potential societal disruption must be addressed proactively.

- Current research in general AI: Research into general AI is ongoing, with various approaches being explored, including advancements in neural networks, reinforcement learning, and cognitive architectures. However, true general AI remains a distant prospect.

The Ethical Implications of Misinterpreting AI Intelligence

Anthropomorphizing AI—attributing human-like qualities to it—can lead to unrealistic expectations and potentially harmful consequences. Overestimating its capabilities can result in over-reliance and a failure to critically assess its outputs.

- Overreliance in critical decision-making: Depending solely on AI for critical decisions without human oversight can be risky, especially when the AI's limitations are not fully appreciated.

- Transparency and explainability: Ensuring transparency and explainability in AI systems is vital to building trust and understanding their decision-making processes. "Black box" AI systems, where the decision-making process is opaque, raise serious concerns.

- Responsible AI development and deployment: Responsible AI development requires careful consideration of ethical implications, bias mitigation, and robust testing to minimize potential harm.

Conclusion: Separating Fact from Fiction in AI "Thought"

This article has explored the "Illusion of Intelligence" surrounding AI, revealing its dependence on data and algorithms, its current limitations, and the ethical responsibilities surrounding its development and deployment. While AI's capabilities are impressive, it's crucial to remember that it operates according to algorithms and statistical patterns learned from data, and that it lacks genuine understanding or consciousness. Today's AI systems are more akin to sophisticated tools than to sentient beings. Continue to explore the fascinating world of AI, but critically examine claims of AI intelligence and seek credible sources to understand its true capabilities. Understanding the limitations of AI is critical to harnessing its potential responsibly and avoiding the pitfalls of the illusion of intelligence.

Featured Posts

-

The D C Blackhawk Passenger Jet Crash A Comprehensive Report

Apr 29, 2025

The D C Blackhawk Passenger Jet Crash A Comprehensive Report

Apr 29, 2025 -

Urgent Appeal Help Find Missing British Paralympian In Las Vegas

Apr 29, 2025

Urgent Appeal Help Find Missing British Paralympian In Las Vegas

Apr 29, 2025 -

Are Stretched Stock Market Valuations Justified Bof As View

Apr 29, 2025

Are Stretched Stock Market Valuations Justified Bof As View

Apr 29, 2025 -

Top Universities Unite In Private Collective Against Trump Policies

Apr 29, 2025

Top Universities Unite In Private Collective Against Trump Policies

Apr 29, 2025 -

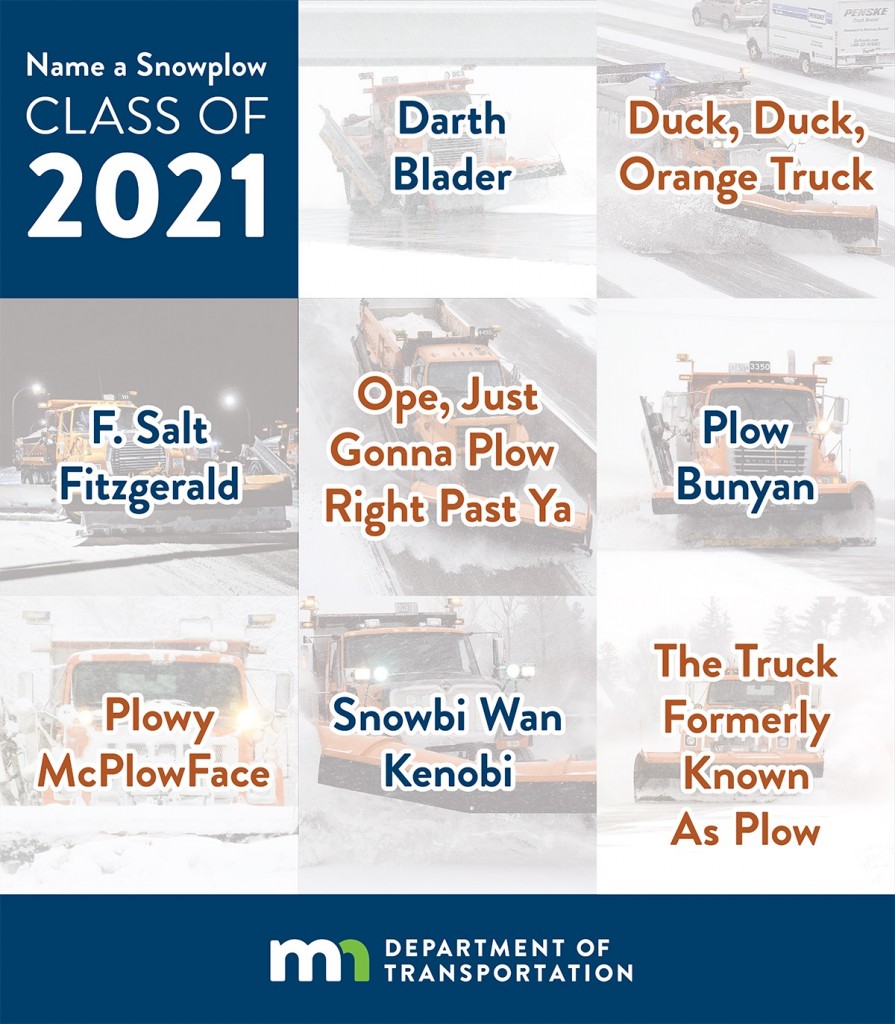

2024 Minnesota Snow Plow Naming Contest Winners Revealed

Apr 29, 2025

2024 Minnesota Snow Plow Naming Contest Winners Revealed

Apr 29, 2025