The Limits Of AI Learning: A Guide To Responsible AI Development And Deployment

Data Dependency and Bias in AI Systems

AI systems, at their core, are trained on data. The quality, quantity, and representativeness of this data directly affect the system's performance and accuracy. A crucial part of understanding the limits of AI learning is recognizing the profound influence of data bias. Bias can creep in at several stages, including data collection, labeling, model design, and deployment, and can lead to unfair or discriminatory outcomes.

Three common types of bias shape AI outcomes:

- Sampling Bias: This occurs when the training data doesn't accurately reflect the real-world population, leading to skewed predictions. For example, an AI trained primarily on data from one demographic might perform poorly on others.

- Algorithmic Bias: This arises from the design of the algorithm itself, even when the data appears unbiased. Certain algorithms might inherently favor specific outcomes or amplify existing biases in the data.

- Measurement Bias: This occurs when the data collection process itself is flawed, introducing systematic errors. For example, biased survey questions can lead to biased data.
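A simple first check for sampling bias is to compare how often each group appears in the training data with that group's share of the population the system will serve. The sketch below assumes you already have one group label per training example and reference population shares from some external source; the function name `representation_gaps`, the toy data, and the 0.1 flagging threshold are illustrative assumptions, not a standard.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return, per group, the share in the training data minus the reference share.

    samples: one group label per training example.
    reference_shares: group -> expected share in the target population.
    """
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical data: group "B" is half the population but only 10% of the training set.
training_groups = ["A"] * 90 + ["B"] * 10
population = {"A": 0.5, "B": 0.5}

gaps = representation_gaps(training_groups, population)
for group, gap in gaps.items():
    # The -0.1 threshold is an arbitrary illustration; choose one that fits your context.
    flag = "UNDER-REPRESENTED" if gap < -0.1 else "ok"
    print(f"{group}: {gap:+.2f} {flag}")
```

A large negative gap does not prove the resulting model will be unfair, but it shows where to look first.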

Real-world examples of biased AI systems abound. Facial recognition systems have shown higher error rates for individuals with darker skin tones, highlighting the consequences of biased training data. Loan application algorithms have been found to discriminate against certain demographic groups due to algorithmic bias. These instances underscore the urgent need to address bias in AI systems.
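The facial recognition example shows why evaluation should be broken down by group: an acceptable overall error rate can hide a much higher rate for one subgroup. This minimal sketch computes the misclassification rate separately for each group; `error_rate_by_group` and the evaluation data are hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(y_true, y_pred, groups):
    """Misclassification rate computed separately for each group."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        errors[group] += int(truth != pred)
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical evaluation results: 8 examples split across two groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["X", "X", "X", "X", "Y", "Y", "Y", "Y"]

overall = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
rates = error_rate_by_group(y_true, y_pred, groups)
print(f"overall error rate: {overall:.2f}")
for group, rate in rates.items():
    print(f"group {group}: {rate:.2f}")
```

In this toy data the overall error rate of 25% hides the fact that every error falls on group Y, whose error rate is 50%.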

- Insufficient or poorly representative datasets lead to inaccurate predictions and unreliable results.

- Bias in training data perpetuates and amplifies existing societal biases, resulting in unfair or discriminatory outcomes.

- Algorithmic bias can occur even with seemingly unbiased data due to the design of the algorithm itself, requiring careful scrutiny of the AI's internal workings.

- Lack of diversity in development teams can exacerbate bias issues, as diverse perspectives are crucial in identifying and mitigating biases.
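Because bias can surface in a model's decisions even when the data looks clean, it also helps to check outputs directly. One common screening metric compares positive-outcome rates (for example, loan approvals) across groups and reports the ratio of the lowest rate to the highest. The sketch below uses made-up decisions, and the function names are hypothetical; the 0.8 cut-off echoes the "four-fifths rule" from US employment-selection guidance and is a rough heuristic, not a legal or universal test.

```python
def selection_rates(decisions, groups):
    """Fraction of positive decisions (e.g. loan approvals) per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest group selection rate."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody is selected in any group, so rates do not differ
    return min(rates.values()) / highest


# Hypothetical approvals (1 = approved) produced by a loan model.
decisions = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["P"] * 5 + ["Q"] * 5

ratio = disparate_impact_ratio(decisions, groups)
print(selection_rates(decisions, groups))
print(f"ratio = {ratio:.2f}", "(below 0.8: review)" if ratio < 0.8 else "")
```

A low ratio is a prompt for investigation rather than proof of discrimination; legitimate and illegitimate causes need to be separated by people who understand the domain.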

The Problem of Explainability and Transparency ("Black Box" Problem)

Many AI systems, particularly deep learning models, operate as "black boxes." Their decision-making processes are opaque, making it difficult to understand why an AI arrived at a particular conclusion. This lack of transparency presents a significant challenge in understanding the limits of AI learning. In high-stakes situations, such as medical diagnosis or loan applications, the inability to interpret AI decisions can have serious consequences.

The "black box" problem poses several challenges:

- Opaque AI systems are difficult to debug and improve: when you cannot see why a model made a mistake, finding and fixing the cause becomes significantly harder.

- Lack of transparency hinders the identification and correction of biases, making it difficult to ensure fairness and accountability.

- Concerns around legal and ethical accountability arise when AI decisions are unexplainable, especially when those decisions have significant consequences.

- The development of explainable AI (XAI) is crucial for building trust and accountability in AI systems. XAI aims to make the decision-making processes of AI more transparent and understandable.
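Some explainability tools do not open the black box at all; they probe it from the outside. Permutation feature importance is one such model-agnostic technique: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below implements it in plain Python against a hypothetical `black_box` function and synthetic data; for real projects, scikit-learn provides a maintained version as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is shuffled on its own."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)  # break the link between feature j and the labels
            shuffled = [row[:j] + (value,) + row[j + 1:] for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def black_box(row):
    """Hypothetical opaque model: we call it but never inspect it."""
    income, age, zip_digit = row  # this model ignores age and zip_digit
    return int(income > 50)


data_rng = random.Random(42)
rows = [(data_rng.randint(0, 100), data_rng.randint(18, 80), data_rng.randint(0, 9))
        for _ in range(200)]
labels = [black_box(row) for row in rows]

for name, score in zip(["income", "age", "zip_digit"],
                       permutation_importance(black_box, rows, labels)):
    print(f"{name}: {score:.3f}")
```

Only income should show a meaningful drop, because the stand-in model ignores the other two inputs. On real models, correlated features can share or mask importance, so treat these scores as clues rather than a complete explanation.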

The Limitations of Generalization and Transfer Learning

AI systems are trained to perform specific tasks based on the data they're given. Generalization refers to an AI's ability to perform well on unseen data, meaning data it wasn't explicitly trained on. However, current AI systems often struggle to generalize beyond the conditions represented in their training data. They tend to perform well within that narrow context but falter when faced with significantly different situations.
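A toy experiment makes this gap concrete. The sketch assumes the true relationship is y = x^2, fits a straight line to inputs drawn from [0, 1], and then evaluates the same line on shifted inputs from [2, 3]. The function names and ranges are illustrative assumptions; the point is only that low error on the training range says little about behavior outside it.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    return a, mean_y - a * mean_x


def mse(a, b, xs, ys):
    """Mean squared error of the line a*x + b on the given points."""
    return sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def true_fn(x):
    return x ** 2  # the "real-world" relationship, assumed for this demo


train_x = [i / 100 for i in range(101)]        # training inputs in [0, 1]
shifted_x = [2 + i / 100 for i in range(101)]  # deployment inputs in [2, 3]

a, b = fit_line(train_x, [true_fn(x) for x in train_x])
in_dist = mse(a, b, train_x, [true_fn(x) for x in train_x])
out_dist = mse(a, b, shifted_x, [true_fn(x) for x in shifted_x])
print(f"in-distribution MSE:     {in_dist:.4f}")
print(f"out-of-distribution MSE: {out_dist:.4f}")
```

The line looks excellent on the data it was fitted to and fails badly once inputs move outside that range, which is the same pattern, in miniature, that the text describes for larger models.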

Similarly, transfer learning, the process of transferring knowledge learned in one context to another, faces limitations. While promising, transfer learning isn't a magic bullet. It works best when the source and target domains are relatively similar; transferring knowledge between vastly different domains remains a challenge.

- AI models often struggle to generalize to situations significantly different from their training data, limiting their real-world applicability.

- Transfer learning, while promising, faces limitations when the source and target domains are too dissimilar, hindering the efficient reuse of learned knowledge.

- Continuous retraining and adaptation are crucial to maintain AI performance as the environment changes and new data becomes available.

- Robust testing and validation across diverse scenarios are essential to assess the generalization capabilities and limitations of AI systems.
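Deciding when to retrain requires a signal that incoming data has drifted away from what the model was trained on. One widely used signal is the Population Stability Index (PSI), which compares the binned distribution of a feature in a reference sample with a recent sample: PSI is the sum over bins of (a - e) * ln(a / e), where e and a are the reference and recent shares of each bin. The sketch below assumes a single numeric feature and hand-picked cut points; the helper names, the data, and the epsilon floor for empty bins are illustrative choices, and the 0.1 and 0.25 bands are commonly cited rules of thumb rather than a standard.

```python
import bisect
import math


def bin_shares(values, cut_points):
    """Share of values per bin; the first and last bins are open-ended."""
    counts = [0] * (len(cut_points) + 1)
    for value in values:
        counts[bisect.bisect_right(cut_points, value)] += 1
    return [count / len(values) for count in counts]


def population_stability_index(reference, recent, cut_points, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e)."""
    psi = 0.0
    for e, a in zip(bin_shares(reference, cut_points), bin_shares(recent, cut_points)):
        e, a = max(e, eps), max(a, eps)  # floor empty bins so the log stays finite
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi):
    """Rule-of-thumb bands; tune them for your own application."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift: investigate"
    return "significant drift: consider retraining"


reference = list(range(100))   # hypothetical feature values seen at training time
recent = list(range(20, 120))  # hypothetical values seen in production
cuts = [25, 50, 75]

psi = population_stability_index(reference, recent, cuts)
print(f"PSI = {psi:.3f} -> {drift_status(psi)}")
```

A drift alarm like this says that the inputs have changed, not that accuracy has dropped, so it works best alongside periodic evaluation on freshly labeled data.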

Responsible AI Development and Deployment Strategies

Addressing the limits of AI learning requires a proactive approach to responsible AI development and deployment. This involves prioritizing ethical considerations, implementing bias mitigation techniques, and ensuring human oversight.

Best practices for responsible AI include:

- Establishing clear ethical principles for AI development and deployment, guiding the design, development, and use of AI systems.

- Implementing robust data validation and bias detection techniques throughout the AI lifecycle, ensuring data quality and fairness.

- Promoting diverse and inclusive teams in AI development, bringing together diverse perspectives to identify and mitigate biases.

- Ensuring continuous monitoring and evaluation of AI systems in real-world applications, allowing for iterative improvements and risk mitigation.

- Establishing clear lines of accountability for AI-driven decisions, ensuring responsibility and transparency.
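Monitoring and accountability are easier to enforce when every AI-driven decision leaves a record. The sketch below assumes a hypothetical `DecisionRecord` schema that captures the model version, a named accountable owner, and whether the case was routed to a human because the model's confidence fell below a threshold. The field names, the 0.9 threshold, the model version string, and the owner label are all assumptions to adapt to your own setting.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One logged AI-driven decision (hypothetical schema)."""
    model_version: str
    input_id: str
    prediction: str
    confidence: float
    accountable_owner: str
    needs_human_review: bool
    timestamp: str


def route_decision(model_version, input_id, prediction, confidence,
                   owner, review_threshold=0.9):
    """Log a decision and flag low-confidence cases for human review."""
    record = DecisionRecord(
        model_version=model_version,
        input_id=input_id,
        prediction=prediction,
        confidence=confidence,
        accountable_owner=owner,
        needs_human_review=confidence < review_threshold,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    # A real system would write to durable, access-controlled storage, not stdout.
    print(json.dumps(asdict(record)))
    return record


r1 = route_decision("loan-model-1.3", "app-001", "approve", 0.97, "credit-risk-team")
r2 = route_decision("loan-model-1.3", "app-002", "deny", 0.62, "credit-risk-team")
```

Confidence thresholds are only one routing rule; high-stakes decision types may warrant human review regardless of how confident the model is.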

Conclusion: Understanding the Limits of AI Learning for Responsible Innovation

Understanding the limits of AI learning, including data dependency and bias, explainability challenges, and limited generalization, is critical for responsible AI innovation. By acknowledging these limitations and implementing robust development and deployment strategies, we can mitigate risks and build more ethical and beneficial AI systems. Continued research in areas such as explainable AI (XAI) and bias mitigation remains essential. With that understanding and a commitment to responsible development, we can harness the power of AI while building a more equitable and beneficial future. Let's work together to ensure that AI serves humanity responsibly.

Featured Posts

-

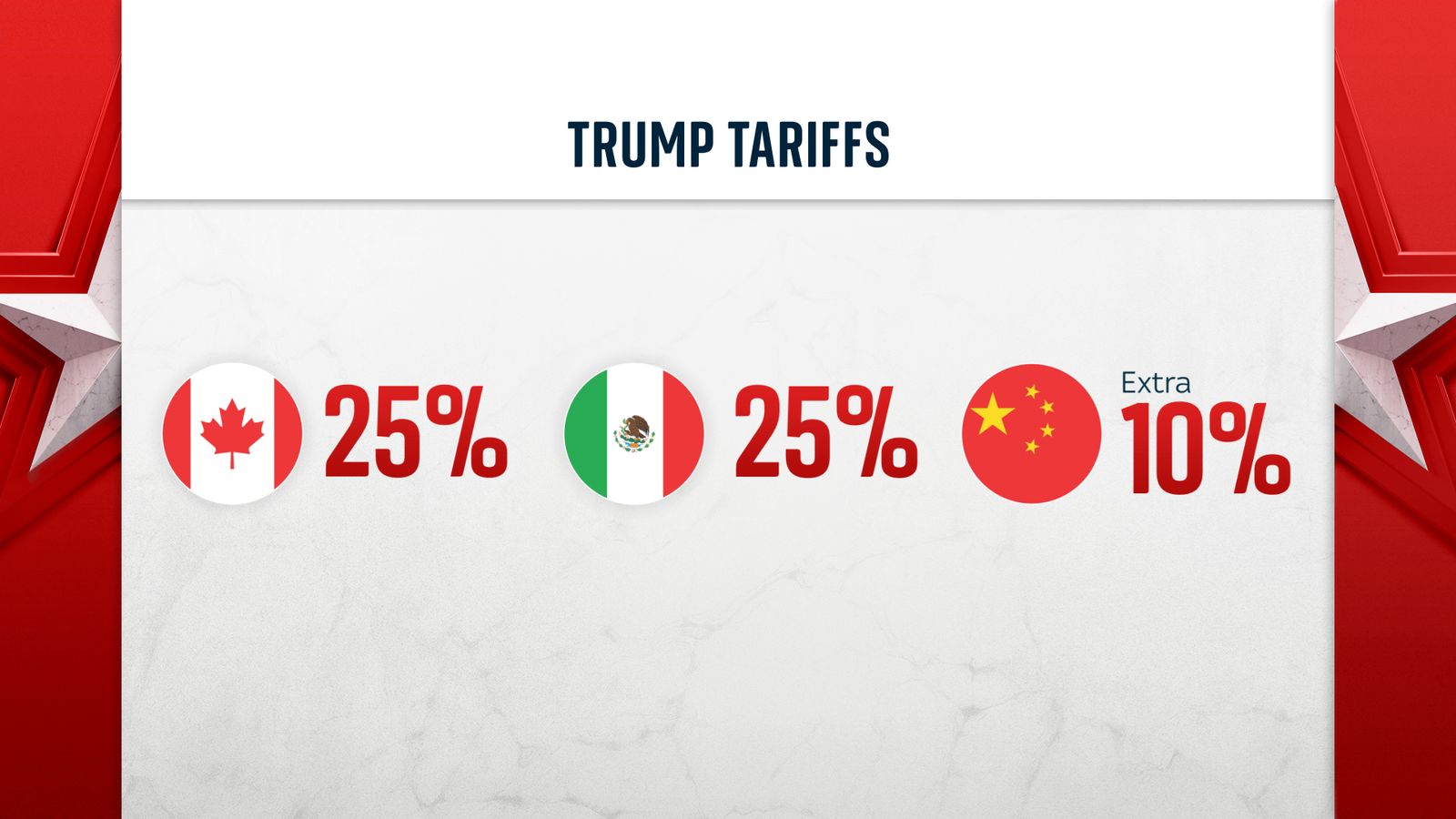

Court Blocks Trump Tariffs Whats The Backup Plan

May 31, 2025

Court Blocks Trump Tariffs Whats The Backup Plan

May 31, 2025 -

Get Ready For Fatal Fury A Riyadh Boxing Event In May

May 31, 2025

Get Ready For Fatal Fury A Riyadh Boxing Event In May

May 31, 2025 -

Climate Whiplash Cities Worldwide Confront Dangerous Impacts New Report Shows

May 31, 2025

Climate Whiplash Cities Worldwide Confront Dangerous Impacts New Report Shows

May 31, 2025 -

Canadian Red Cross Manitoba Wildfire Evacuee Support How To Help

May 31, 2025

Canadian Red Cross Manitoba Wildfire Evacuee Support How To Help

May 31, 2025 -

Cuatro Recetas De Emergencia Comida Rica Sin Luz Ni Gas

May 31, 2025

Cuatro Recetas De Emergencia Comida Rica Sin Luz Ni Gas

May 31, 2025