Why AI Doesn't Truly Learn: A Guide To Ethical AI Implementation

AI's Limitations: Pattern Recognition vs. True Understanding

The core difference between human learning and AI's "learning" lies in understanding. Humans learn through experience, integrating new knowledge with context, emotion, and critical thinking. AI, and machine learning in particular, excels at pattern recognition and statistical analysis: it identifies correlations in large datasets and uses them to make predictions and classifications. Finding correlations, however, is not the same as comprehending why they hold.
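
To make the distinction concrete, here is a deliberately simple sketch in Python. It assumes a toy dataset generated by the rule y = x² for x between 0 and 10, and a hypothetical 1-nearest-neighbor predictor (`fit_nearest_neighbor` is an illustrative name, not a library function) that answers by copying the label of the most similar training example. It reproduces the pattern where it has seen data, but it never learns the rule itself.

```python
def fit_nearest_neighbor(xs: list[float], ys: list[float]):
    """Return a predictor that answers with the label of the closest training input."""
    training = list(zip(xs, ys))

    def predict(x: float) -> float:
        # Pure pattern matching: find the most similar example seen in training
        # and copy its label. No attempt is made to infer the underlying rule.
        _, nearest_y = min(training, key=lambda pair: abs(pair[0] - x))
        return nearest_y

    return predict


# Toy training data produced by the rule y = x**2, but only for x in 0..10.
train_x = [float(x) for x in range(11)]
train_y = [x ** 2 for x in train_x]

model = fit_nearest_neighbor(train_x, train_y)

print(model(4.2))   # 16.0: close to the true 17.64, inside the training range
print(model(20.0))  # 100.0: the true value is 400.0, far outside the training range
```

Modern models interpolate far more smoothly than this toy, but they share the underlying limitation: nothing in the training process guarantees sensible behavior outside the region the data covers.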

- AI's pattern recognition operates without genuine understanding. AI systems lack the contextual awareness and critical thinking skills humans possess, and they cannot reliably extrapolate beyond the range of the data they were trained on.

- Examples of AI failures highlight this limitation:

  - Bias in datasets leading to discriminatory outcomes: AI trained on biased data can perpetuate and even amplify those biases, producing unfair or discriminatory outcomes in areas like loan applications or criminal justice. This problem is known as algorithmic bias.

  - Unexpected inputs causing system errors: AI struggles with novel situations or inputs that deviate significantly from its training data. This "out-of-distribution" problem can lead to unexpected and potentially harmful errors.

  - Inability to adapt to novel situations beyond trained parameters: AI systems are often brittle and lack the adaptability of human intelligence. They cannot easily generalize their knowledge to new and unforeseen circumstances.

- The "black box" problem: Many sophisticated AI algorithms, especially deep learning models, are opaque. Their decision-making processes are difficult, if not impossible, to interpret, which raises concerns about explainability and accountability. Without explainability, it is hard to identify and correct biases or errors; this is the gap the field of explainable AI (XAI) aims to close.
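
One simple mitigation for the out-of-distribution problem is to check whether an input resembles the training data before trusting the model's output. The sketch below is a minimal illustration, assuming numeric features and a per-feature z-score rule; the function name `fit_ood_guard`, the threshold of 3.0, and the applicant data are all hypothetical.

```python
from statistics import mean, stdev


def fit_ood_guard(training_rows: list[list[float]], threshold: float = 3.0):
    """Learn per-feature mean and standard deviation from training data and
    return a function that flags inputs far outside that range."""
    columns = list(zip(*training_rows))
    means = [mean(col) for col in columns]
    stdevs = [stdev(col) for col in columns]  # needs at least two training rows

    def is_out_of_distribution(row: list[float]) -> bool:
        for value, mu, sigma in zip(row, means, stdevs):
            if sigma == 0:
                # A constant feature in training: any different value is novel.
                if value != mu:
                    return True
            elif abs(value - mu) / sigma > threshold:
                # More than `threshold` standard deviations from the training mean.
                return True
        return False

    return is_out_of_distribution


# Hypothetical training data: [applicant age, annual income in thousands].
training = [[25, 40], [32, 55], [41, 62], [29, 48], [50, 75], [38, 58]]
guard = fit_ood_guard(training)

print(guard([35, 52]))   # False: similar to the training data
print(guard([35, 900]))  # True: income far outside anything seen in training
```

A per-feature check like this misses unusual combinations of individually normal values. More robust detectors compare inputs against the joint distribution of features, for example with distance- or density-based methods; the point here is only that "is this input like anything the model was trained on?" can be asked explicitly, and flagged inputs can be routed to a fallback or to human review.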

The Ethical Implications of Biased or Poorly Designed AI

Biased or poorly designed AI systems can have a profound societal impact, and their ethical implications are far-reaching enough to demand careful consideration.

- Algorithmic discrimination: Biased AI systems can perpetuate and amplify existing social inequalities, leading to discriminatory outcomes in areas such as:

  - Loan applications: AI-powered credit scoring systems may discriminate against certain demographic groups.

  - Hiring processes: AI-driven recruitment tools may exhibit bias against particular genders or ethnic groups.

  - Criminal justice: AI algorithms used in risk assessment may unfairly target specific populations.

- Spread of misinformation and deepfakes: Generative AI can produce convincing but false content, including deepfake images, audio, and video, which can spread misinformation, undermine trust, and destabilize societies.

- Job displacement: Automation driven by AI may lead to significant job displacement in various sectors, requiring careful consideration of its economic and social consequences.

The responsibility for mitigating these risks lies with developers, implementers, and policymakers. Transparency and accountability are paramount for responsible AI deployment, and addressing AI ethics requires a proactive approach rather than fixing harms after they occur.
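
Algorithmic discrimination in areas like loan approvals and hiring can be measured, not just discussed. One widely used starting point is to compare selection rates across groups; the "four-fifths rule" from US employment-selection guidelines treats a group's rate below 80% of the highest group's rate as a sign of possible adverse impact. The sketch below assumes decisions are available as (group, approved) pairs; the function names and the example data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of positive outcomes (e.g. loan approved) for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def adverse_impact_groups(decisions: list[tuple[str, bool]],
                          threshold: float = 0.8) -> list[str]:
    """Groups whose selection rate is below `threshold` times the highest rate
    (0.8 corresponds to the four-fifths rule)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return []  # nobody was selected, so the ratio is undefined
    return sorted(g for g, rate in rates.items() if rate / highest < threshold)


# Hypothetical audit data: group A approved 60 of 100, group B approved 30 of 100.
decisions = [("A", i < 60) for i in range(100)] + [("B", i < 30) for i in range(100)]

print(selection_rates(decisions))        # {'A': 0.6, 'B': 0.3}
print(adverse_impact_groups(decisions))  # ['B']: 0.3 / 0.6 = 0.5 < 0.8
```

A ratio below the threshold is a signal for closer investigation, not proof of discrimination, and a ratio above it does not rule discrimination out; fairness audits usually combine several metrics.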

Building Ethical AI: Best Practices and Guidelines

Building ethical AI requires a concerted effort to address the limitations and potential harms associated with AI systems.

- Diverse and representative datasets: Training AI models on diverse and representative datasets is crucial for mitigating bias. This involves careful data curation and preprocessing to ensure fairness and inclusivity, and it is a cornerstone of responsible AI development.

- Bias detection and mitigation: Detecting and mitigating bias in AI algorithms is essential. Common approaches include fairness-aware algorithms and regular bias audits of AI systems.

- Ongoing monitoring and evaluation: Continuous monitoring and evaluation of AI systems are necessary to identify and address emerging biases or unintended consequences.

- Human-in-the-loop systems and human oversight: Incorporating human oversight and feedback into AI systems helps ensure accountability and mitigate risks. This involves designing systems in which humans retain control and can intervene when necessary, which is why AI governance is crucial here.

- Ethical guidelines and regulations: Adhering to relevant regulations, such as the GDPR, and to ethical guidelines, such as the OECD Principles on AI, is vital for responsible AI development. Together, binding regulations and voluntary guidelines form the basis of an AI governance framework.
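
Ongoing monitoring can start with something as simple as checking whether the data a deployed model sees still looks like the data it was trained on. The article does not prescribe a method; the sketch below swaps in the population stability index (PSI), a drift metric commonly used in credit scoring. The bin edges, example scores, and the function name `population_stability_index` are assumptions for illustration.

```python
import math
from bisect import bisect_right


def population_stability_index(expected: list[float], actual: list[float],
                               bin_edges: list[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%).

    `expected` holds feature values from training time, `actual` from production.
    Values below the first edge or above the last edge fall into the end bins.
    """
    interior_edges = bin_edges[1:-1]
    n_bins = len(bin_edges) - 1

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            counts[bisect_right(interior_edges, v)] += 1
        # eps stands in for empty bins so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    exp_p = proportions(expected)
    act_p = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_p, act_p))


# Hypothetical model scores: training data spread evenly across 0..99.
edges = [0, 25, 50, 75, 100]
training_scores = list(range(100))

print(population_stability_index(training_scores, list(range(100)), edges))  # 0.0
drifted = [s + 50 for s in range(100)]  # production scores shifted upward
print(population_stability_index(training_scores, drifted, edges) > 0.25)  # True
```

A frequently quoted rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a significant shift worth investigating, though teams usually calibrate alert thresholds to their own data.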

The Future of Ethical AI Development

The future of ethical AI development hinges on several key advancements and initiatives.

- Explainable AI (XAI): Advancements in XAI will improve transparency and accountability, enabling us to understand and scrutinize AI's decision-making processes.

- Interdisciplinary collaboration: Building ethical AI requires collaboration between computer scientists, ethicists, social scientists, and policymakers.

- Ongoing research and development: Continued research and development in AI ethics are essential for addressing emerging challenges and ensuring that AI benefits humanity.

- Public education and engagement: Public education and engagement are crucial for fostering informed discussions about the implications of AI and shaping its future. AI safety and AI transparency are key concepts in these discussions.
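
Permutation feature importance is one model-agnostic XAI technique that fits the explainability goal described above: shuffle one input feature at a time and measure how much the model's accuracy drops. A large drop means the model relies on that feature; no drop means it ignores it. The sketch below treats the model as an opaque `predict` function; `black_box_model` and the toy data are hypothetical, and scikit-learn offers a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(predict, rows, labels) -> float:
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(predict, rows, labels, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is shuffled.

    `predict` is treated as a black box: only its outputs are observed.
    """
    rng = random.Random(seed)  # fixed seed for reproducible shuffles
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Replace feature j with its shuffled values, keeping the others intact.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def black_box_model(row):
    # Hypothetical opaque model: it only looks at feature 0.
    return 1 if row[0] > 0.5 else 0


# Toy data: 20 rows with two features in [0, 1).
rows = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
labels = [black_box_model(r) for r in rows]

print(permutation_importance(black_box_model, rows, labels))
# feature 0 gets a positive score; feature 1 gets exactly 0.0
```

Here the second feature receives an importance of exactly zero because the hypothetical model never reads it. Applied to a real credit or hiring model, the same probe could reveal heavy reliance on a feature that acts as a proxy for a protected attribute.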

Conclusion: Towards a More Ethical Future with AI

Understanding the limitations of current AI systems and their ethical implications is crucial for responsible AI development and implementation. AI's pattern-recognition capabilities, while impressive, fall short of true human-like learning and understanding, and this gap can lead to biases, errors, and societal harms. By prioritizing diverse datasets, bias mitigation techniques, ongoing monitoring, human oversight, and adherence to ethical guidelines, we can work toward a future where AI truly benefits humanity. Dive deeper into ethical AI, and let's work together to shape a future where technology serves us responsibly.

Featured Posts

-

Update New Covid 19 Variant And Increased Case Numbers Who Report

May 31, 2025

Update New Covid 19 Variant And Increased Case Numbers Who Report

May 31, 2025 -

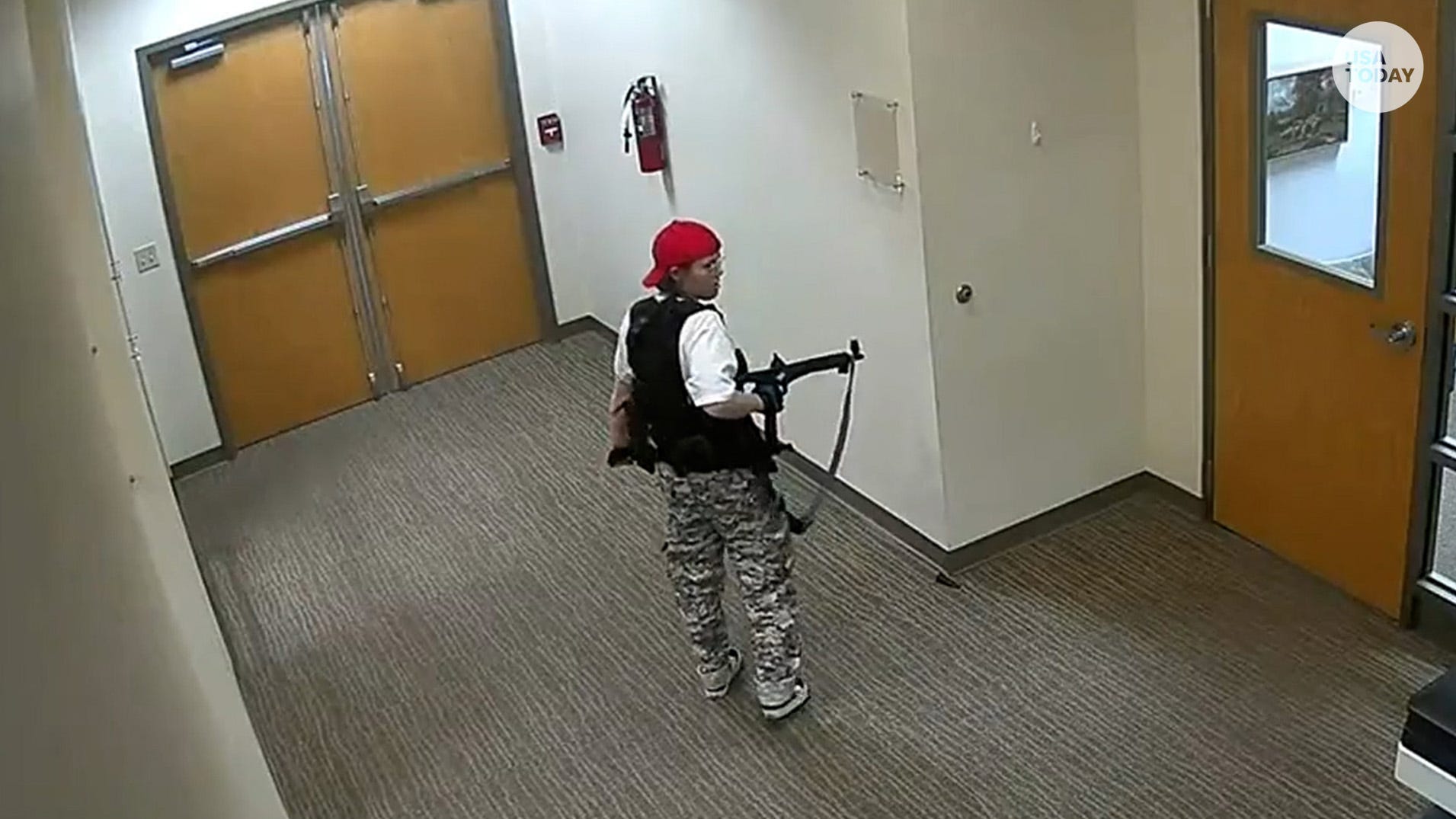

Do Algorithms Contribute To Mass Shooter Radicalization Holding Tech Companies Accountable

May 31, 2025

Do Algorithms Contribute To Mass Shooter Radicalization Holding Tech Companies Accountable

May 31, 2025 -

Report Scott Bessents Verbal Attack On Elon Musk Within Trumps Hearing

May 31, 2025

Report Scott Bessents Verbal Attack On Elon Musk Within Trumps Hearing

May 31, 2025 -

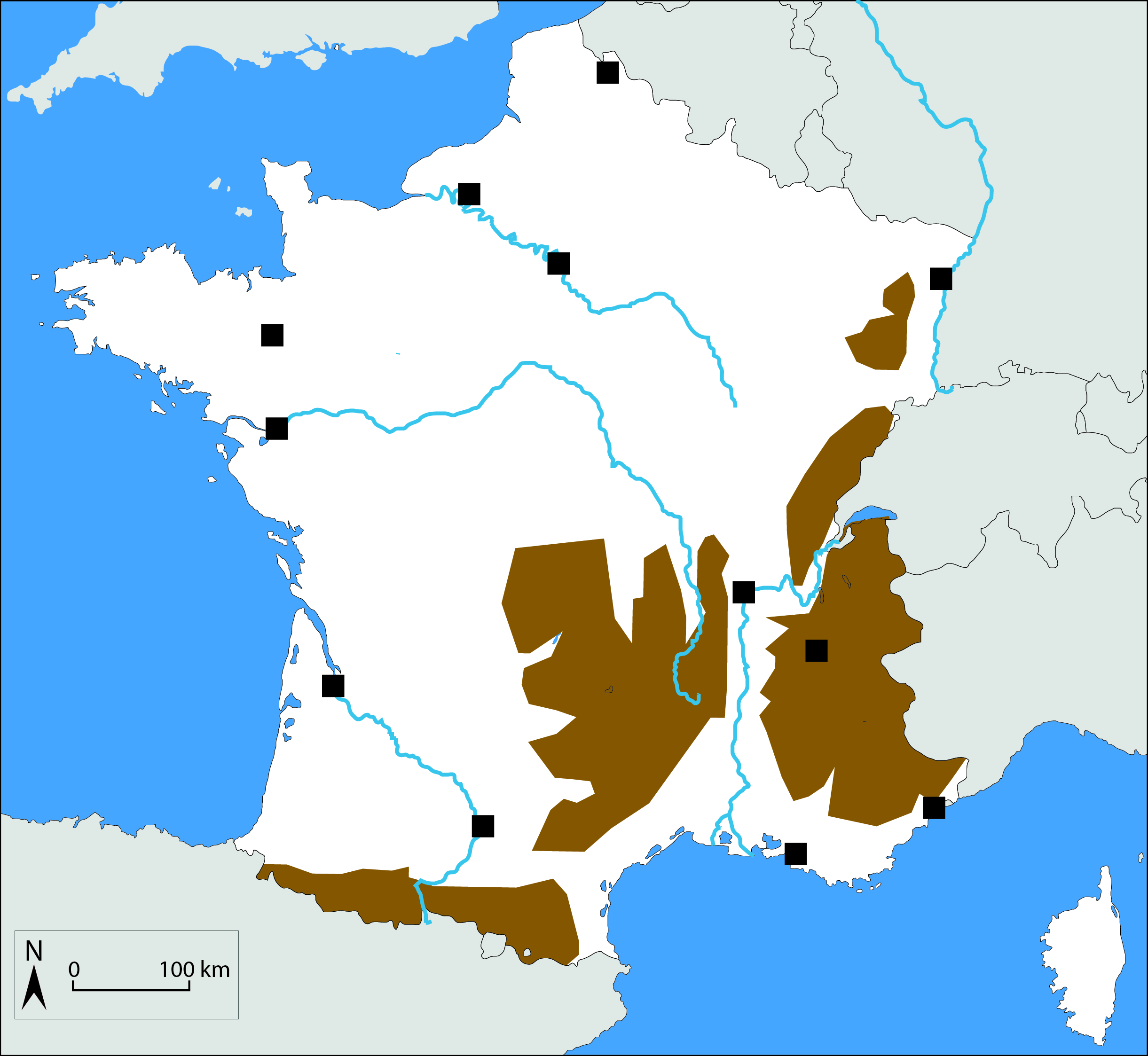

Port Saint Louis Du Rhone Le Rythme Des Mers Et Oceans Au Festival De La Camargue

May 31, 2025

Port Saint Louis Du Rhone Le Rythme Des Mers Et Oceans Au Festival De La Camargue

May 31, 2025 -

Canadian Wildfires A Public Health Emergency In Minnesota

May 31, 2025

Canadian Wildfires A Public Health Emergency In Minnesota

May 31, 2025