OpenAI Simplifies Voice Assistant Development: 2024 Developer Event Highlights

Streamlined Speech-to-Text and Text-to-Speech Capabilities

OpenAI announced improvements to its speech-to-text and text-to-speech APIs: higher accuracy, faster processing, and support for more languages. Accurate recognition and natural-sounding output are the foundation of a usable voice interface, so these changes are meant to improve the user experience and reduce development effort at the same time.

- Improved accuracy in noisy environments: The new APIs are described as markedly more accurate in difficult acoustic conditions, which suits real-world applications where background noise is common. The gain is attributed to machine learning models trained on large, diverse sets of audio recordings.

- Faster real-time transcription: Many voice assistant applications depend on real-time transcription. Faster processing makes interactions more responsive, which matters most where feedback must be immediate, such as live captioning or real-time translation.

- More natural-sounding voice synthesis: The updated text-to-speech output is more natural and expressive, with less of the robotic quality associated with earlier synthesis technology. More natural speech tends to improve user engagement and satisfaction.

- Expanded language support: The APIs now cover a wider range of languages, so developers can build voice assistants for a global audience.

- Easier integration with existing workflows: The APIs are designed to work with popular programming languages and development frameworks, which shortens the learning curve.
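
To make the speech-in, speech-out flow described above concrete, here is a minimal sketch of a single assistant turn. It assumes three pluggable steps (transcribe, respond, synthesize); the names `VoiceTurn`, `run_turn`, and the `fake_*` helpers are hypothetical and do not come from the announcement. In a real application the transcribe and synthesize steps would wrap the speech-to-text and text-to-speech API calls (in the `openai` Python package these are exposed as `client.audio.transcriptions.create` and `client.audio.speech.create`; check the current API reference for model names and parameters). Stand-ins are used here so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceTurn:
    """Result of one assistant turn: what was heard, the reply, and the reply audio."""
    heard: str
    reply: str
    reply_audio: bytes


def run_turn(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    respond: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> VoiceTurn:
    """Run one speech-in, speech-out turn using the three injected steps."""
    heard = transcribe(audio).strip()
    if not heard:
        # Nothing intelligible was recognized, so ask the user to repeat.
        reply = "Sorry, I didn't catch that."
    else:
        reply = respond(heard)
    return VoiceTurn(heard=heard, reply=reply, reply_audio=synthesize(reply))


# Stand-in implementations so the sketch runs without network access.
# All three are hypothetical placeholders for real API calls.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already text


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the text bytes are audio


turn = run_turn(b"turn on the lights", fake_transcribe, fake_respond, fake_synthesize)
print(turn.reply)
```

Passing the steps in as functions keeps the turn logic independent of any particular speech provider, which also makes it easy to test without audio hardware or an API key.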

Enhanced Natural Language Understanding (NLU) for Smarter Assistants

OpenAI's advances in Natural Language Understanding (NLU) help developers build voice assistants that better understand nuanced conversation, context, and user intent. The underlying models are designed to process and interpret complex linguistic structures.

- Improved intent recognition and classification: The new NLU models identify the user's intent even when it is expressed indirectly or ambiguously, so the assistant can give relevant responses in complex conversational scenarios.

- Enhanced dialogue management: The assistant can maintain context across multiple turns, which makes conversations more fluid and less repetitive.

- Better context awareness: By following the overall flow of the conversation, the assistant can give more relevant responses, and the interaction feels more intuitive.

- Advanced sentiment analysis: Analyzing the user's sentiment lets the assistant adapt its responses, making interactions more empathetic and engaging.

- Pre-trained models for faster development: OpenAI offers pre-trained NLU models, which reduces the time and resources needed to build a sophisticated voice assistant.
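
Maintaining context across turns, as described above, usually comes down to sending recent conversation history along with each new request. The following is a minimal sketch of that idea, assuming a chat-style message list of `role`/`content` dictionaries; the `Conversation` class and its `max_messages` parameter are hypothetical illustrations, not part of any announced API.

```python
from collections import deque
from typing import Deque, Dict, List


class Conversation:
    """Keeps a system prompt plus the most recent messages of a dialogue."""

    def __init__(self, system_prompt: str, max_messages: int = 6) -> None:
        self.system_prompt = system_prompt
        # A deque with maxlen drops the oldest message once it is full.
        self._history: Deque[Dict[str, str]] = deque(maxlen=max_messages)

    def add_user(self, text: str) -> None:
        self._history.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self._history.append({"role": "assistant", "content": text})

    def messages(self) -> List[Dict[str, str]]:
        """Return the message list to send with the next request."""
        return [{"role": "system", "content": self.system_prompt}, *self._history]


convo = Conversation("You are a helpful voice assistant.", max_messages=4)
convo.add_user("What's the weather in Paris?")
convo.add_assistant("It is sunny in Paris.")
convo.add_user("And tomorrow?")  # only answerable with the earlier turns as context
print(len(convo.messages()))
```

Capping the history bounds the size of each request; the trade-off is that the assistant forgets anything older than the last `max_messages` messages.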

New Tools and Resources for Easier Integration

OpenAI announced new SDKs, improved API documentation, and tutorials to simplify integrating its voice technologies into applications. Developer support of this kind is a precondition for widespread adoption.

- New SDKs for popular programming languages: The SDKs provide easy-to-use interfaces to OpenAI's voice technologies and support popular languages such as Python and JavaScript.

- Comprehensive, updated API documentation: OpenAI provides detailed and current API documentation, making the APIs easier to understand and use effectively.

- Interactive tutorials and code examples: Tutorials and ready-made code examples help developers learn the APIs quickly and integrate them into their projects.

- Expanded community forums and support resources: Developers can ask questions, share knowledge, and collaborate through forums and other support channels.

- Open-source contributions: OpenAI encourages open-source contributions to foster collaboration and accelerate the development of voice assistant technologies.

Focus on Privacy and Security in Voice Assistant Development

OpenAI emphasized its commitment to responsible AI development and outlined measures to protect the privacy and security of user data in voice assistant applications. This commitment is essential for building the user trust on which adoption depends.

- Enhanced data encryption and anonymization: OpenAI employs encryption and anonymization techniques to protect user data throughout its lifecycle.

- Clear guidelines on data handling and user consent: OpenAI provides guidelines on how user data is handled and states that users retain control over their data and over what they consent to.

- Commitment to ethical AI development practices: OpenAI commits to developing AI responsibly, taking ethical implications and societal impact into account.

- Transparent data usage policies: OpenAI maintains data usage policies that tell users clearly how their data is used.
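
Developers handling transcripts in their own applications can apply a similar principle on their side. The sketch below is an illustration only: it is not OpenAI's anonymization method, and the `redact` function and its two patterns are hypothetical. It assumes the goal is to mask e-mail addresses and phone-like numbers in a transcript before it is logged or stored. The regular expressions are deliberately rough and will miss some formats and over-match others.

```python
import re

# Rough patterns for illustration; production redaction needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# A digit, at least seven digits/separators, then a closing digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(transcript: str) -> str:
    """Mask e-mail addresses and phone-like numbers in a transcript."""
    text = EMAIL_RE.sub("[EMAIL]", transcript)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Email jane.doe@example.com or call +1 (555) 010-9999 after 5 pm."))
# prints: Email [EMAIL] or call [PHONE] after 5 pm.
```

Redacting before storage means that logs and analytics never contain the raw identifiers, which limits exposure if those systems are later compromised.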

Conclusion

OpenAI's 2024 Developer Event marked a clear step toward simpler voice assistant development. The improved speech APIs, enhanced NLU capabilities, and new developer resources let developers build more capable and user-friendly voice assistants across a wide range of applications. Developers who want to explore these capabilities can start with OpenAI's SDKs, documentation, and tutorials.

Featured Posts

-

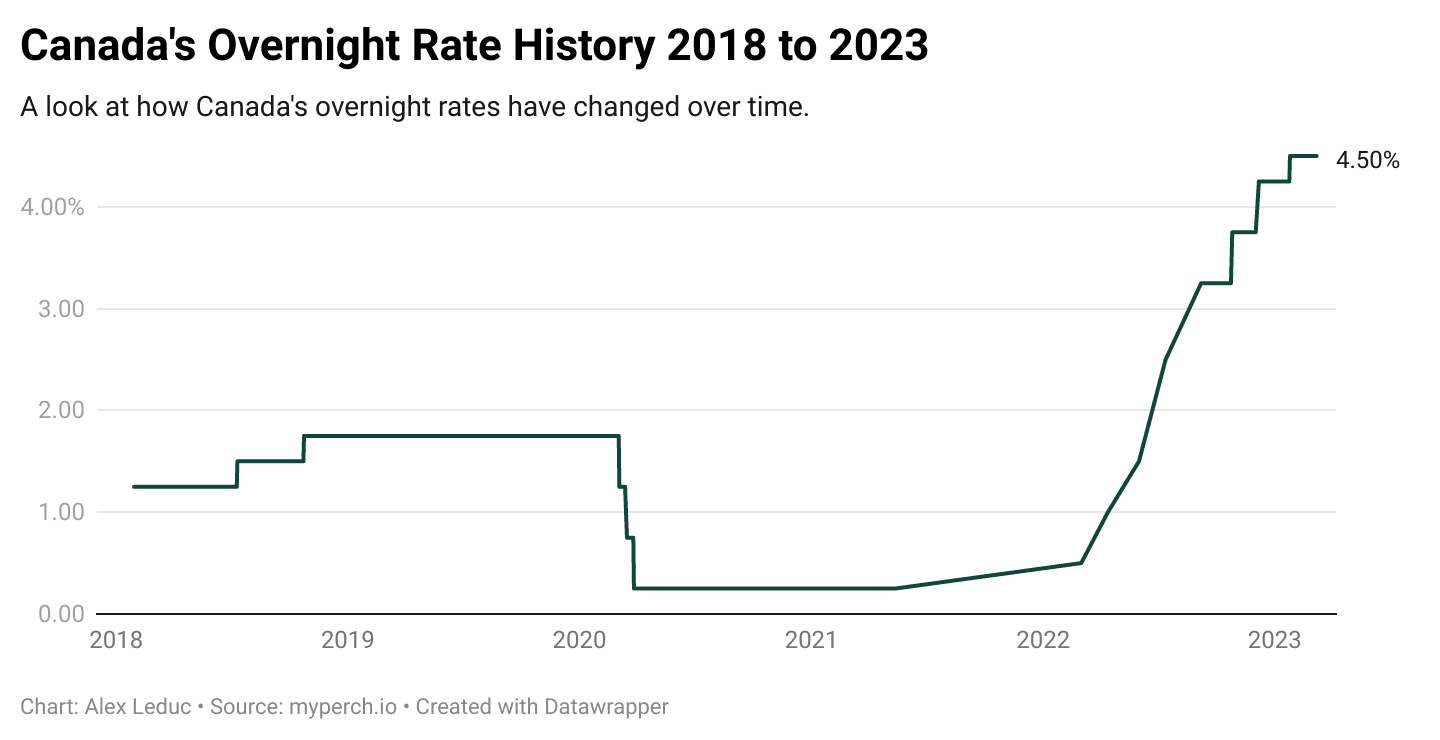

Bank Of Canada Rate Pause Expert Analysis From Fp Video

Apr 22, 2025

Bank Of Canada Rate Pause Expert Analysis From Fp Video

Apr 22, 2025 -

Addressing High Stock Market Valuations Bof As Argument For Investor Confidence

Apr 22, 2025

Addressing High Stock Market Valuations Bof As Argument For Investor Confidence

Apr 22, 2025 -

Ohio Derailment Persistent Toxic Chemical Contamination In Buildings

Apr 22, 2025

Ohio Derailment Persistent Toxic Chemical Contamination In Buildings

Apr 22, 2025 -

Russias Renewed Offensive Ukraine Faces Deadly Aerial Barrage Us Pushes For Peace

Apr 22, 2025

Russias Renewed Offensive Ukraine Faces Deadly Aerial Barrage Us Pushes For Peace

Apr 22, 2025 -

Tracking The Karen Read Murder Trials A Year By Year Account

Apr 22, 2025

Tracking The Karen Read Murder Trials A Year By Year Account

Apr 22, 2025